Did you know that you can add and multiply orders? For any two order structures $A$ and $B$, we can form the ordered sum $A+B$ and the ordered product $A\otimes B$, along with other natural operations, such as the disjoint sum $A\sqcup B$, which altogether make an arithmetic of orders. We combine orders with these operations to make new orders, often with interesting properties. Let us explore the resulting algebra of orders!

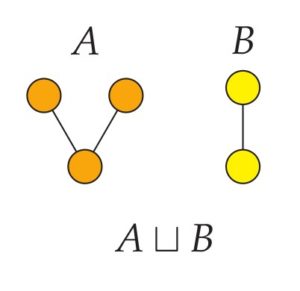

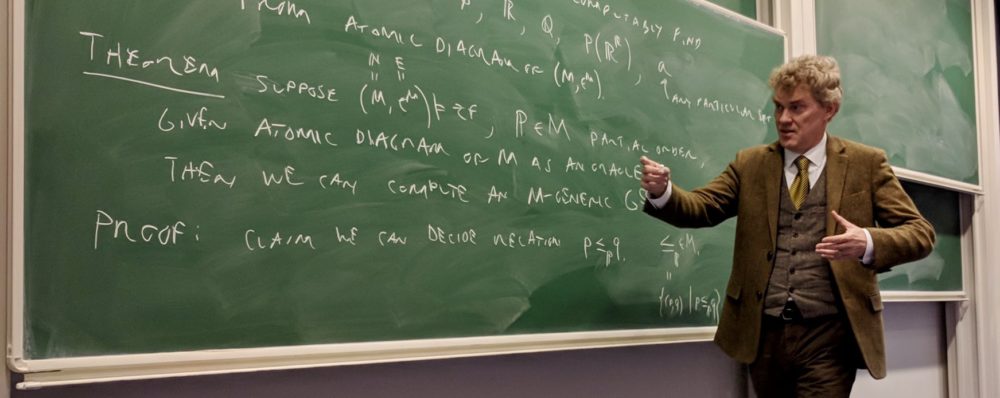

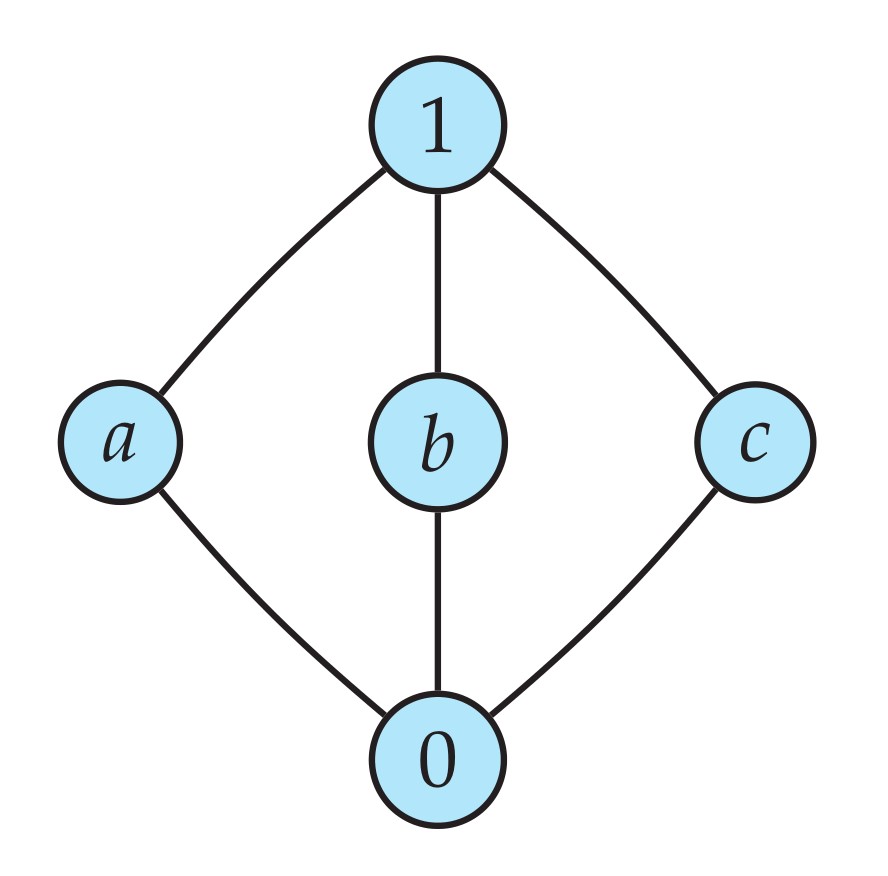

One of the most basic operations that we can use to combine two orders is the disjoint sum operation $A\sqcup B$. This is the order resulting from placing a copy of $A$ adjacent to a copy of $B$, side-by-side, forming a combined order with no instances of the order relation between the two parts. If $A$ is the orange three-node order here and $B$ is the yellow linear order, for example, then $A\sqcup B$ is the combined order with all five nodes.

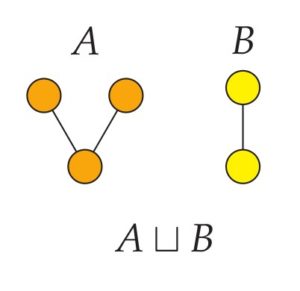

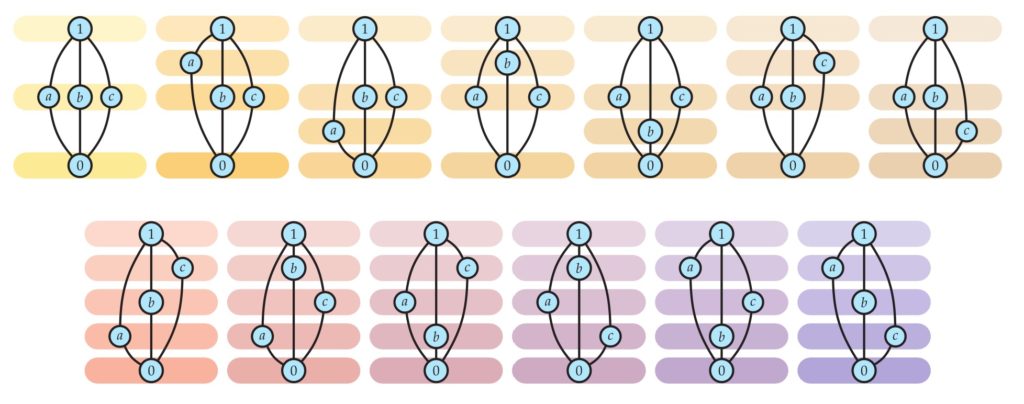

Another kind of addition is the ordered sum of two orders $A+B$, which is obtained by placing a copy of $B$ above a copy of $A$, as indicated here by adding the orange copy of $A$ and the yellow copy of $B$. Also shown is the sum $B+A$, with the summands reversed, so that we take $B$ below and $A$ on top. It is easy to check that the ordered sum of two orders is an order. One notices immediately, of course, that the resulting ordered sums $A+B$ and $B+A$ are not the same! The order $A+B$ has a greatest element, whereas $B+A$ has two maximal elements. So the ordered sum operation on orders is not commutative. Nevertheless, we shall still call it addition. The operation, which has many useful and interesting features, goes back at least to the 19th century with Cantor, who defined the addition of well orders this way.

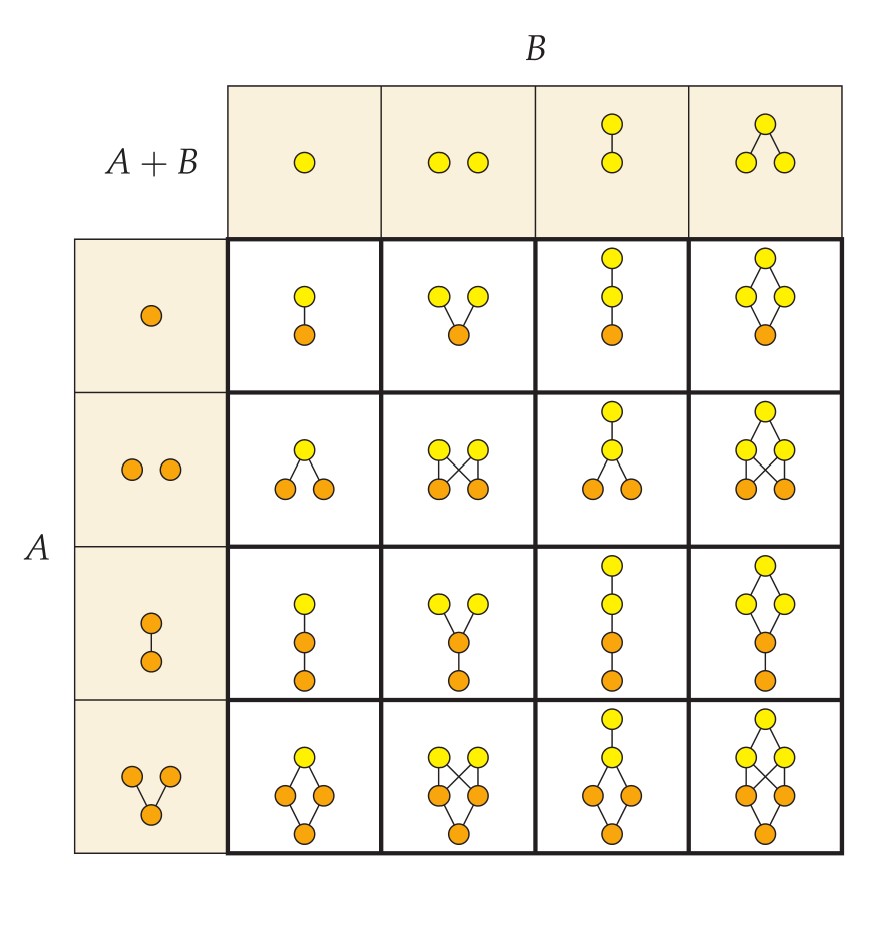

To illustrate further examples, I have assembled here an addition table for several simple finite orders. The choices for $A$ appear down the left side and those for $B$ at the top, with the corresponding sum $A+B$ displayed in each cell accordingly.

We can combine the two order addition operations, forming a variety of other orders this way.

The reader is encouraged to explore further how to add various finite orders using these two forms of addition. What is the smallest order that you cannot generate from the one-point order $1$ using $\sqcup$ and $+$? Please answer in the comments.

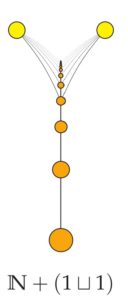

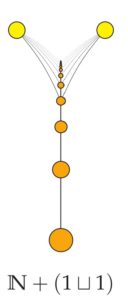

We can also add infinite orders. Displayed here, for example, is the order $\mathbb{N}+(1\sqcup 1)$, the natural numbers wearing two yellow caps. The two yellow nodes at the top form a copy of $1\sqcup 1$, two incomparable points, while the natural numbers are the orange nodes below. Every natural number (yes, all infinitely many of them) is below each of the two nodes at the top, which are incomparable to each other. Notice that even though we have Hasse diagrams for each summand order here, there can be no minimal Hasse diagram for the sum. Any particular line from a natural number to the top would be implied via transitivity by higher such lines, and yet we would need such lines, since they are not implied by the lower lines. So there is no minimal Hasse diagram.

This order happens to illustrate what is called an exact pair, which occurs in an order when a pair of incomparable nodes bounds a chain below, with the property that any node below both members of the pair is below something in the chain. This phenomenon occurs in sometimes unexpected contexts—any countable chain in the hierarchy of Turing degrees in computability theory, for example, admits an exact pair.

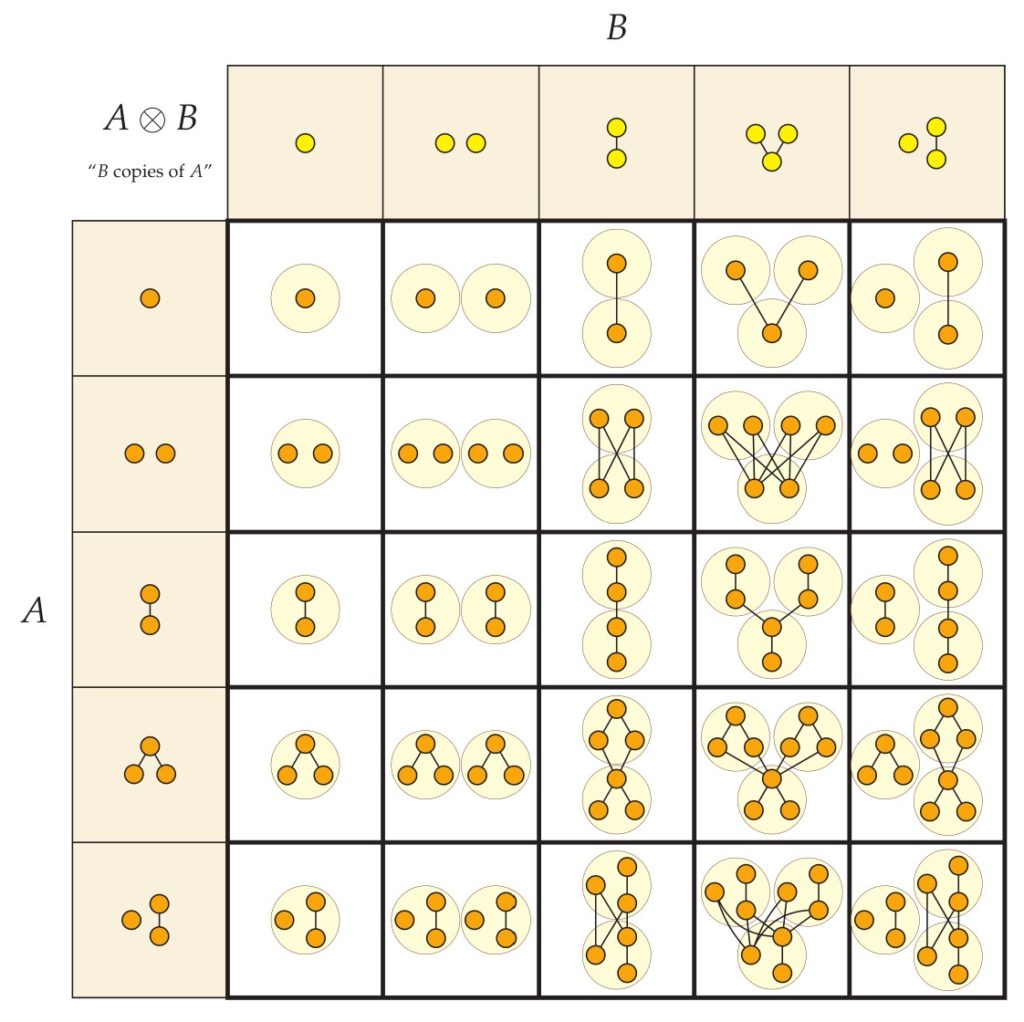

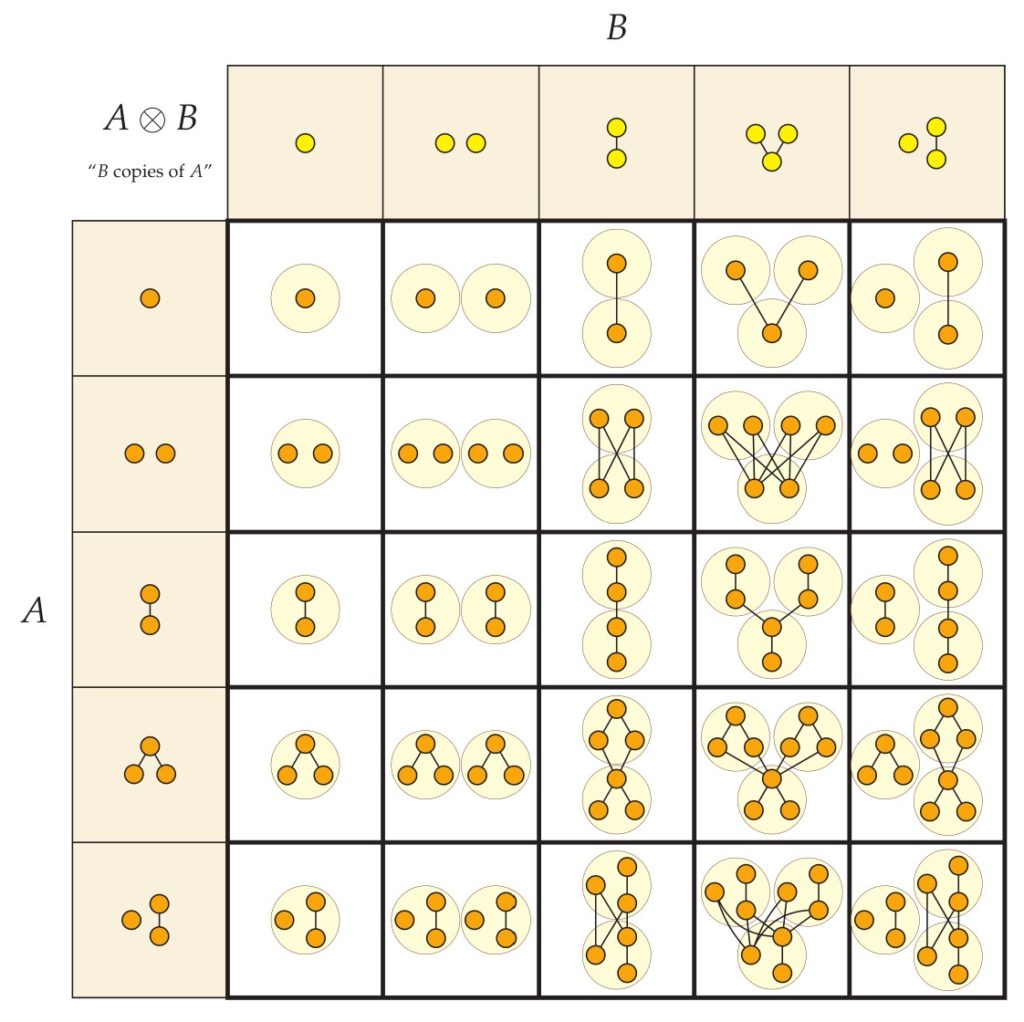

Let us turn now to multiplication. The ordered product $A\otimes B$ is the order resulting from having $B$ many copies of $A$. That is, we replace each node of $B$ with an entire copy of the $A$ order. Within each of these copies of $A$, the order relation is just as in $A$, but for the order relation between nodes in different copies of $A$, we follow the $B$ relation. It is not difficult to check that indeed this is an order relation. We can illustrate here with the same two orders we had earlier.

In forming the ordered product $A\otimes B$, in the center here, we take the two yellow nodes of $B$, shown greatly enlarged in the background, and replace them with copies of $A$. So we have ultimately two copies of $A$, one atop the other, just as $B$ has two nodes, one atop the other. We have added the order relations between the lower copy of $A$ and the upper copy, because in $B$ the lower node is related to the upper node. The order $A\otimes B$ consists only of the six orange nodes; the large highlighted yellow nodes of $B$ here serve merely as a helpful indication of how the product is formed and are not in any way part of the product order $A\otimes B$.

Similarly, with $B\otimes A$, at the right, we have the three enlarged orange nodes of $A$ in the background, which have each been replaced with copies of $B$. The nodes of each of the lower copies of $B$ are related to the nodes in the top copy, because in $A$ the two lower nodes are related to the upper node.

I have assembled a small multiplication table here for some simple finite orders.

So far we have given an informal account of how to add and multiply ordered structures. So let us briefly be a little more precise and formal with these matters.

In fact, when it comes to addition, there is a slightly irritating matter in defining what the sums $A\sqcup B$ and $A+B$ are exactly. Specifically, what are the domains? We would like to conceive of the domains of $A\sqcup B$ and $A+B$ simply as the union of the domains of $A$ and $B$. That is, we'd like just to throw the two domains together and form the sum order using that combined domain, placing $A$ on the $A$ part and $B$ on the $B$ part (and adding relations from the $A$ part to the $B$ part for $A+B$). Indeed, this works fine when the domains of $A$ and $B$ are disjoint, that is, if they have no points in common. But what if the domains of $A$ and $B$ overlap? In this case, we can't seem to use the union in this straightforward manner. In general, we must disjointify the domains: we take copies of $A$ and $B$, if necessary, on domains that are disjoint, so that we can form the sums $A\sqcup B$ and $A+B$ on the union of those nonoverlapping domains.

What do we mean precisely by “taking a copy” of an ordered structure $A$? This way of talking in mathematics partakes in the philosophy of structuralism. We only care about our mathematical structures up to isomorphism, after all, and so it doesn’t matter which isomorphic copies of $A$ and $B$ we use; the resulting order structures $A\sqcup B$ will be isomorphic, and similarly for $A+B$. In this sense, we are defining the sum orders only up to isomorphism.

Nevertheless, we can be definite about it, if only to verify that indeed there are copies of $A$ and $B$ available with disjoint domains. So let us construct a set-theoretically specific copy of $A$, replacing each individual $a$ in the domain of $A$ with $(a,\text{orange})$, for example, and replacing the elements $b$ in the domain of $B$ with $(b,\text{yellow})$. If “orange” is a specific object distinct from “yellow,” then these new domains will have no points in common, and we can form the disjoint sum $A\sqcup B$ by using the union of these new domains, placing the $A$ order on the orange objects and the $B$ order on the yellow objects.
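The color-tagging construction can be tried out on small finite orders. The following is only a minimal sketch, assuming a finite order is stored as a pair (domain, set of pairs $(x,y)$ with $x\leq y$); the helper names `make_order`, `tag`, `disjoint_sum`, `ordered_sum`, and `maximal` are hypothetical, and the two sample orders are chosen for illustration, deliberately sharing the points 0 and 1 so that disjointification is actually needed.

```python
def make_order(points, leq):
    """Return (domain, relation); relation holds the pairs (x, y) with x <= y."""
    pts = list(points)
    return frozenset(pts), frozenset((x, y) for x in pts for y in pts if leq(x, y))

def tag(order, color):
    """Copy an order onto the disjointified domain of pairs (x, color)."""
    dom, rel = order
    return (frozenset((x, color) for x in dom),
            frozenset(((x, color), (y, color)) for x, y in rel))

def disjoint_sum(A, B):
    """Orange copy of A beside a yellow copy of B, with no relations across."""
    (dom_a, rel_a), (dom_b, rel_b) = tag(A, "orange"), tag(B, "yellow")
    return dom_a | dom_b, rel_a | rel_b

def ordered_sum(A, B):
    """Orange copy of A below a yellow copy of B."""
    (dom_a, rel_a), (dom_b, rel_b) = tag(A, "orange"), tag(B, "yellow")
    across = frozenset((x, y) for x in dom_a for y in dom_b)
    return dom_a | dom_b, rel_a | rel_b | across

def maximal(order):
    """Points with nothing strictly above them."""
    dom, rel = order
    return {x for x in dom if not any(x != y and (x, y) in rel for y in dom)}

# Illustrative assumption: A has one point below two incomparable points,
# and B is a two-point chain. Their domains overlap on 0 and 1.
A = make_order([0, 1, 2], lambda x, y: x == y or x == 0)
B = make_order([0, 1], lambda x, y: x <= y)

print(len(disjoint_sum(A, B)[0]))      # all five points survive the union
print(len(maximal(ordered_sum(A, B)))) # B on top: a single greatest element
print(len(maximal(ordered_sum(B, A)))) # A on top: two maximal elements
```

With these sample orders the two ordered sums differ in their number of maximal elements, which is one concrete way to see that the ordered sum is not commutative.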

Although one can use this specific disjointifying construction to define what $A\sqcup B$ and $A+B$ mean as specific structures, I would find it to be a misunderstanding of the construction to take it as a suggestion that set theory is anti-structuralist. Set theorists are generally as structuralist as they come in mathematics, and in light of Dedekind’s categorical account of the natural numbers, one might even find the origin of the philosophy of structuralism in set theory. Rather, the disjointifying construction is part of the general proof that set theory abounds with isomorphic copies of whatever mathematical structure we might have, and this is part of the reason why it serves well as a foundation of mathematics for the structuralist. To be a structuralist means not to care which particular copy one has, to treat one’s mathematical structures as invariant under isomorphism.

But let me mention a certain regrettable consequence of defining the operations by means of a specific such disjointifying construction in the algebra of orders. Namely, it will turn out that neither the disjoint sum operation nor the ordered sum operation, as an operation on order structures, is associative. For example, if we use $1$ to represent the one-point order, then $1\sqcup 1$ means the two-point side-by-side order, one orange and one yellow, but really what we mean is that the points of the domain are $\{(a,\text{orange}),(a,\text{yellow})\}$, where the original order $1$ is on domain $\{a\}$. The order $(1\sqcup 1)\sqcup 1$ then means that we take an orange copy of that order plus a single yellow point. This will have domain

$$\{\,((a,\text{orange}),\text{orange}),\ ((a,\text{yellow}),\text{orange}),\ (a,\text{yellow})\,\}.$$

The order $1\sqcup(1\sqcup 1)$, in contrast, means that we take a single orange point plus a yellow copy of $1\sqcup 1$, leading to the domain

$$\{\,(a,\text{orange}),\ ((a,\text{orange}),\text{yellow}),\ ((a,\text{yellow}),\text{yellow})\,\}.$$

These domains are not the same! So as order structures, the order $(1\sqcup 1)\sqcup 1$ is not identical with $1\sqcup(1\sqcup 1)$, and therefore the disjoint sum operation is not associative. A similar problem arises with $(1+1)+1$ and $1+(1+1)$.
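One can watch the two domains come apart by computing them directly. This is a small sketch under the assumption that the one-point order lives on the domain `{"a"}` and that copies are tagged with the strings `"orange"` and `"yellow"`; the function names are hypothetical.

```python
def tag(domain, color):
    """Disjointified copy of a domain: each point x becomes (x, color)."""
    return frozenset((x, color) for x in domain)

def disjoint_sum_domain(dom_a, dom_b):
    """Domain of the disjoint sum: orange copy of dom_a, yellow copy of dom_b."""
    return tag(dom_a, "orange") | tag(dom_b, "yellow")

one = frozenset({"a"})  # assumed domain of the one-point order

left = disjoint_sum_domain(disjoint_sum_domain(one, one), one)   # (1 ⊔ 1) ⊔ 1
right = disjoint_sum_domain(one, disjoint_sum_domain(one, one))  # 1 ⊔ (1 ⊔ 1)

print(sorted(left, key=repr))
print(sorted(right, key=repr))
print(left == right)  # the domains differ as sets, though both have three points
```

Both sets have three elements, so nothing is lost; the only difference is how the color tags are nested.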

But not to worry: we are structuralists and care about our orders here only up to isomorphism. Indeed, the two resulting orders are isomorphic as orders, and more generally, $(A\sqcup B)\sqcup C$ is isomorphic to $A\sqcup(B\sqcup C)$ for any orders $A$, $B$, and $C$, and similarly with $(A+B)+C$ and $A+(B+C)$, as discussed with the theorem below. Furthermore, the order isomorphism relation is a congruence with respect to the arithmetic we have defined, which means that $A\sqcup B$ is isomorphic to $A'\sqcup B'$ whenever $A$ and $B$ are respectively isomorphic to $A'$ and $B'$, and similarly with $A+B$ and $A\otimes B$. Consequently, we can view these operations as associative, if we simply view them not as operations on the order structures themselves, but on their order-types, that is, on their isomorphism classes. This simple abstract switch in perspective restores the desired associativity. In light of this, we are free to omit the parentheses and write $A\sqcup B\sqcup C$ and $A+B+C$, if we care about our orders only up to isomorphism. Let us therefore adopt this structuralist perspective for the rest of our treatment of the algebra of orders.

Let us give a more precise formal definition of $A\otimes B$, which requires no disjointification. Specifically, the domain is the set of pairs $\{(a,b)\mid a\in A,\ b\in B\}$, and the order is defined by $(a,b)\leq(a',b')$ if and only if $b<_B b'$, or $b=b'$ and $a\leq_A a'$. This order is known as the reverse lexical order, since we are ordering the nodes in the dictionary manner, except starting from the right letter first rather than the left as in an ordinary dictionary. One could of course have defined the product using the lexical order instead of the reverse lexical order, and this would give $A\otimes B$ the meaning of “$A$ copies of $B$.” This would be a fine alternative and in my experience mathematicians who rediscover the ordered product on their own often tend to use the lexical order, which is natural in some respects. Nevertheless, there is a huge literature with more than a century of established usage with the reverse lexical order, from the time of Cantor, who defined ordinal multiplication $\alpha\beta$ as $\beta$ copies of $\alpha$. For this reason, it seems best to stick with the reverse lexical order and the accompanying idea that $A\otimes B$ means “$B$ copies of $A$.” Note also that with the reverse lexical order, we shall be able to prove left distributivity $A\otimes(B+C)=(A\otimes B)+(A\otimes C)$, whereas with the lexical order, one will instead have right distributivity $(B+C)\otimes A=(B\otimes A)+(C\otimes A)$.
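The reverse lexical definition translates almost word for word into code. The sketch below assumes each order is given as a list of points together with a function deciding $\leq$; the name `ordered_product` and the two sample orders are hypothetical choices made for illustration.

```python
def ordered_product(dom_a, leq_a, dom_b, leq_b):
    """Reverse lexical product: pairs (a, b), compared on b first, then on a."""
    domain = [(a, b) for b in dom_b for a in dom_a]

    def leq(p, q):
        (a, b), (a2, b2) = p, q
        if b != b2:
            # Different copies of A: follow the B relation (strictly, since b != b2).
            return leq_b(b, b2)
        # Same copy of A: follow the A relation.
        return leq_a(a, a2)

    return domain, leq

# Illustrative assumption: A has 0 below the incomparable points 1 and 2,
# and B is the two-point chain 0 < 1.
dom_a, leq_a = [0, 1, 2], (lambda x, y: x == y or x == 0)
dom_b, leq_b = [0, 1], (lambda x, y: x <= y)

domain, leq = ordered_product(dom_a, leq_a, dom_b, leq_b)

print(len(domain))            # B many copies of A: 2 * 3 points
print(leq((1, 0), (2, 1)))    # lower copy is below upper copy
print(leq((1, 0), (2, 0)))    # incomparable inside one copy of A
```

Swapping the roles of the two coordinates in `leq` would give the lexical order instead, that is, the reading of the product as "$A$ copies of $B$".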

Let us begin to prove some basic facts about the algebra of orders.

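Theorem. The following identities hold, up to isomorphism, for orders $A$, $B$, and $C$.

- Associativity of disjoint sum, ordered sum, and ordered product: $(A\sqcup B)\sqcup C\cong A\sqcup(B\sqcup C)$, $(A+B)+C\cong A+(B+C)$, and $(A\otimes B)\otimes C\cong A\otimes(B\otimes C)$.

- Left distributivity of product over disjoint sum and ordered sum: $A\otimes(B\sqcup C)\cong(A\otimes B)\sqcup(A\otimes C)$ and $A\otimes(B+C)\cong(A\otimes B)+(A\otimes C)$.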

In each case, these identities are clear from the informal intended meaning of the orders. For example, $A+(B+C)$ is the order resulting from having a copy of $A$, and above it a copy of $B+C$, which is a copy of $B$ and a copy of $C$ above it. So one has altogether a copy of $A$, with a copy of $B$ above that and a copy of $C$ on top. And this is the same as $(A+B)+C$, so they are isomorphic.

One can also aspire to give a detailed formal proof verifying that our color-coded disjointifying process works as desired, and the reader is encouraged to do so as an exercise. To my way of thinking, however, such a proof offers little in the way of mathematical insight into the algebra of orders. Rather, it is about checking the fine print of our disjointifying process and making sure that things work as we expect. Several of the arguments can be described as parenthesis-rearranging arguments: one extracts the desired information from the structure of the domain order and puts that exact same information into the correct form for the target order.

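For example, if we have used the color-scheme disjointifying process described above, then the elements of $A\sqcup(B\sqcup C)$ each have one of the following forms, where $a\in A$, $b\in B$, and $c\in C$:

$$(a,\text{orange}),\qquad ((b,\text{orange}),\text{yellow}),\qquad ((c,\text{yellow}),\text{yellow}).$$

We can define the color-and-parenthesis-rearranging function $\pi$ to put them into the right form for $(A\sqcup B)\sqcup C$ as follows:

$$\begin{aligned}
\pi:\ (a,\text{orange}) &\mapsto ((a,\text{orange}),\text{orange})\\
((b,\text{orange}),\text{yellow}) &\mapsto ((b,\text{yellow}),\text{orange})\\
((c,\text{yellow}),\text{yellow}) &\mapsto (c,\text{yellow})
\end{aligned}$$

In each case, we will preserve the order, and since the orders are side-by-side, the cases never interact in the order, and so this is an isomorphism.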

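Similarly, for distributivity, the elements of $A\otimes(B\sqcup C)$ have the two forms:

$$(a,(b,\text{orange})),\qquad (a,(c,\text{yellow})),$$

where $a\in A$, $b\in B$, and $c\in C$. Again we can define the desired isomorphism $\tau$ by putting these into the right form for $(A\otimes B)\sqcup(A\otimes C)$ as follows:

$$\begin{aligned}
\tau:\ (a,(b,\text{orange})) &\mapsto ((a,b),\text{orange})\\
(a,(c,\text{yellow})) &\mapsto ((a,c),\text{yellow})
\end{aligned}$$

And again, this is an isomorphism, as desired.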
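For small finite orders, associativity and left distributivity up to isomorphism can also be confirmed by brute force. The following is a rough sketch, not a proof: it assumes orders are stored as (list of points, set of pairs $(x,y)$ with $x\leq y$), uses hypothetical helper names, and searches all bijections, so it is only practical for a handful of points. The three sample orders are arbitrary illustrations.

```python
from itertools import permutations

def order(points, leq):
    pts = list(points)
    return pts, {(x, y) for x in pts for y in pts if leq(x, y)}

def tag(o, color):
    pts, rel = o
    return [(x, color) for x in pts], {((x, color), (y, color)) for x, y in rel}

def dsum(A, B):
    """Disjoint sum via orange/yellow tagging."""
    (pa, ra), (pb, rb) = tag(A, "orange"), tag(B, "yellow")
    return pa + pb, ra | rb

def osum(A, B):
    """Ordered sum: the orange copy lies entirely below the yellow copy."""
    (pa, ra), (pb, rb) = tag(A, "orange"), tag(B, "yellow")
    return pa + pb, ra | rb | {(x, y) for x in pa for y in pb}

def prod(A, B):
    """Ordered product with the reverse lexical order (B many copies of A)."""
    (pa, ra), (pb, rb) = A, B
    pts = [(a, b) for b in pb for a in pa]
    rel = {((a, b), (a2, b2)) for (a, b) in pts for (a2, b2) in pts
           if (b != b2 and (b, b2) in rb) or (b == b2 and (a, a2) in ra)}
    return pts, rel

def isomorphic(X, Y):
    """Search for a bijection carrying the relation of X onto that of Y."""
    (px, rx), (py, ry) = X, Y
    if len(px) != len(py) or len(rx) != len(ry):
        return False
    for perm in permutations(py):
        f = dict(zip(px, perm))
        # With equally many pairs, mapping rx into ry means mapping onto ry.
        if all((f[x], f[y]) in ry for x, y in rx):
            return True
    return False

# Arbitrary small examples: a two-point antichain, a two-point chain, one point.
A = order([0, 1], lambda x, y: x == y)
B = order([0, 1], lambda x, y: x <= y)
C = order([0], lambda x, y: True)

print(isomorphic(osum(osum(A, B), C), osum(A, osum(B, C))))
print(isomorphic(prod(A, osum(B, C)), osum(prod(A, B), prod(A, C))))
print(isomorphic(prod(A, dsum(B, C)), dsum(prod(A, B), prod(A, C))))
```

Note that the two sides of each comparison have different underlying sets, since the tags nest differently; the check passes only because it compares them up to isomorphism.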

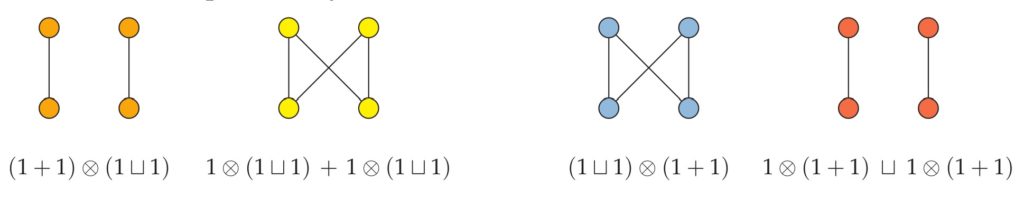

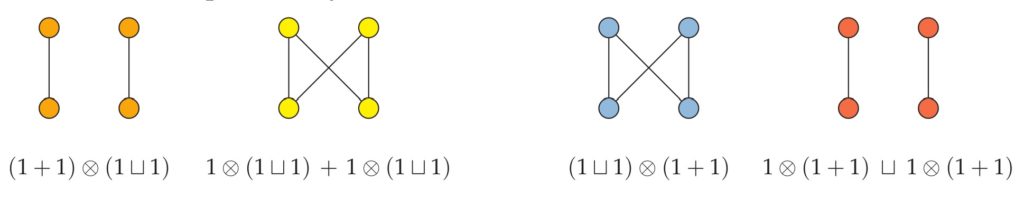

Since order multiplication is not commutative, it is natural to inquire about the right-sided distributivity laws:

$$(B\sqcup C)\otimes A \overset{?}{=} (B\otimes A)\sqcup(C\otimes A)$$

$$(B+C)\otimes A \overset{?}{=} (B\otimes A)+(C\otimes A)$$

Unfortunately, however, these do not hold in general, and the following instances are counterexamples. Can you see what to take as $A$, $B$, and $C$? Please answer in the comments.

Theorem.

- (1) If $A$ and $B$ are linear orders, then so are $A+B$ and $A\otimes B$.

- (2) If $A$ and $B$ are nontrivial linear orders and both are endless, then $A+B$ is endless; if at least one of them is endless, then $A\otimes B$ is endless.

- (3) If $A$ is an endless dense linear order and $B$ is linear, then $A\otimes B$ is an endless dense linear order.

- (4) If $A$ is an endless discrete linear order and $B$ is linear, then $A\otimes B$ is an endless discrete linear order.

Proof. If both $A$ and $B$ are linear orders, then it is clear that $A+B$ is linear. Any two points within the $A$ copy are comparable, and any two points within the $B$ copy, and every point in the $A$ copy is below any point in the $B$ copy. So any two points are comparable and thus we have a linear order. With the product $A\otimes B$, we have $B$ many copies of $A$, and this is linear since any two points within one copy of $A$ are comparable, and otherwise they come from different copies, which are then comparable since $B$ is linear. So $A\otimes B$ is linear.

For statement (2), we know from (1) that $A+B$ and $A\otimes B$ are linear orders, and they are nontrivial because $A$ and $B$ are. If both $A$ and $B$ are endless, then clearly $A+B$ is endless, since every node in $A$ has something below it and every node in $B$ has something above it. For the product $A\otimes B$, if $A$ is endless, then every node in any copy of $A$ has nodes above and below it, and so this will be true in $A\otimes B$; and if $B$ is endless, then there will always be higher and lower copies of $A$ to consider, so again $A\otimes B$ is endless, as desired.

For statement (3), assume that $A$ is an endless dense linear order and that $B$ is linear. We know from (1) that $A\otimes B$ is a linear order. Suppose that $x<y$ in this order. If $x$ and $y$ live in the same copy of $A$, then there is a node $z$ between them, because $A$ is dense. If $x$ occurs in one copy of $A$ and $y$ in another, then because $A$ is endless, there will be a node $z$ above $x$ in its same copy, leading to $x<z<y$ as desired. (Note: we don’t need $B$ to be dense.)

For statement (4), assume instead that $A$ is an endless discrete linear order and $B$ is linear. We know that $A\otimes B$ is a linear order. Every node of $A\otimes B$ lives in a copy of $A$, where it has an immediate successor and an immediate predecessor, and these are also immediate successor and predecessor in $A\otimes B$. From this, it follows also that $A\otimes B$ is endless, and so it is an endless discrete linear order.

The reader is encouraged to consider as an exercise whether one can drop the “endless” hypotheses in the theorem. Please answer in the comments.

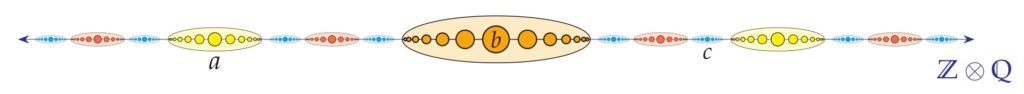

Theorem. The endless discrete linear orders are exactly those of the form $\mathbb{Z}\otimes L$ for some linear order $L$.

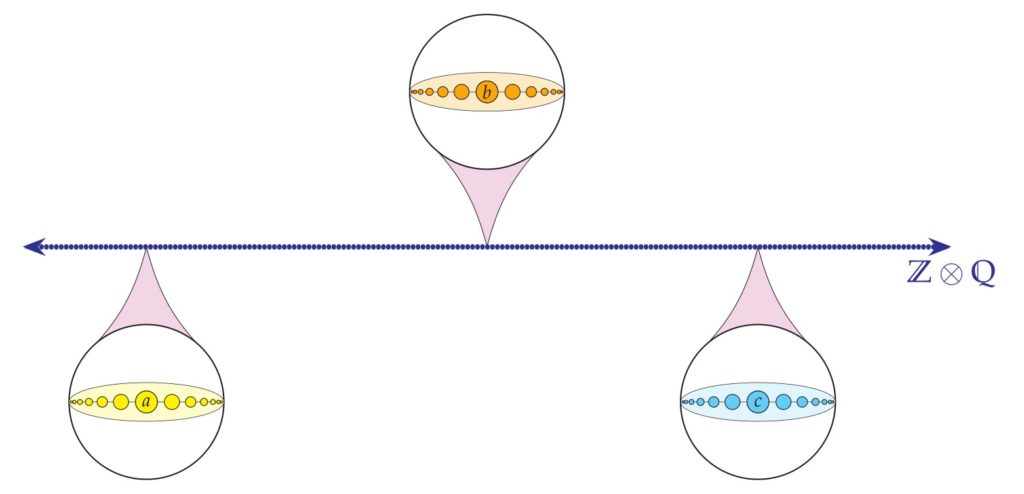

Proof. If $L$ is a linear order, then $\mathbb{Z}\otimes L$ is an endless discrete linear order by the theorem above, statement (4). So any order of this form has the desired feature. Conversely, suppose that $D$ is an endless discrete linear order. Define an equivalence relation for points in this order by which $x\sim y$ if and only if $x$ and $y$ are at finite distance, in the sense that there are only finitely many points between them. This relation is easily seen to be reflexive, transitive and symmetric, and so it is an equivalence relation. Since $D$ is an endless discrete linear order, every object in the order has an immediate successor and immediate predecessor, which remain $\sim$-equivalent, and from this it follows that the equivalence classes are each ordered like the integers $\mathbb{Z}$, as indicated by the figure here.

The equivalence classes amount to a partition of $D$ into disjoint segments of order type $\mathbb{Z}$, as in the various colored sections of the figure. Let $L$ be the induced order on the equivalence classes. That is, the domain of $L$ consists of the equivalence classes $[x]$, which are each a chain in the original order, and for distinct classes we say $[x]<[y]$ just in case $x<y$. This is a linear order on the equivalence classes. And since $D$ is $L$ copies of its equivalence classes, each of which is ordered like $\mathbb{Z}$, it follows that $D$ is isomorphic to $\mathbb{Z}\otimes L$, as desired.

(Interested readers are advised that the argument above uses the axiom of choice, since in order to assemble the isomorphism of $D$ with $\mathbb{Z}\otimes L$, we need in effect to choose a center point for each equivalence class.)

If we consider the integers $\mathbb{Z}$ inside the rational order $\mathbb{Q}$, it is clear that we can have a discrete suborder of a dense linear order. How about a dense suborder of a discrete linear order?

Question. Is there a discrete linear order with a suborder that is a dense linear order?

What? How could that happen? In my experience, mathematicians first coming to this topic often respond instinctively that this should be impossible. I have seen sophisticated mathematicians make such a pronouncement when I asked the audience about it in a public lecture. The fundamental nature of a discrete order, after all, is completely at odds with density, since in a discrete order, there is a next point up and down, and a next next point, and so on, and this is incompatible with density.

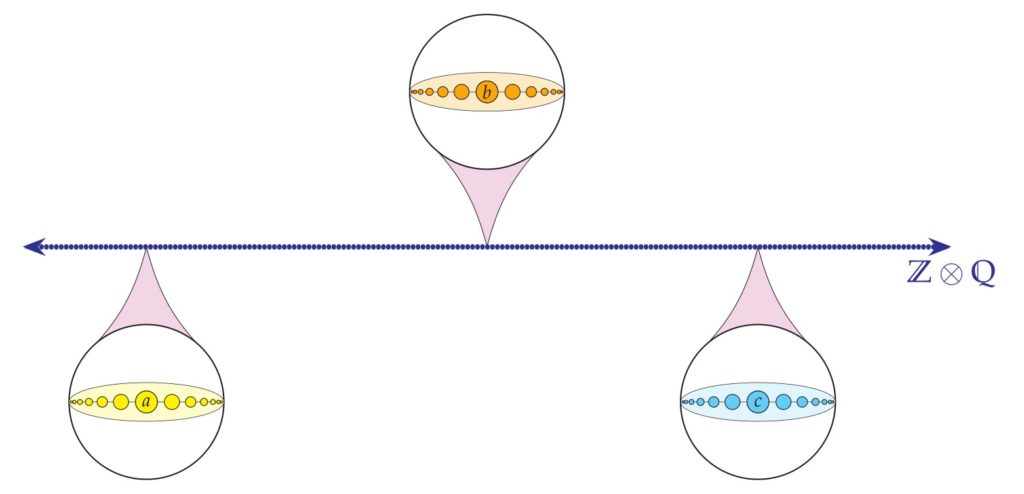

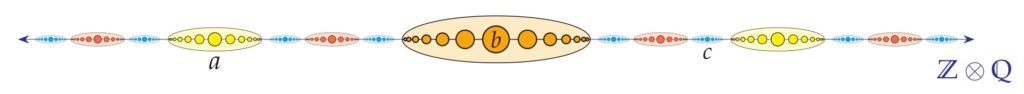

Yet, surprisingly, the answer is Yes! It is possible: there is a discrete order with a suborder that is densely ordered. Consider the extremely interesting order $\mathbb{Z}\otimes\mathbb{Q}$, which consists of $\mathbb{Q}$ many copies of $\mathbb{Z}$, laid out here increasing from left to right. Each tiny blue dot is a rational number, which has been replaced with an entire copy of the integers, as you can see in the magnified images.

The order $\mathbb{Z}\otimes\mathbb{Q}$ is quite subtle, and so let me also provide an alternative presentation of it. We have many copies of $\mathbb{Z}$, and those copies are densely ordered like $\mathbb{Q}$, so that between any two copies of $\mathbb{Z}$ is another one, like this:

Perhaps it helps to imagine that the copies of $\mathbb{Z}$ are getting smaller and smaller as you squeeze them in between the larger copies. But you can indeed always fit another copy of $\mathbb{Z}$ between, while leaving room for the further even tinier copies of $\mathbb{Z}$ to come.

The order $\mathbb{Z}\otimes\mathbb{Q}$ is discrete, in light of the theorem characterizing discrete linear orders. But also, this is clear, since every point of $\mathbb{Z}\otimes\mathbb{Q}$ lives in its local copy of $\mathbb{Z}$, and so has an immediate successor and predecessor there. Meanwhile, if we select exactly one point from each copy of $\mathbb{Z}$, the $0$ of each copy, say, then these points are ordered like $\mathbb{Q}$, which is dense. Thus, we have proved:
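Both halves of this argument can be played with concretely. In the sketch below, a point of the product is assumed to be a pair `(z, q)` with `z` an integer and `q` a `Fraction`, compared in the reverse lexical order (rational coordinate first); the function names are hypothetical, and the code only illustrates the idea on sample points rather than proving anything.

```python
from fractions import Fraction

def less(p, r):
    """Strict reverse lexical order on pairs (z, q): compare q first, then z."""
    (z1, q1), (z2, q2) = p, r
    return (q1, z1) < (q2, z2)

def successor(p):
    """Immediate successor: the next integer inside the same copy of Z."""
    z, q = p
    return (z + 1, q)

def predecessor(p):
    """Immediate predecessor: the previous integer inside the same copy of Z."""
    z, q = p
    return (z - 1, q)

def center_between(p, r):
    """A zero-point lying strictly between the zero-points of two different copies."""
    return (0, (p[1] + r[1]) / 2)

x = (5, Fraction(1, 2))
print(less(predecessor(x), x), less(x, successor(x)))

# The suborder of zero-points {(0, q)} is ordered like the rationals.
c1, c2 = (0, Fraction(0)), (0, Fraction(1))
m = center_between(c1, c2)
print(m, less(c1, m), less(m, c2))

# Any point of a strictly lower copy is below any point of a higher copy.
print(less((10**6, Fraction(0)), (-10**6, Fraction(1, 1000))))
```

Nothing lies strictly between `x` and `successor(x)`, because such a point would need the same rational coordinate and an integer strictly between `z` and `z + 1`.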

Theorem. The order $\mathbb{Z}\otimes\mathbb{Q}$ is a discrete linear order having a dense linear order as a suborder.

One might be curious now about the order $\mathbb{Q}\otimes\mathbb{Z}$, which is $\mathbb{Z}$ many copies of $\mathbb{Q}$. This order, however, is a countable endless dense linear order, and therefore is isomorphic to $\mathbb{Q}$ itself.

This material is adapted from my book-in-progress, Topics in Logic, drawn from Chapter 3 on Relational Logic, which includes an extensive section on order theory, of which this is an important summative part.

This will be a short lecture series given at the conclusion of the graduate logic class in the
