In my logic course this semester, as a part of the section on the logic of games, we considered the pirate treasure division problem.

Imagine a pirate ship with a crew of fearsome, perfectly logical pirates and a treasure of 100 gold coins to be divided amongst them. How shall they do it? They have long agreed upon the pirate treasure division procedure: The pirates are linearly ordered by rank, with the Captain, the first Lieutenant, the second Lieutenant and so on down the line; but let us simply refer to them as Pirate 1, Pirate 2, Pirate 3 and so on. Pirate 9 is swabbing the decks in preparation. For the division procedure, all the pirates assemble on deck, and the lowest-ranking pirate mounts the plank. Facing the other pirates, she proposes a particular division of the gold — so-and-so many gold pieces to the captain, so-and-so many pieces to Pirate 2 and so on. The pirates then vote on the plan, including the pirate on the plank, and if a strict majority of the pirates approve of the plan, then it is adopted and that is how the gold is divided. But if the pirate’s plan is not approved by a pirate majority, then regretfully she must walk the plank into the sea (and her death) and the procedure continues with the next-lowest ranking pirate, who of course is now the lowest-ranking pirate.

Suppose that you are Pirate 10: what plan do you propose? Would you think it is a good idea to propose that you get to keep 94 gold pieces for yourself, with the six remaining given to a few of the other pirates? In fact, you can propose just such a thing, and if you do it correctly, your plan will pass!

Before explaining why, let me tell you a little more about the pirates. I mentioned that the pirates are perfectly logical, and not only that, they have the common knowledge that they are all perfectly logical. In particular, in their reasoning they can rely on the fact that the other pirates are logical, and that the other pirates know that they are all logical and that they know that, and so on.

Furthermore, it is common knowledge amongst the pirates that they all share the same pirate value system, with the following strictly ordered list of priorities:

Pirate value system:

1. Stay alive.

2. Get gold.

3. Cause the death of other pirates.

4. Arrange that others’ gold goes to the most senior pirates.

That is, at all costs, each pirate would prefer to avoid death, and if alive, to get as much gold as possible. Having achieved that, she would prefer that as many other pirates die as possible (but not so much as to give up even one gold coin for additional deaths). All other things being equal, she would prefer that whatever gold she does not get herself goes as much as possible to the most senior pirates, for the pirates are, in their hearts, conservative people.

So, what plan should you propose as Pirate 10? Well, naturally, the pirates will consider Pirate 10’s plan in light of the alternative, which will be the plan proposed by Pirate 9, which will be compared with the plan of Pirate 8 and so on. Thus, it seems we should propagate our analysis from the bottom, working backwards from what happens with a very small number of pirates.

One pirate. If there is only one pirate, the captain, then she mounts the plank, and clearly she should propose “Pirate 1 gets all the gold”, and she should vote in favor of this plan, and so Pirate 1 gets all the gold, as anyone would have expected.

Two pirates. If there are exactly two pirates, then Pirate 2 will mount the plank, and what will she propose? She needs a majority of the two pirates, which means she must get the captain to vote for her plan. But no matter what plan she proposes, even if it is that all the gold should go to the captain, the captain will vote against the plan, since if Pirate 2 is killed, then the captain will get all the gold anyway, and because of pirate value 3, she would prefer that Pirate 2 is killed off. So Pirate 2’s plan will not be approved by the captain, and so unfortunately, Pirate 2 will walk the plank.

Three pirates. If there are three pirates, then what will Pirate 3 propose? Well, she needs only two votes, and one of them will be her own. So she must convince either Pirate 1 or Pirate 2 to vote for her plan. But actually, Pirate 2 will have a strong incentive to vote for the plan regardless, since otherwise Pirate 2 will be in the situation of the two-pirate case, which ended with Pirate 2’s death. So Pirate 3 can count on Pirate 2’s vote regardless, and so Pirate 3 will propose: Pirate 3 gets all the gold! This will be approved by both Pirate 2 and Pirate 3, a majority, and so with three pirates, Pirate 3 gets all the gold.

Four pirates. Pirate 4 needs to have three votes, so she needs to get two of the others to vote for her plan. She notices that if she is to die, then Pirates 1 and 2 will get no gold, and so she realizes that if she offers them each one gold coin, they will prefer that, because of the pirate value system. So Pirate 4 will propose to give one gold coin each to Pirates 1 and 2, and 98 gold coins to herself. This plan will pass with the votes of 1, 2 and 4.

Five pirates. Pirate 5 needs three votes, including her own. She can effectively buy the vote of Pirate 3 with one gold coin, since Pirate 3 will otherwise get nothing in the case of four pirates. And she needs one additional vote, that of Pirate 1 or 2, which she can get by offering two gold coins. Because of pirate value 4, she would prefer that the coins go to the highest ranking pirate, so she offers the plan: two coins to Pirate 1, nothing to Pirate 2, one coin to Pirate 3, nothing to Pirate 4 and 97 coins to herself. This plan will pass with the votes of 1, 3 and 5.

Six pirates. Pirate 6 needs four votes, and she can buy the votes of Pirates 2 and 4 with one gold coin each, and then two gold coins to Pirate 3, which is cheaper than the alternatives. So she proposes: one coin each to 2 and 4, two coins to 3 and 96 coins for herself, and this passes with the votes of 2, 3, 4 and 6.

Seven pirates. Pirate 7 needs four votes, and she can buy the votes of Pirates 1 and 5 with only one coin each, since they get nothing in the six-pirate case. By offering two coins to Pirate 2, she can also get another vote (and in light of the pirate values she prefers to give the extra gold to Pirate 2 rather than to a lower-ranking pirate). So she proposes: one coin each to 1 and 5, two coins to 2 and 96 coins for herself, and this passes with the votes of 1, 2, 5 and 7.

Eight pirates. Pirate 8 needs five votes, and she can buy the votes of Pirates 3, 4 and 6 with one coin each, and ensure another vote by giving two coins to Pirate 1, keeping the other 95 coins for herself. With her own vote, this plan will pass.

Nine pirates. Pirate 9 needs five votes, and she can buy the votes of Pirates 2, 5 and 7 with one coin each. With two coins to Pirate 3 and her own vote, the plan will pass, leaving her 95 coins.

Ten pirates. In light of the division offered by Pirate 9, we can now see that Pirate 10 can ensure six votes by proposing to give one coin each to Pirates 1, 4, 6 and 8, two coins to Pirate 2, and the remaining 94 coins for herself. This plan will pass with those pirates voting in favor (and herself), because they each get more gold this way than they would under the plan of Pirate 9.

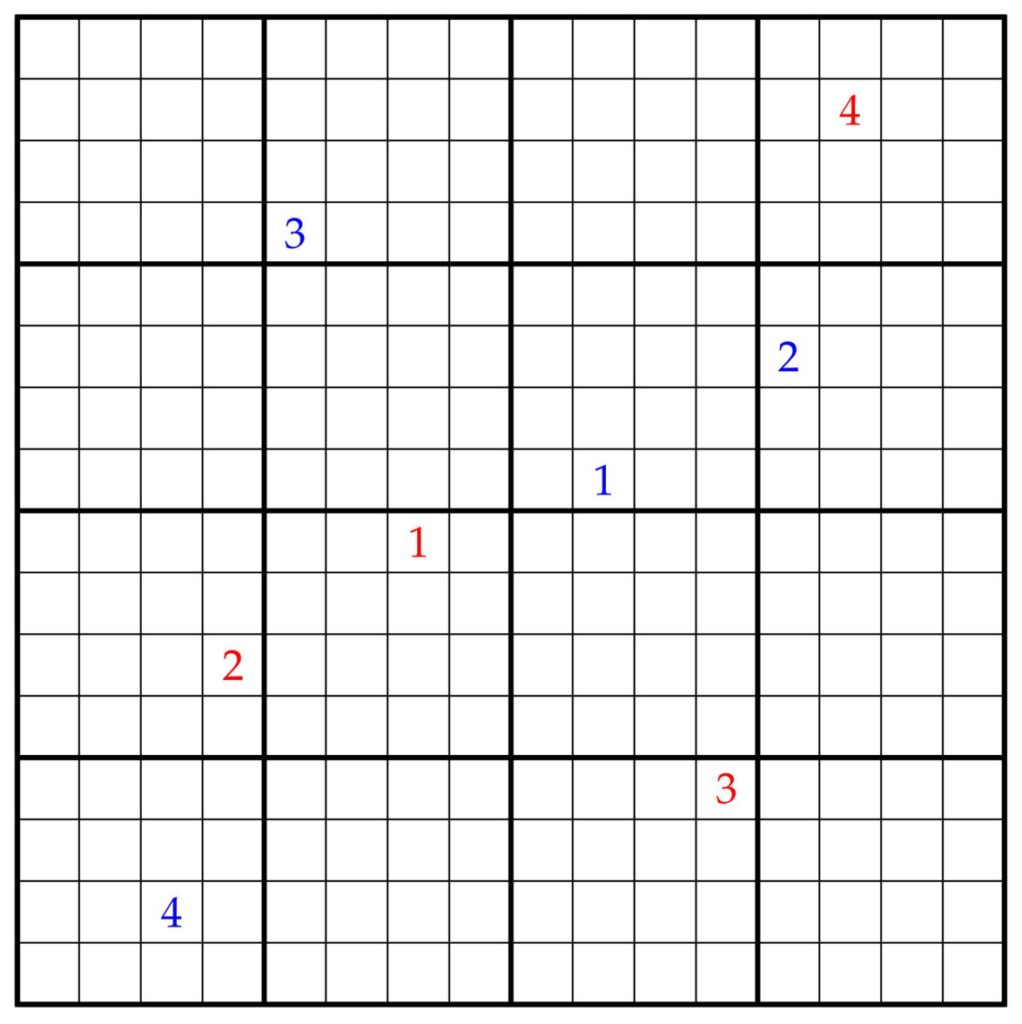

We can summarize the various proposals in a table, where row $n$ corresponds to the proposal of Pirate $n$.

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| One pirate | 100 | | | | | | | | | |
| Two pirates | * | X | | | | | | | | |
| Three pirates | 0 | 0 | 100 | | | | | | | |
| Four pirates | 1 | 1 | 0 | 98 | | | | | | |
| Five pirates | 2 | 0 | 1 | 0 | 97 | | | | | |
| Six pirates | 0 | 1 | 2 | 1 | 0 | 96 | | | | |
| Seven pirates | 1 | 2 | 0 | 0 | 1 | 0 | 96 | | | |
| Eight pirates | 2 | 0 | 1 | 1 | 0 | 1 | 0 | 95 | | |
| Nine pirates | 0 | 1 | 2 | 0 | 1 | 0 | 1 | 0 | 95 | |
| Ten pirates | 1 | 2 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 94 |

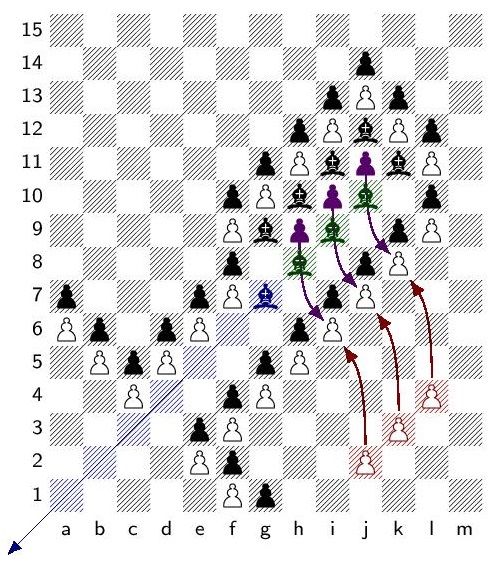

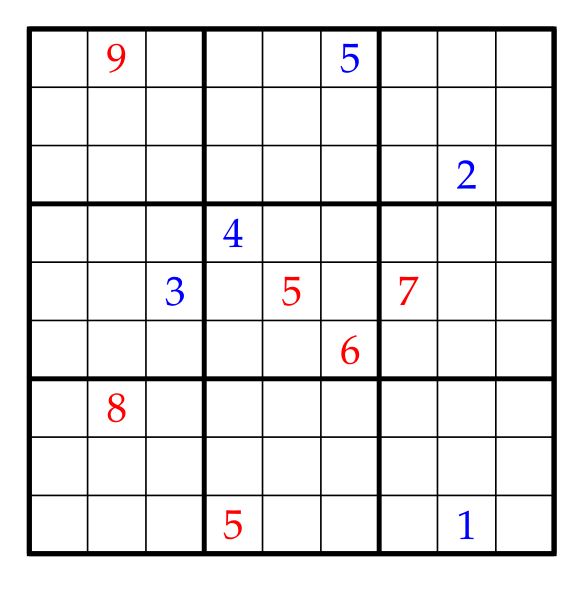

There are a few things to notice, which we can use to deduce how the pattern will continue. Notice that in each row beyond the third row, the number of pirates that get no coins is almost half (the largest integer strictly less than half), exactly one pirate gets two coins, and the remainder get one coin, except for the proposer herself, who gets all the rest. This pattern is sustainable for as long as there is enough gold to implement it, because each proposer can buy, for one coin each, the votes of the pirates getting zero under the alternative plan with one fewer pirate, and the number of these will be at most one less than half of the previous number; then, she can buy one more vote by giving two coins to one of the pirates who got only one coin in the alternative plan; and with her own vote this will be half plus one, which is a majority. We can furthermore observe that by the pirate value system, the two coins will always go to either Pirate 1, 2 or 3, since one of these will always be the top-ranked pirate having one coin on the previous round. They each cycle with the pattern of zero coins, one coin, two coins in the various proposals. At least until the gold becomes limited, all the other pirates from Pirate 4 onwards will alternate between zero coins and one coin with each subsequent proposal, and Pirate $n$ will always get zero from Pirate $n+1$.

For this reason, we can see that the pattern continues upward until at least Pirate 199, whose proposal will follow the pattern:

199 pirates: 1 2 0 0 1 0 1 0 1 0 1 0 1 … 1 0 1 0 0

It is with Pirate 199, specifically, that for the first time it takes all one hundred coins to buy the other votes, since she must give ninety-eight pirates one coin each, and two coins to Pirate 2, in order to have one hundred votes altogether, including her own, leaving no coins for herself.
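The count behind this can be written out explicitly. As a sketch, write $n$ for the number of pirates (a symbol introduced here for illustration) and assume $4 \le n \le 199$, so that the proposer buys $\lfloor n/2 \rfloor - 1$ votes at one coin each and one further vote at two coins:

$$
\text{coins spent} = \left(\left\lfloor \tfrac{n}{2} \right\rfloor - 1\right) + 2 = \left\lfloor \tfrac{n}{2} \right\rfloor + 1,
\qquad
\text{coins kept} = 100 - \left(\left\lfloor \tfrac{n}{2} \right\rfloor + 1\right) = 99 - \left\lfloor \tfrac{n}{2} \right\rfloor .
$$

For $n = 10$ this gives $99 - 5 = 94$, matching Pirate 10's proposal, and for $n = 199$ it gives $99 - 99 = 0$. The votes in favor number $\lfloor n/2 \rfloor + 1$ (the purchased votes plus her own), which is exactly a strict majority.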

For this reason, Pirate 200 will have a successful proposal, since she no longer needs to spend two coins for one vote, as the proposal of Pirate 199 has one hundred pirates getting zero. So Pirate 200 can get 100 votes by proposing one coin to everyone who would get zero from 199, plus her own vote, for a majority of 101 votes.

200 pirates: 0 0 1 1 0 1 0 1 0 1 0 … 0 1 0 1 1 0

Pirate 201 also needs 101 votes, which she can get by giving all the zeros of the 200 case one coin each, plus her own vote. The unfortunate Pirate 202, however, needs 102 votes, and this will not be possible, since she has only 100 coins, and so Pirate 202 will die. The interesting thing to notice next is that Pirate 203 will therefore be able to count on the vote of Pirate 202 without paying any gold for it, and so since she needs only 100 additional votes (after her own vote and Pirate 202’s vote), she will be able to buy 100 votes for one coin each. Pirate 204 will again fall short, since her own vote and 100 purchased votes make only 101 of the 103 votes she needs, and so she will die. Although Pirate 205 will be able to count on that one additional free vote, this will be insufficient to gain a passing proposal, because she will be able to buy one hundred votes with the coins, plus her own vote and the free vote of Pirate 204, making 102 votes altogether, which is not a majority. Similarly, Pirate 206 will fall short, because even with her vote and the free votes of 204 and 205, she will be able to get at most 103 votes, which is not a majority. Thus, Pirate 207 will be able to count on the votes of Pirates 204, 205 and 206, which together with her own vote and 100 more votes, gotten by giving one coin each to pirates who would otherwise get nothing, make 104 votes, which is a majority.

The reader is encouraged to investigate further to see how the pattern continues. It is a fun problem to work out! What emerges is the phenomenon by which longer and longer sequences of pirates in a row find themselves unable to make a winning proposal, and then suddenly a pirate is able to survive by counting on their votes.
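For readers who want to check their answers, the vote counting can be sketched as a small simulation. This is a simplified count rather than a full solution of the game: the function name `survivors_beyond` is invented here, and it assumes that every proposer past Pirate 201 can always find 100 living pirates whose votes cost one coin each, as in the cases discussed above.

```python
def survivors_beyond(start=201, limit=300, coins=100):
    """Crew sizes n in (start, limit] at which the proposer, Pirate n, survives.

    Assumption: `coins` votes can always be bought at one coin each,
    and Pirate `start` is the most recent proposer known to survive.
    """
    last_survivor = start
    survivors = []
    for n in range(start + 1, limit + 1):
        needed = n // 2 + 1  # strict majority
        # Pirates ranked between the last survivor and n die if n's plan
        # fails, so they vote yes for free.
        free_votes = n - 1 - last_survivor
        if 1 + free_votes + coins >= needed:  # own vote + free + purchased
            survivors.append(n)
            last_survivor = n
    return survivors


print(survivors_beyond())  # [203, 207, 215, 231, 263]
```

Under these assumptions the gaps between successive survivors double each time.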

It is very interesting also to work out what happens when there is a very small number of coins. For example, if there is only one gold coin, then already Pirate 4 is unable to make a passing proposal, since she can buy only one other vote, and with her own this will make only two votes, falling short of a majority. With only one coin, Pirate 5 will survive by buying a vote from Pirate 1 and counting on the vote of Pirate 4 and her own vote, for a majority.

Even the case of zero coins is interesting to think about! In this case, there is no gold to distribute, and so the voting is really just about whether the pirate should walk the plank or not. If there is only one pirate, she will live. Pirate 2 will die, since Pirate 1 will vote against. But for that reason, Pirate 2 will vote in favor of Pirate 3, who will live. The pattern that emerges is:

lives, dies, lives, dies, dies, dies, lives, dies, dies, dies, dies, dies, dies, dies, lives, ….

After each successful proposal, where the pirate lives, there must be many deaths in a row among the subsequently larger crews before a proposer can again count on enough votes. So after each “lives” in the pattern, at a crew of $m$ pirates, there must be $m$ “dies” in a row, roughly doubling the length of the pattern, before there are enough votes to support the next pirate who lives, at a crew of $2m+1$.
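The zero-coin pattern is easy to reproduce with a short simulation. In this sketch (the function name `zero_coin_fates` is made up for illustration), a proposer can count only on her own vote and on the votes of the pirates who would die if she did.

```python
def zero_coin_fates(num_pirates):
    """Fate of the proposer for each crew size 1..num_pirates, with no gold."""
    fates = []
    last_survivor = 0  # largest crew size so far whose proposer lives
    for n in range(1, num_pirates + 1):
        # Her own vote, plus the pirates ranked between the last survivor
        # and herself, who are doomed if her proposal fails.
        votes = 1 + (n - 1 - last_survivor)
        if votes >= n // 2 + 1:  # strict majority
            fates.append("lives")
            last_survivor = n
        else:
            fates.append("dies")
    return fates


fates = zero_coin_fates(31)
print([n for n, fate in enumerate(fates, start=1) if fate == "lives"])
# [1, 3, 7, 15, 31]
```

The surviving proposers sit at crew sizes one less than a power of two.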

See also the Pirate Game entry on Wikipedia, which is a slightly different formulation of the puzzle, since tie-votes are effectively counted as success in that variation. For this reason, the outcomes are different in that variation. I prefer the strict-majority variation, since I find it interesting that one must sometimes use two gold coins to gain the majority, and also because the death of Pirate 2 arrives right away in an interesting way, rather than having to wait for 200 or more pirates as with the plurality version.

Another (inessential) difference in presentation is that in the other version of the puzzle, they have the captain on the plank first, and then always the highest-ranking pirate making the proposal, rather than the lowest-ranking pirate. This corresponds simply to inverting the ranking, and so it doesn’t change the results.

The puzzle appears to have been around for some time, but I am unsure of the exact provenance. Ian Stewart wrote a popular 1998 article for Scientific American analyzing the patterns that arise when the number of pirates is large in comparison with the number of gold pieces.

![By Avoini (Own work) [CC BY-SA 3.0 (http://creativecommons.org/licenses/by-sa/3.0)], via Wikimedia Commons Reef_shark_beneath_a_school_of_jack_fish 4096](http://jdh.hamkins.org/wp-content/uploads/2017/03/Reef_shark_beneath_a_school_of_jack_fish-4096-300x200.jpg)

This is joint work with Victoria Gitman. See our article,


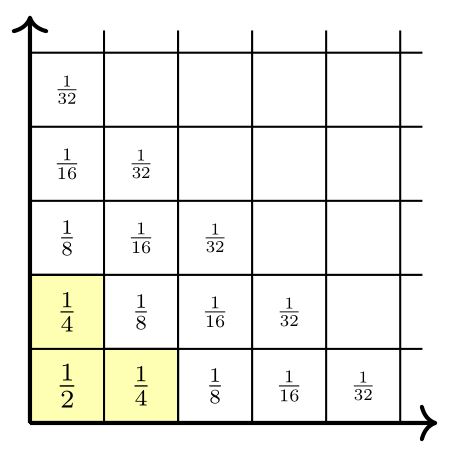

Shall we have a game of transfinite Nim? One of us sets up finitely many piles of wooden blocks, each pile having some ordinal height, possibly transfinite, and the other of us decides who shall make the first move. Taking turns, we each successively remove a top part of any one pile of our choosing, making it strictly shorter. Whoever takes the very last block wins. (It is fine to remove an entire pile on a turn or to remove blocks from a different pile on a later turn.)
