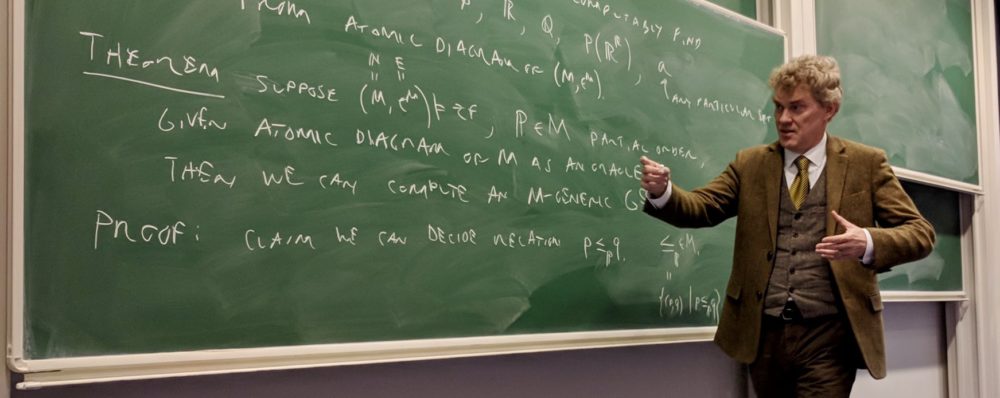

This will be a talk for the Logic Seminar at the Mathematical Institute of the University of Oxford, 29 May 2025, 5pm, Andrew Wiles Building.

Abstract. For a given computably enumerable set

Hamkins, Miller, and Williams, Forcing as a computational process.

Abstract. We investigate how set-theoretic forcing can be seen as a computational process on the models of set theory. Given an oracle for information about a model of set theory

I shall speak for the Oxford and Cambridge Club, in a joint event hosted by Maths and Science Group and the Military History Group, an evening (6 June 2019) with dinner and talks on the theme of the Enigma and Code breaking.

Abstract: I shall describe Alan Turing’s transformative philosophical analysis of the nature of computation, including his argument that some mathematical questions must inevitably remain beyond our computational capacity to answer.

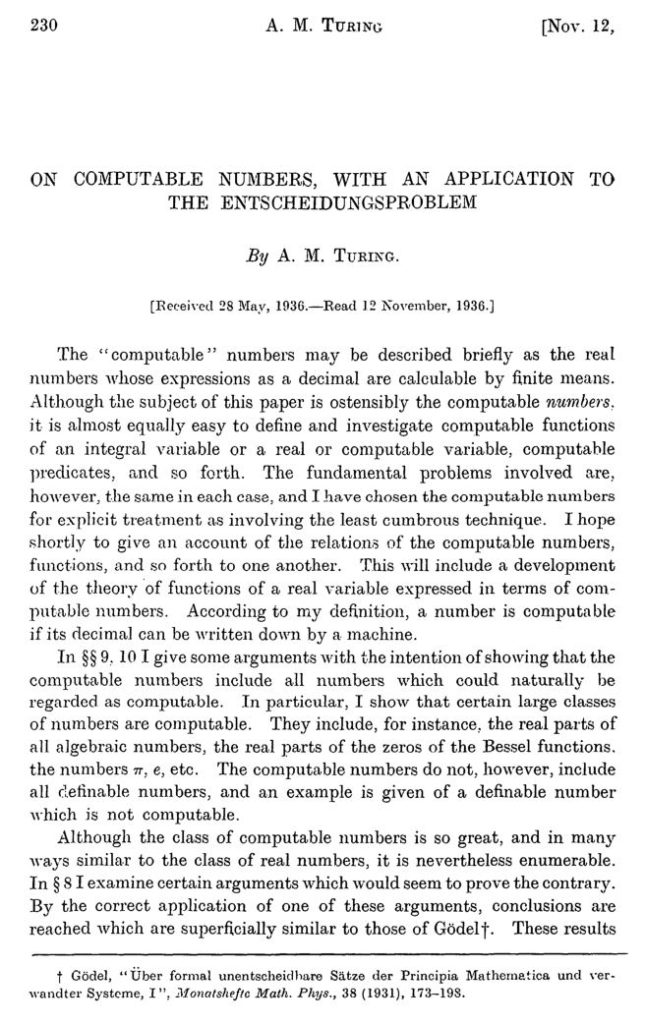

The talk will highlight ideas from Alan Turing’s phenomenal 1936 paper on computable numbers:

I have been reading Alan Turing’s paper, On computable numbers, with an application to the Entscheidungsproblem, an amazing classic, written by Turing while he was a student in Cambridge. This is the paper in which Turing introduces and defines his Turing machine concept, deriving it from a philosophical analysis of what it is that a human computer is doing when carrying out a computational task.

The paper is an incredible achievement. He accomplishes so much: he defines and explains the machines; he proves that there is a universal Turing machine; he shows that there can be no computable procedure for determining the validities of a sufficiently powerful formal proof system; he shows that the halting problem is not computably decidable; he argues that his machine concept captures our intuitive notion of computability; and he develops the theory of computable real numbers.

What I was extremely surprised to find, however, and what I want to tell you about today, is that despite the title of the article, Turing adopts an incorrect approach to the theory of computable numbers. His central definition is what is now usually regarded as a mistaken way to proceed with this concept.

Let me explain. Turing defines that a computable real number is one whose decimal (or binary) expansion can be enumerated by a finite procedure, by what we now call a Turing machine. You can see this in the very first sentence of his paper, and he elaborates on and confirms this definition in detail later on in the paper.

He subsequently develops the theory of computable functions of computable real numbers, where one considers computable functions defined on these computable numbers. The computable functions are defined not on the reals themselves, however, but on the programs that enumerate the digits of those reals. Thus, for the role they play in Turing’s theory, a computable real number is not actually regarded as a real number as such, but as a program for enumerating the digits of a real number. In other words, to have a computable real number in Turing’s theory is to have a program for enumerating the digits of a real number. And it is this aspect of Turing’s conception of computable real numbers where his approach becomes problematic.

One specific problem with Turing’s approach is that on this account, it turns out that the operations of addition and multiplication for computable real numbers are not computable operations. Of course this is not what we want.

The basic mathematical fact in play is that the digits of a sum of two real numbers

To see this, consider the following sum

If you add up the numbers digit-wise, you get

The problem, I claim, is that we cannot assign the digits of

In detail, suppose that we have committed to the idea that the initial digits of

Therefore, there is no algorithm to compute the digits of

One can make similar examples showing that multiplication and many other very simple functions are not computable, if one insists that a computable number is an algorithm enumerating the digits of the number.
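The obstacle can be made concrete with a small sketch. The pair of digit prefixes used here (0.3434... and 0.6565...) is an illustrative choice, and the helper names `prefix_value`, `sum_bounds` and `units_digit_settled` are hypothetical. The sketch computes the range of sums compatible with finitely many digits of each summand, and checks whether that range already settles the units digit of the sum.

```python
from fractions import Fraction

def prefix_value(digits):
    """Exact value of the finite decimal 0.d1d2...dn."""
    return sum(Fraction(d, 10 ** (i + 1)) for i, d in enumerate(digits))

def sum_bounds(a_digits, b_digits):
    """Smallest and largest sum compatible with the digits seen so far.

    A real whose expansion begins 0.d1...dn lies between that finite
    decimal and the same decimal plus 10**-n.
    """
    lo = prefix_value(a_digits) + prefix_value(b_digits)
    hi = lo + Fraction(1, 10 ** len(a_digits)) + Fraction(1, 10 ** len(b_digits))
    return lo, hi

def units_digit_settled(a_digits, b_digits):
    """True if every compatible sum admits the same digit before the point."""
    lo, hi = sum_bounds(a_digits, b_digits)
    # Sums at most 1 can be written 0.xxx...; sums at least 1 can be written 1.xxx...
    return hi <= 1 or lo >= 1

a = [3, 4] * 5   # first ten digits of 0.343434...
b = [6, 5] * 5   # first ten digits of 0.656565...
for n in range(1, 11):
    print(n, sum_bounds(a[:n], b[:n]), units_digit_settled(a[:n], b[:n]))
```

For these prefixes the compatible sums always straddle 1, so no finite number of input digits justifies committing to even the first digit of the output.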

So what is the right definition of computable number? Turing was right that in working with computable real numbers, we want to be working with the programs that compute them, rather than the reals themselves somehow. What is needed is a better way of saying that a given program computes a given real.

The right definition, widely used today, is that we want an algorithm not to compute exactly the digits of the number, but rather, to compute approximations to the number, as close as desired, with a known degree of accuracy. One can define a computable real number as a computable sequence of rational numbers, such that the
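As a minimal sketch of why the approximation approach makes addition computable, suppose a real is represented by a program that, on input n, returns a rational within 2^-n of the real. That accuracy convention and the names `const`, `sqrt2` and `add` are assumptions made for this illustration. To add two such programs, one simply asks each for one extra bit of accuracy.

```python
from fractions import Fraction
from math import isqrt

def const(q):
    """A rational number, viewed as a program approximating itself exactly."""
    q = Fraction(q)
    return lambda n: q

def sqrt2(n):
    """A rational within 2**-n of the square root of 2."""
    scale = 2 ** n
    # isqrt(2 * scale**2) is the integer part of sqrt(2) * scale.
    return Fraction(isqrt(2 * scale * scale), scale)

def add(x, y):
    """Sum of two approximation programs.

    Each summand is off by at most 2**-(n+1), so the total error
    is at most 2**-n, as required.
    """
    return lambda n: x(n + 1) + y(n + 1)

two_root_two = add(sqrt2, sqrt2)
print(float(two_root_two(30)))
```

Unlike the digit representation, nothing here ever has to commit to a digit of the output, so the carry problem never arises.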

But let me clear up a confusing point. Although I have claimed that Turing’s original definition of computable real number is incorrect, and I have explained how we usually define this concept today, the mathematical fact is that a real number

Let me quickly prove this. If a real number

So there is something of a philosophical problem here. The issue isn’t that Turing has misidentified particular reals as being computable or non-computable or has somehow got the computable reals wrong extensionally as a subset of the real numbers, since every particular real number has Turing’s kind of representation if and only if it has the approximation kind of representation. Rather, the problem is that because we want to deal with computable real numbers by working with the programs that represent them, Turing’s approach means that we cannot regard addition as a computable function on the computable reals. There is no computable procedure that when given two programs for enumerating the digits of real numbers

This is the third in a series of posts I’ve made recently concerning what I call the universal algorithm, which is a program that can in principle compute any function, if only you should run it in the right universe. Earlier, I had presented a few elementary proofs of this surprising theorem: see Every function can be computable! and A program that accepts exactly any desired finite set, in the right universe.

Woodin’s proof, however, is a little more involved than the simple arguments I provided for the universal algorithm alone. Please see the paper Blanck, R., and Enayat, A. Marginalia on a theorem of Woodin, The Journal of Symbolic Logic, 82(1), 359-374, 2017. doi:10.1017/jsl.2016.8 for a further discussion of Woodin’s argument and related results.

What I’ve recently discovered, however, is that in fact one can prove Woodin’s stronger version of the theorem using only the method of the elementary argument. This variation also allows one to drop the countability requirement on the models, as was done by Blanck and Enayat. My thinking on this argument was greatly influenced by a comment of Vadim Kosoy on my original post.

It will be convenient to adopt an enumeration model of Turing computability, by which we view a Turing machine program as providing a means to computably enumerate a list of numbers. We start the program running, and it generates a list of numbers, possibly finite, possibly infinite, possibly empty, possibly with repetition. This way of using Turing machines is fully Turing equivalent to the usual way, if one simply imagines enumerating input/output pairs so as to code any given computable partial function.
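The remark about coding a partial function by enumerating its input/output pairs can be sketched as a dovetailing procedure. This is a toy model only: a "computation" is assumed to be a Python generator that yields once per step and returns its output if it halts, and the names `enumerate_graph` and `half_if_even` are hypothetical.

```python
from itertools import count, islice

def enumerate_graph(step_fn):
    """Enumerate the (input, output) pairs of a partial function.

    step_fn(n) returns a generator that yields once per computation step
    and returns the output when, and if, the computation on n halts.
    """
    running = {}                          # input -> computation in progress
    for stage in count():
        running[stage] = step_fn(stage)   # begin work on a new input
        for n in list(running):
            try:
                next(running[n])          # advance this computation one step
            except StopIteration as halt:
                del running[n]
                yield (n, halt.value)     # the computation on n has halted

def half_if_even(n):
    """Toy partial function: n/2 on even n, undefined on odd n."""
    while n % 2 == 1:
        yield                             # never halts on odd inputs
    for _ in range(n):
        yield                             # simulate n steps of work
    return n // 2

print(list(islice(enumerate_graph(half_if_even), 4)))
```

Because every computation receives one further step at every later stage, a divergent input never blocks the enumeration of the pairs for inputs on which the function is defined.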

Theorem. (Woodin) There is a Turing machine program

It is statement (3) that makes this theorem stronger than merely the universal algorithm that I mentioned in my earlier posts and which I find particularly to invite philosophical speculation on the provisional nature of finiteness. After all, if in one universe the program

Proof. This is the new elementary proof. Let’s begin by recalling the earlier proof of the universal algorithm, for statements (1) and (2) only. Namely, let

(The reader may notice an apparent circularity in the definition of program

It is clear that the program

Let me now modify the program in order to achieve the key third property. Note that the program described above definitely does not have property (3), since once a nonempty sequence

Specifically, for the new modified version of the program

Succinctly: the program

We can still prove in

Again, you cannot refute that any particular finite sequence

So again, precisely because you cannot refute these statements, it follows that it is consistent with

Finally, for statement (3), suppose that

If alternatively

Notice that

Precisely because the model

Corollary. Let

Proof. (1) Fix

(2) If

![By photophilde (One small step) [CC BY-SA 2.0 (http://creativecommons.org/licenses/by-sa/2.0)], via Wikimedia Commons One_small_step_(3598325560)](http://jdh.hamkins.org/wp-content/uploads/2017/05/One_small_step_3598325560-300x200.jpg)

Last year I made a post about the universal program, a Turing machine program

This theorem is related to a very interesting theorem of W. Hugh Woodin’s, which says that there is a program

Victoria Gitman gave a very nice talk today on both of these theorems at the special session on Computability theory: Pushing the Boundaries at the AMS sectional meeting here in New York, which happens to be meeting right here in my east midtown neighborhood, a few blocks from my home.

What I realized this morning, while walking over to Vika’s talk, is that there is a very simple proof of the version of Woodin’s theorem stated above. The idea is closely related to an idea of Vadim Kosoy mentioned in my post last year. In hindsight, I see now that this idea is also essentially present in Woodin’s proof of his theorem, and indeed, I find it probable that Woodin had actually begun with this idea and then modified it in order to get the stronger version of his result that I shall discuss below.

But in the meantime, let me present the simple argument, since I find it to be very clear and the result still very surprising.

Theorem. There is a Turing machine program

Proof. The program

In short, the program

Clearly,

But meanwhile, assuming

Since you cannot refute any particular finite set as the accepting set for

Statement (3) now follows by a simple compactness argument. Namely, for any

One uses the Kleene recursion theorem to show the existence of the program

This theorem immediately implies the classical result of Mostowski and Kripke that there is an independent family of

The theorem also implies a strengthening of the universal program theorem that I proved last year. Indeed, the two theorems can be realized with the same program!

Theorem. There is a Turing machine program

Proof. The proof of statements (1) and (2) is just as in the earlier theorem. It is clear that

Woodin’s proof is more difficult than the arguments I have presented, but I realize now that this extra difficulty is because he is proving an extremely interesting and stronger form of the theorem, as follows.

Theorem. (Woodin) There is a Turing machine program

This is a much more subtle claim, as well as philosophically interesting for the reasons that he dwells on.

The program I described above definitely does not achieve this stronger property, since my program

Let me tell you about a fascinating paradox arising in certain infinitary two-player games of perfect information. The paradox, namely, is that there are games for which our judgement of who has a winning strategy or not depends on whether we insist that the players play according to a deterministic computable procedure. In the space of computable play for these games, one player has a winning strategy, but in the full space of all legal play, the other player can ensure a win.

The fundamental theorem of finite games, proved in 1913 by Zermelo, is the assertion that in every finite two-player game of perfect information — finite in the sense that every play of the game ends in finitely many moves — one of the players has a winning strategy. This is generalized to the case of open games, games where every win for one of the players occurs at a finite stage, by the Gale-Stewart theorem 1953, which asserts that in every open game, one of the players has a winning strategy. Both of these theorems are easily adapted to the case of games with draws, where the conclusion is that one of the players has a winning strategy or both players have draw-or-better strategies.
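For finite games without draws, the fundamental theorem can be illustrated by backward induction on the game tree. The following is a sketch under stated assumptions: the function `winner` and the toy subtraction game (take one or two tokens, and whoever takes the last token wins) are illustrative choices, not taken from the text.

```python
def winner(position, moves, outcome, player=0):
    """Return the player (0 or 1) who has a winning strategy.

    moves(position) lists the legal successor positions, outcome(position)
    names the winner at a terminal position, and `player` is the one to
    move. Every play of the game is assumed to end after finitely many moves.
    """
    successors = moves(position)
    if not successors:
        return outcome(position)
    # The player to move wins exactly when some move reaches a position
    # from which that same player wins; otherwise the opponent wins.
    if any(winner(s, moves, outcome, 1 - player) == player for s in successors):
        return player
    return 1 - player

# Toy game: a position is (tokens remaining, player to move).
def moves(position):
    tokens, mover = position
    return [(tokens - k, 1 - mover) for k in (1, 2) if k <= tokens]

def outcome(position):
    tokens, mover = position
    return 1 - mover      # the player facing an empty pile has lost

for n in range(1, 10):
    print(n, winner((n, 0), moves, outcome))
```

The recursion terminates precisely because every play is finite, which is the hypothesis of the theorem. In the toy game the first player wins exactly when the number of tokens is not a multiple of three.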

Let us consider games with a computable game tree, so that we can compute whether or not a move is legal. Let us say that such a game is computably paradoxical, if our judgement of who has a winning strategy depends on whether we restrict to computable play or not. So for example, perhaps one player has a winning strategy in the space of all legal play, but the other player has a computable strategy defeating all computable strategies of the opponent. Or perhaps one player has a draw-or-better strategy in the space of all play, but the other player has a computable strategy defeating computable play.

Examples of paradoxical games occur in infinite chess. We described such a paradoxical position in my paper Transfinite game values in infinite chess by giving a computable infinite chess position with the property that both players have drawing strategies in the space of all possible legal play, but in the space of computable play, white has a computable strategy defeating any particular computable strategy for black.

For a related non-chess example, let

For another example, suppose that we have a computable linear order

There are several proofs of open determinacy (and see my MathOverflow post outlining four different proofs of the fundamental theorem of finite games), but one of my favorite proofs of open determinacy uses the concept of transfinite game values, assigning an ordinal to some of the positions in the game tree. Suppose we have an open game between Alice and Bob, where the game is open for Alice. The ordinal values we define for positions in the game tree will measure in a sense the distance Alice is away from winning. Namely, her already-won positions have value

What is the computable analogue of the ordinal-game-value analysis in the computably paradoxical games? If a game is open for Alice and she has a computable strategy defeating all computable opposing strategies for Bob, but Bob has a non-computable winning strategy, then it cannot be that we can somehow assign computable ordinals to the positions for Alice and have her play the value-reducing strategy, since if those values were actual ordinals, then this would be a full honest winning strategy, even against non-computable play.

Nevertheless, I claim that the ordinal-game-value analysis does admit a computable analogue, in the following theorem. This came out of a discussion I had with Noah Schweber and Russell Miller during Noah’s recent visit to the CUNY Graduate Center. Let us define that a computable open game is an open game whose game tree is computable, so that we can tell whether a given move is legal from a given position (this is a bit weaker than being able to compute the entire set of possible moves from a position, even when this is finite). And let us define that an effective ordinal is a computable relation

Theorem. The following are equivalent for any computable game, open for White.

Proof. (

(

Corollary. A computable open game is computably paradoxical if and only if it admits an effective game value assignment for the open player, but only with computable pseudo-ordinals and not with computable ordinals.

Proof. If there is an effective game value assignment for the open player, then the value-reducing strategy arising from that assignment is a computable strategy defeating any computable strategy for the opponent. Conversely, if the game is paradoxical, there can be no such ordinal-assignment where the values are actually well-ordered, or else that strategy would work against all play by the opponent. QED

Let me make a few additional observations about these paradoxical games.

Theorem. In any open game, if the closed player has a strategy defeating all computable opposing strategies, then in fact this is a winning strategy also against non-computable play.

Proof. If the closed player has a strategy

Corollary. If an open game is computably paradoxical, it must be the open player who wins in the space of computable play and the closed player who wins in the space of all play.

Proof. The theorem shows that if the closed player wins in the space of computable play, then that player in fact wins in the space of all play. QED

Corollary. There are no computably paradoxical clopen games.

Proof. If the game is clopen, then both players are closed, but we just argued that any computable strategy for a closed player winning against all computable play is also winning against all play. QED

I’d like to share a simple proof I’ve discovered recently of a surprising fact: there is a universal algorithm, capable of computing any given function!

Wait, what? What on earth do I mean? Can’t we prove that some functions are not computable? Yes, of course.

What I mean is that there is a universal algorithm, a Turing machine program capable of computing any desired function, if only one should run the program in the right universe. There is a Turing machine program

Theorem. There is a Turing machine program

The proof is elementary, relying essentially only on the ideas of the classical proof of the Gödel-Rosser theorem. To briefly review, for any computably axiomatized theory

The first statement is the essential assertion of the Gödel-Rosser theorem, and it is easy to prove: if

Let’s now proceed to the proof of the theorem. To begin, we construct what I call the Rosser tree over a c.e. theory

Each theory

I shall now describe a universal algorithm for the case of computing binary functions. Consider the Rosser tree over the theory

If

Basically, the theory

Let me now explain how to extend the result to handle all functions

Finally, let me describe how to extend the result to work with models of set theory, rather than models of arithmetic. Suppose that

One can also get another kind of universality. Namely, there is a program

Let me now also discuss another form of universality.

Corollary

There is a program

Proof

We simply apply the main theorem inside

QED

This last application has a clear affinity with a theorem of Woodin’s, recently extended by Rasmus Blanck and Ali Enayat. See Victoria Gitman’s posts about her seminar talk on those theorems: Computable processes can produce arbitrary outputs in nonstandard models, continuation.

Alternative proof. Here is an alternative elegant proof of the theorem based on the comments below of Vadim Kosoy. Let

Since the function

I claim that the theory

For any function

Finally, note that in any model of

I shall be very pleased to speak at the colloquium and workshop Infinity, computability, and metamathematics, celebrating the 60th birthdays of Peter Koepke and Philip Welch, held at the Hausdorff Center for Mathematics May 23-25, 2014 at the Universität Bonn. My talk will be the Friday colloquium talk, for a general mathematical audience.

Abstract. I shall give a general introduction to the theory of infinite games, using infinite chess—chess played on an infinite edgeless chessboard—as a central example. Since chess, when won, is won at a finite stage of play, infinite chess is an example of what is known technically as an open game, and such games admit the theory of transfinite ordinal game values. I shall exhibit several interesting positions in infinite chess with very high transfinite game values. The precise value of the omega one of chess is an open mathematical question.

Slides | Schedule | Transfinite game values in infinite chess | The mate-in-n problem of infinite chess is decidable

This will be a talk for the Dartmouth Mathematics Colloquium on January 23rd, 2014.

Abstract. Using infinite chess (chess played on an infinite edgeless board) as a central example, I shall give a general introduction to the theory of infinite games. Infinite chess is an example of what is called an open game, a potentially infinite game which when won is won at a finite stage of play, and every open game admits the theory of transfinite ordinal game values. These values provide a measure of the distance remaining to an actual victory, and when they are known, the game values provide a canonical winning strategy for the winning player. I shall exhibit several interesting positions in infinite chess with high transfinite game values. The precise value of the omega one of chess, however, the supremum of all such ordinal game values, is an open mathematical question; in the case of infinite three-dimensional chess, meanwhile, Evans and I have proved that every countable ordinal arises as a game value. Infinite chess also illustrates an interesting engagement with computability issues. For example, there are computable infinite positions in infinite chess that are winning for white, provided that the players play according to a computable procedure of their own choosing, but which are no longer winning for white when non-computable play is allowed. Also, the mate-in-n problem for finite positions in infinite chess is computably decidable (joint work with Brumleve and Schlicht), despite the high quantifier complexity of any straightforward representation of it. The talk will be generally accessible for mathematicians, particularly those with at least rudimentary knowledge of ordinals and of chess.

Poster | Slides (8mb) | Transfinite game values in infinite chess | The mate-in-n problem of infinite chess is decidable

Students in my Infinitary Computability course will give talks on their term papers. Talks will be held at the CUNY Graduate Center, Room 3307, 9:30-11:30.

Monday, December 3rd

Monday, December 10th

This will be a contributed talk at the Turing Centenary Conference CiE 2012 held June 18-23, 2012 in Cambridge, UK.

Abstract. The mate-in-

This will be a talk at The Incomputable, a workshop held June 12-15, 2012 at the Kavli Royal Society International Centre at Chicheley Hall as a part of the program Semantics and Syntax: A Legacy of Alan Turing organized by the Isaac Newton Institute of Mathematical Sciences in Cambridge, UK.

Abstract. I will speak on the computable analogue of the theory of equivalence relations on the reals under Borel reducibility. The Borel theory has been enormously successful in clarifying the hierarchy of classification problems arising throughout mathematics. The computable analogue, meanwhile, appears to be particularly well-suited for an analysis of the c.e. instances of those problems, a rich context with many natural examples, such as the isomorphism relation on c.e. graphs or on computably presented groups. In particular, every equivalence relation on countable structures has its natural restriction to the c.e. instances, which may be viewed as an equivalence relation on the indices of those c.e. sets. Specifically, one equivalence relation E on the natural numbers is computably reducible to another F, if there is a computable function f such that n E m if and only if f(n) F f(m). This reduction notion has been introduced and studied independently by several different researchers and research groups. In this talk, I will describe recent joint work with Sam Coskey and Russell Miller that fills out parts of the hierarchy. An abundance of questions remain open.
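The definition of computable reducibility in the abstract can be illustrated with a toy instance. The relations and names below (`same_parity`, `equal`, `is_reduction_on`) are hypothetical examples chosen for this sketch, and a check over a finite range is only evidence for a reduction, not a proof.

```python
from itertools import product

def is_reduction_on(f, E, F, domain):
    """Check that n E m holds exactly when f(n) F f(m), over a finite domain."""
    return all(E(n, m) == F(f(n), f(m)) for n, m in product(domain, repeat=2))

def same_parity(n, m):
    """E: two numbers are equivalent when they have the same parity."""
    return (n - m) % 2 == 0

def equal(n, m):
    """F: plain equality on the natural numbers."""
    return n == m

# Mapping each number to its parity reduces E to F.
print(is_reduction_on(lambda n: n % 2, same_parity, equal, range(50)))
# The identity map does not: 0 and 2 are E-equivalent but not equal.
print(is_reduction_on(lambda n: n, same_parity, equal, range(50)))
```

In the setting of the talk the numbers are indices of c.e. sets and the relations are far more complex, but the shape of the requirement on f is the same.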

This will be a talk on May 18, 2012 for the CUNY Set Theory Seminar.

Infinite chess is chess played on an infinite edgeless chessboard. The familiar chess pieces move about according to their usual chess rules, and each player strives to place the opposing king into checkmate. The mate-in-

article | slides

In Fall 2012 I will teach a graduate course on infinitary computability theory in the Computer Science program at the CUNY Graduate Center. This course will be aimed at graduate students in computer science and mathematics who are interested in infinitary computational processes.

Infinitary computability, CSC 85020, Mondays, 9:30 – 11:30 am

CS course listings | this listing

This course will explore all the various infinitary theories of computability, including infinite time Turing machines, Blum-Shub-Smale computability, Büchi automata, ordinal register machines and others. The focus will be on introducing the computability models and comparing them to each other and to standard concepts of computability. In the early part of the course, we shall review the standard finitary computational models, before investigating the infinitary supertask analogues.

Students wishing to prepare for the course should review their understanding of Turing machines and the other theoretical machine models of computation.

Some of my articles on infinitary computability | Student talks on their infinitary computability term papers