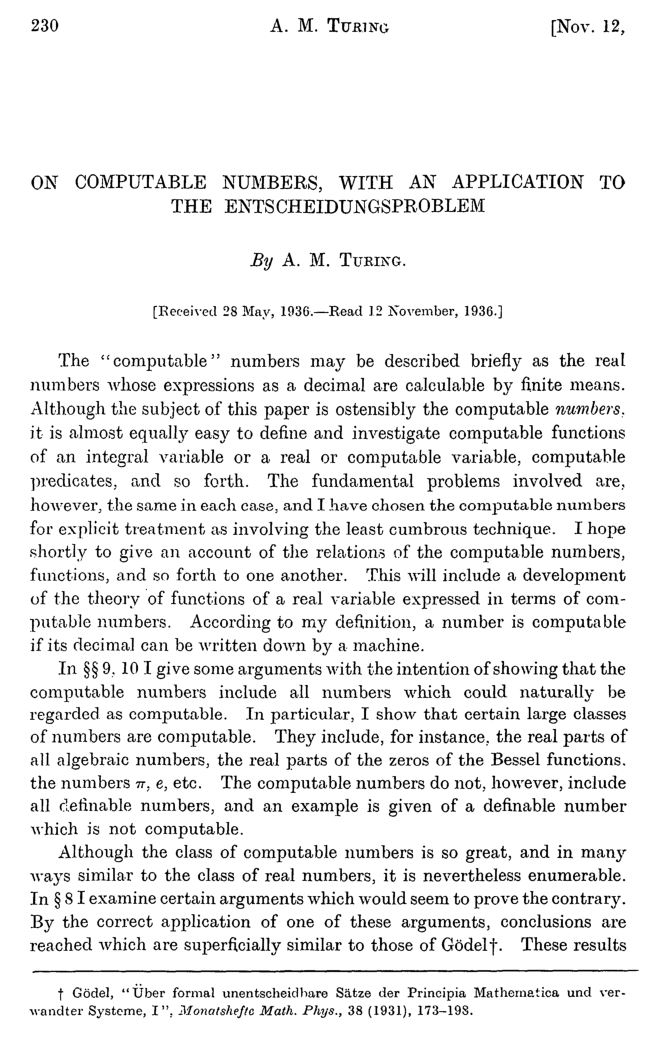

I have been reading Alan Turing’s paper, “On Computable Numbers, with an Application to the Entscheidungsproblem,” an amazing classic, written by Turing while he was a student in Cambridge. This is the paper in which Turing introduces and defines his Turing machine concept, deriving it from a philosophical analysis of what it is that a human computer is doing when carrying out a computational task.

The paper is an incredible achievement. He accomplishes so much: he defines and explains the machines; he proves that there is a universal Turing machine; he shows that there can be no computable procedure for determining the validities of a sufficiently powerful formal proof system; he shows that the halting problem is not computably decidable; he argues that his machine concept captures our intuitive notion of computability; and he develops the theory of computable real numbers.

What I was extremely surprised to find, however, and what I want to tell you about today, is that despite the title of the article, Turing adopts an incorrect approach to the theory of computable numbers. His central definition is what is now usually regarded as a mistaken way to proceed with this concept.

Let me explain. Turing defines a computable real number as one whose decimal (or binary) expansion can be enumerated by a finite procedure, by what we now call a Turing machine. You can see this in the very first sentence of his paper, and he elaborates on and confirms this definition in detail later on in the paper.

He subsequently develops the theory of computable functions of computable real numbers. These functions are defined not on the reals themselves, however, but on the programs that enumerate the digits of those reals. Thus, for the role they play in Turing’s theory, a computable real number is not actually regarded as a real number as such: to have a computable real number in Turing’s theory is to have a program for enumerating the digits of a real number. And it is this aspect of Turing’s conception of computable real numbers where his approach becomes problematic.

One specific problem with Turing’s approach is that on this account, it turns out that the operations of addition and multiplication for computable real numbers are not computable operations. Of course this is not what we want.

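The basic mathematical fact in play is that the digits of a sum of two real numbers $a+b$ are not always determined by finitely many digits of $a$ and $b$, no matter how many one inspects.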

To see this, consider the following sum

If you add up the numbers digit-wise, you get

The problem, I claim, is that we cannot assign the digits of $a+b$ in a way that depends only on finitely many digits of $a$ and of $b$.

In detail, suppose that we have committed to the idea that the initial digits of

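Therefore, there is no algorithm to compute the digits of $a+b$ from the digits of $a$ and $b$, and hence none that computes them from programs enumerating those digits.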

One can make similar examples showing that multiplication and many other very simple functions are not computable, if one insists that a computable number is an algorithm enumerating the digits of the number.
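To make the carry problem concrete, here is a small Python sketch. It assumes, purely for illustration, that the two summands begin with the repeating patterns 0.3434... and 0.6565..., so that every digit-wise sum is 9. The helper names `pattern`, `decimal_value` and `leading_digit_of_sum` are hypothetical. However long the shared prefix is, a single further digit can push the integer part of the sum either way.

```python
from fractions import Fraction

def decimal_value(digits):
    """Exact value of the finite decimal 0.d1 d2 ... dk."""
    return sum(Fraction(d, 10 ** (i + 1)) for i, d in enumerate(digits))

def pattern(pair, k):
    """First k digits of the repeating expansion 0.pqpqpq... (assumed example)."""
    return [pair[i % 2] for i in range(k)]

def leading_digit_of_sum(a_digits, b_digits):
    """Integer part of a + b for two finite decimal expansions."""
    total = decimal_value(a_digits) + decimal_value(b_digits)
    return total.numerator // total.denominator

for k in (4, 10, 50):
    a_prefix = pattern((3, 4), k)   # 0.3434...
    b_prefix = pattern((6, 5), k)   # 0.6565...
    # Same first k digits, but the next digit of each summand is 7: a carry occurs.
    carry = leading_digit_of_sum(a_prefix + [7], b_prefix + [7])
    # Same first k digits, but the next digit of each summand is 2: no carry.
    no_carry = leading_digit_of_sum(a_prefix + [2], b_prefix + [2])
    print(k, carry, no_carry)
```

Each line prints the prefix length followed by 1 and then 0: the digit before the decimal point of the sum differs between the two continuations, even though the inputs agree on the first `k` digits.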

So what is the right definition of computable number? Turing was right that in working with computable real numbers, we want to be working with the programs that compute them, rather than the reals themselves somehow. What is needed is a better way of saying that a given program computes a given real.

The right definition, widely used today, is that we want an algorithm not to compute exactly the digits of the number, but rather, to compute approximations to the number, as close as desired, with a known degree of accuracy. One can define a computable real number as a computable sequence of rational numbers, such that the $n$th number is within $1/2^n$ of the target number.
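Under the approximation view, addition becomes straightforward. The following is a minimal sketch, assuming a real is represented as a Python function that takes `n` and returns a rational within $1/2^n$ of the target. The names `from_fraction`, `sqrt2` and `add` are hypothetical, chosen for this illustration only.

```python
from fractions import Fraction
from math import isqrt

def from_fraction(q):
    """A rational number, viewed as a (constant) sequence of approximations."""
    q = Fraction(q)
    return lambda n: q

def sqrt2(n):
    """A rational within 2**-n of sqrt(2): floor(sqrt(2) * 2**n) / 2**n."""
    scale = 2 ** n
    return Fraction(isqrt(2 * scale * scale), scale)

def add(a, b):
    """Sum of two reals given by approximation functions.

    Asking each summand for accuracy 2**-(n+1) gives a total
    error of at most 2**-(n+1) + 2**-(n+1) = 2**-n.
    """
    return lambda n: a(n + 1) + b(n + 1)

x = add(sqrt2, from_fraction(Fraction(1, 3)))
print(float(x(20)))  # a rational within 2**-20 of sqrt(2) + 1/3

# The troublesome sum from the digit view: 34/99 = 0.3434..., 65/99 = 0.6565...
one = add(from_fraction(Fraction(34, 99)), from_fraction(Fraction(65, 99)))
print(one(10))
```

The point of the design is that `add` never has to commit to a digit. It only has to get close enough, so the question of whether a carry will eventually occur does not arise.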

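But let me clear up a confusing point. Although I have claimed that Turing’s original definition of computable real number is incorrect, and I have explained how we usually define this concept today, the mathematical fact is that a real number $x$ has a computable presentation in Turing’s sense (its digits can be computably enumerated) if and only if it has a computable presentation in the contemporary sense (there is a computable sequence of rational approximations with known accuracy).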

Let me quickly prove this. If a real number

So there is something of a philosophical problem here. The issue isn’t that Turing has misidentified particular reals as being computable or non-computable or has somehow got the computable reals wrong extensionally as a subset of the real numbers, since every particular real number has Turing’s kind of representation if and only if it has the approximation kind of representation. Rather, the problem is that because we want to deal with computable real numbers by working with the programs that represent them, Turing’s approach means that we cannot regard addition as a computable function on the computable reals. There is no computable procedure that when given two programs for enumerating the digits of real numbers $a$ and $b$ returns a program for enumerating the digits of the sum $a+b$.
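One direction of the equivalence between the two kinds of representation is uniform and easy to sketch: a program enumerating digits can be turned mechanically into a program producing approximations. The sketch below assumes a real in the unit interval given as a hypothetical function `digit(i)` returning the digit in decimal place `i + 1`, and the name `to_approximations` is likewise an illustration only.

```python
from fractions import Fraction

def to_approximations(digit):
    """Turn a digit enumerator into an approximation function.

    Truncating after n decimal places leaves an error of at most
    10**-n, which is no larger than 2**-n.
    """
    def approx(n):
        return Fraction(sum(Fraction(digit(i), 10 ** (i + 1)) for i in range(n)))
    return approx

third = to_approximations(lambda i: 3)   # digits of 0.3333...
print(third(5))                          # the truncation 0.33333 as a fraction
```

Going the other way runs into the same carry ambiguity as addition, which is consistent with the equivalence holding for each individual real without providing a computable translation between the programs.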