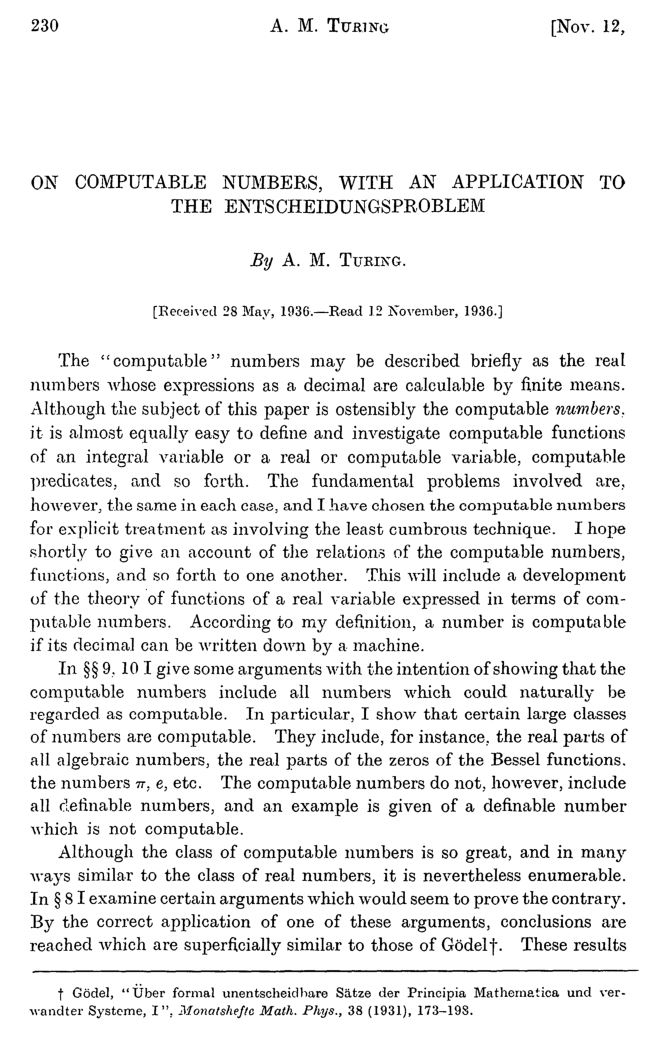

I have been reading Alan Turing’s paper, On computable numbers, with an application to the entsheidungsproblem, an amazing classic, written by Turing while he was a student in Cambridge. This is the paper in which Turing introduces and defines his Turing machine concept, deriving it from a philosophical analysis of what it is that a human computer is doing when carrying out a computational task.

The paper is an incredible achievement. He accomplishes so much: he defines and explains the machines; he proves that there is a universal Turing machine; he shows that there can be no computable procedure for determining the validities of a sufficiently powerful formal proof system; he shows that the halting problem is not computably decidable; he argues that his machine concept captures our intuitive notion of computability; and he develops the theory of computable real numbers.

What I was extremely surprised to find, however, and what I want to tell you about today, is that despite the title of the article, Turing adopts an incorrect approach to the theory of computable numbers. His central definition is what is now usually regarded as a mistaken way to proceed with this concept.

Let me explain. Turing defines that a computable real number is one whose decimal (or binary) expansion can be enumerated by a finite procedure, by what we now call a Turing machine. You can see this in the very first sentence of his paper, and he elaborates on and confirms this definition in detail later on in the paper.

He subsequently develops the theory of computable functions of computable real numbers, where one considers computable functions defined on these computable numbers. The computable functions are defined not on the reals themselves, however, but on the programs that enumerate the digits of those reals. Thus, for the role they play in Turing’s theory, a computable real number is not actually regarded as a real number as such, but as a program for enumerating the digits of a real number. In other words, to have a computable real number in Turing’s theory is to have a program for enumerating the digits of a real number. And it is this aspect of Turing’s conception of computable real numbers where his approach becomes problematic.

One specific problem with Turing’s approach is that on this account, it turns out that the operations of addition and multiplication for computable real numbers are not computable operations. Of course this is not what we want.

The basic mathematical fact in play is that the digits of a sum of two real numbers

To see this, consider the following sum

If you add up the numbers digit-wise, you get

The problem, I claim, is that we cannot assign the digits of

In detail, suppose that we have committed to the idea that the initial digits of

Therefore, there is no algorithm to compute the digits of

One can make similar examples showing that multiplication and many other very simple functions are not computable, if one insists that a computable number is an algorithm enumerating the digits of the number.

So what is the right definition of computable number? Turing was right that in working with computable real numbers, we want to be working with the programs that compute them, rather than the reals themselves somehow. What is needed is a better way of saying that a given program computes a given real.

The right definition, widely used today, is that we want an algorithm not to compute exactly the digits of the number, but rather, to compute approximations to the number, as close as desired, with a known degree of accuracy. One can define a computable real number as a computable sequence of rational numbers, such that the

But let me clear up a confusing point. Although I have claimed that Turing’s original definition of computable real number is incorrect, and I have explained how we usually define this concept today, the mathematical fact is that a real number

Let me quickly prove this. If a real number

So there is something of a philosophical problem here. The issue isn’t that Turing has misidentified particular reals as being computable or non-computable or has somehow got the computable reals wrong extensionally as a subset of the real numbers, since every particular real number has Turing’s kind of representation if and only if it has the approximation kind of representation. Rather, the problem is that because we want to deal with computable real numbers by working with the programs that represent them, Turing’s approach means that we cannot regard addition as a computable function on the computable reals. There is no computable procedure that when given two programs for enumerating the digits of real numbers

Why can Turing not just use a radix with

Rational numbers are no problem for Turing or anyone. The interesting case of computable numbers is with irrational numbers, even transcendental numbers. Trying to adopt a variant representation for rational numbers will not avoid the main difficulty my post is about, since you cannot tell from finitely much information of

It is not clear to me that Turing’s definition/explication of computable real number can be said to be wrong, since it is extensionally correct. It seems to be rather his definition of “computable function from the reals to the reals” that is overly restrictive and therefore inadequate to explain the intuitive concept. On his definition, if I understand it well, a function is computable if there is a computable function that maps presentations of the arguments in Turing’s sense (program enumerating the digits of the arguments) to a presentation of the value in Turing’s sense (a program enumerating the digits of the value). That’s a small subclass of (so, not coextensional to) the computable functions according to the modern definition. So I do not see a philosophical problem after all.

I don’t agree fully with this comment, and I still think it is correct to say that Turing has the wrong definition.

The reason is that we do not ultimately think of a computable real number as a particular kind of real number, and despite his initial definition, neither does Turing. When it comes to defining operations on the computable real numbers, which is what Turing is aiming at, he explicitly abandons the idea that these are defined on the real numbers themselves or some of them. Rather, operations on the computable real numbers are functions defined on the programs that generate such reals. So a computable real number, for Turing as well as for the contemporary theory, is a program for generating a real number.

And if one adopts Turing’s view that the programs we should be looking at are the programs that generate the successive digits of the real number, then this is precisely where he goes wrong, since in this case, the ordinary operations of addition and multiplication will not be computable, for the reasons I explained in the post.

Turing seems in his article to assume that they would be computable, and much more, since he is using the tangent function and infinite sums and so on.

The philosophical question here as I see it is: what does it mean to have a computable real number? Does it mean to have a particular real number? No, it means to have an algorithm that is capable of describing a real number.

Thank you for posting this very interesting piece; it is a fascinating topic and your explanation of it was (as usual) very clear and accessible. I think it is a philosophically interesting fact that two definitions can be extensionally equivalent but not equally ‘correct’ or ‘good’ mathematically speaking. There might be many different kinds of reason why one of a pair of equivalent definitions is preferable to the other. One might be more explanatory, or better support generalisations, or provide a better foundation for a certain kind of theory and so on; it would be interesting to consider further examples and try to classify them.

As a footnote, I think it’s worth mentioning that Turing himself came to realise that the binary digit representation of real numbers he used in ‘On Computable Numbers’ was problematic. He discusses this in ‘On Computable Numbers, with an Application to the Entscheidungsproblem. A Correction’ (Proc. London Math. Soc. 43 (1937), 544-6; also reprinted in Jack Copeland (ed) ‘The essential Turing’ pp. 94-96).

Turing’s discussion of the problem (and his solution to it) in this paper is very brief and somewhat cryptic. There is an excellent, detailed discussion of it in Guido Gherardi, ‘Alan Turing and the Foundations of Computable Analysis’ , Bulletin of Symbolic Logic 17(3) 2011, 394-430.

In the ‘correction’ article, Turing does not discuss the problem you described, that the basic operations on real numbers cannot be represented as computable functions when the binary digit representation is used. Instead he discusses the following problem: although we might have a program P that computes a sequence of rational approximations to a real number (which we can prove must be computable) there is no program which takes as input programs like P and produces as output a program that enumerates the binary digits of

(which we can prove must be computable) there is no program which takes as input programs like P and produces as output a program that enumerates the binary digits of  . There must *be* some program that enumerates the digits of

. There must *be* some program that enumerates the digits of  but there’s no general way of constructing it from a program that computes the sequence of rational approximations. (So the situation seems at least analogous to the problem that there is no program to compute the digits of a + b from programs that enumerate the digits of a and of b).

but there’s no general way of constructing it from a program that computes the sequence of rational approximations. (So the situation seems at least analogous to the problem that there is no program to compute the digits of a + b from programs that enumerate the digits of a and of b).

Turing writes “This disagreeable situation can be avoided by modifying the manner in which computable numbers are associated with computable sequences, the totality of computable numbers being left unaltered.” In other words, he offers an alternative definition of ‘computable number’ which avoids this problem but which is equivalent to the orginal definition in the sense that a real number is computable in the original sense if and only if it is computable in this new sense – just as described in your piece. The alternative definition he suggests is not, I believe, the same as any currently standard definition, but is equally workable. He considers sequences of 0s and 1s of the form:

and associates with each such sequence the real number

So for example the sequence 10 1 corresponds to 2/3, 10 0 corresponds to -2/3, 1110 01 corresponds to 2 + 4/9, 10 11111… corresponds to 2/3 + 4/9 + 8/27 + … = 2 and so on.

A Turing machine that prints a sequence of 1s and 0s of this form is then said to compute the corresponding real number; a real number is computable if there is a machine that computes it. Turing cites Brouwer as the source for “this use of overlapping intervals for the definition of real numbers”. (See Gherardi section 3.3 for discussion of this).

Turing states (but does not prove) that this new definition solves the problem he discussed. Gherardi proves (section 3.4) that Turing’s representation is *computably equivalent* to one of the current standard representations (the Cauchy representation) and so it also solves the problem described in your piece – the standard operations of addition, multiplication and so on are now computable functions.

Thank you very much for this knowledgeable and informative comment.

Just a small comment to add: the fact that there is no algorithm A that takes an algorithm P computing rational approximations to a real number x and spits out a program A(P)=P’ that outputs the digits of x is not merely analogous to the issue that addition is not computable in the digit representation – it is actually a consequence thereof.

For if there were an algorithm A as above, then there would be an algorithm for computing the digits of x+y from the digits of x and the digits of y. Namely: suppose Q,R are algorithms outputting the digits of x (resp., digits of y). Then, as in the original post, these can be converted (algorithmically) into algorithms computing rational approximations of x and y, respectively. The sum of such rational-approximation algorithms produces a rational-approximation algorithm P for x+y. And then A(P) would be a program outputting the digits of x+y.

I think that it is the idea that there are definitions of a term by a different coextensional one that are not *correct* that I was at pains to deny. There are of course interesting questions about how a certain notion and theory are best formalised/analysed when we are not sure at the outset about what sort of entities we want to talk about (reals or programs?). What I got wrong, as Prof. Hamkins pointed out, is that I assumed that the definition had to single out a class of real numbers. But then, on Turing’s initial definition and on the modern one define, the computable numbers are two different, in fact disjoint classes of programs. They are not coextensional at all. Therefore, again, this does not provide an example of two definitions that are extensionally equivalent but of which one is incorrect.

Yes, I think we basically agree. I suppose one can say that I have over-stated the case to a certain extent, since it isn’t actually completely clear from Turing’s article whether he thought of it just like this. After all, at least on the surface, he does define a computable real number as a kind of real number. But then when one gets into how one might use these, the real number gets replaced by the program.

So in my treatment of this issue in the book I am currently writing on the philosophy of mathematics, I take a somewhat softer tone, while making essentially the same points.

I find these issues quite subtle and interesting, for both mathematical and philosophical reasons.

Just wanted to put up on the site the concerns I had mentioned in our email exchange.

I think it is incorrect to say that +(a, b) as a function on computable numbers is itself noncomputable in Turing’s original definition. This isn’t to say I disagree with the proof presented, which I believe correctly shows that a digit adder cannot produce digits finitely. I think that’s an important point to share about the nature of digits, and something that should be generally discussed when considering digit representations and the use of digit-based algorithms – I just don’t think it addresses computability or what Turing discusses in his paper.

My concern is that I think a type error is happening. I think there are two distinct object types being discussed here:

Function Types

\alpha: N \to {Digit + Placeholder}

Objects of this type do the actual work of converting a request for a “place” as input to the output of the corresponding digit (or decimal point, for completeness and to not complicate the interface more). Generally, they are input \to output types, and we often describe them through their graph, a subcollection of “input X output”. Identity is done at the level of the input and output spaces, so the graph delimits the individual instances of this type.

Program Specification Types

Specification

Objects of this type are the programs written to perform a specific function. They may be many complex structures (parse trees, state graphs, …) but often they begin just as a finite string type on which a formal grammar has been defined that defines the functionality of the abstract machine which runs the code. Identity, then, is at the level of the string.

Here we see a couple of distinctions:

– The function is described by data that may have infinite information content (it’s graph). The specification, though, is always a finite string.

– There is only a single function for each transformation. The function that doubles integers is a single specific function. There are many programs for any given function, though. There is one that calculates “x + x” and one for “2 * x” and one written just for fun that does “4 * x / 2”.

Why do I think this matters? I think Turing knows the difference between a function and it’s program, and I think he made great effort to keep this distinction clear in his papers. For him, a computable number is a program. It’s important that it represent a finite piece of information – it’s kind of the point for it to be “graspable”.

But in this proof that addition is not computable with Turing’s original definition, I see appeal to the function, not the program. I see it critically relies on the fact that the function cannot give “all of it’s information about the number” in a finite way. And I think this is not the idea that Turing had.

In fact, Turing does clearly define a “computable function” in his paper. I think it makes some things clear. In the paper, he starts with the notion of “satisfactory numbers” – these are the Godel numbers (or “description number”) for computable real programs whose implementation will finitely produce their output (he calls them “circle-free”, as in free of infinite loops). “Satisfactory” is a category with witness (finite retrieval). If n is satisfactory, then M(n) represents the program that n is the Godel number for, so it is a program that represents a computable real between 0 and 1, which he calls \gamma_n. Turing then takes a typically Turingesque approach of turning this into a direct enumeration of the computable reals through a transcendental mapping (tangent taking [0, 1) to [0, oo)), that Turing calls \alpha_n.

Then he looks at functions \phi(n) where satisfactory inputs n give satisfactory outputs. Turing gives this definition of a computable function:

“Then the function defined by f(\alpha_n)=\alpha_(\phi(n)) is a computable function and all computable functions of a computable variable are expressible in this form.”

This descriptive definition through satisfactory numbers, again, is witnessable and makes no appeal to any limits of the representation of the computable real in digit enumeration. Indeed, it is indexed by the Godel numbering of the program specification.

Turing often makes reference to the object domain of representation pretty clearly in his paper:

“A computable sequence \gamma is determined by a description of a machine which computes \gamma”

So what does this matter? How is this related to the issue with a digit adder explored here?

A program for a computable real that calculates digits reveals all of it’s information about the computable real in finite information. But if you try to use the function, finite inputs only give finite outputs. One place where this happens is these two programs:

rep_{1/3}(n) =

{

switch(n)

case 0: return 0;

case 1: return “.”;

else: return 3;

}

rep_{2/3}(n) =

{

switch(n)

case 0: return 0;

case 1: return “.”;

else: return 6;

}

For these programs, we can finitely prove that rep_{1/3} can be represented by the rational (1, 3) (in pair representation), and similarly rep_{2/3} has rational representative (2, 3). We can prove that mechanically. There are proof verification systems that can be trained to that today. This can all be done finitely, using finite information, which I think is one of the big points of computable reals.

And with those rational representations we can prove (1, 3) + (2, 3) = (3, 3) = (1, 1). Exactly. Precisely. In finite steps.

And you cannot do that finitely by consuming outputs to finite inputs of the functional type. You can never consume enough digits to know the rational representation is (1, 3) precisely.

Now, Turing’s definitions of computability are existential, not constructive. This is a big part of the trick of using recursively enumerable sequences – you can know about the existence of certain kind of correspondences between elements in different enumerations without knowing how to construct that correspondence, simply from completeness and accessibility features of the enumerations. We can’t always know the identity through a formal proof, but we still can know the identity exists somewhere. For example, we might know there that some recursive application of a computable function of a computable real converges to a computable real, but if you are looking for a specific functional form for the representation (say as a program that produces binary digits, as in the representation used by Turing), you may not be able to derive what that program would look like.

In fact, the “A Correction” paper where Turing offers a different representation from the binary digit enumeration rep gives this representational problem as the reason (and not any concerns with the computability of addition or of functions generally). The corrections were really a few technical details in some of the proofs of the original paper (all fairly minor), and the representational section is just about this “disagreeable situation” that you may have a representation of a computable real number through upper/lower sequences of rationals that converge to that real (as fast as the binary decimals used throughout the paper – i.e. the distance between same-index elements in the sequence of rationals decreases by at least half each step) and not be able to determine which program represents that in the digit production sense.

In fact, Turing is pointing out the digit ambiguity here. But this isn’t about a computable binary operator like addition and this really isn’t talking about computability at all – very explicitly, Turing points out first in his “A” point of that section that everything is fully computable here. He is talking about provable representation. This section is not about any correction to any claims about computability, it’s just that “disagreeable situation” with extracting the desired kind of representative from a different given one.

Turing’s solution is to use a similar representation to digit expansion, but instead map 0 to -1 and expand as if it were ternary decimals, with a header mapping to peel some digits off. It’s a typical Turingesque solution to separate out the representation and avoid the obstruction. He is making sure the ambiguity of infinite trailing 1s and a lead 1 with infinite trailing 0s is removed by ensuring the two computable sequences actually refer to separate computable reals. He points out, as he does in many of his Turingesque solutions, that the choice is wide open and he is just choosing an example mechanism.

Anyway, to summarize my points:

– Turing’s definition of computable reals as programs that specify a digit enumerator is not a limit on computability. All computable reals under this definition are the same as the computable reals under a definition built on rational approximants, say, or other representations with the right completeness / accessibility properties.

– Turing’s definition of computable functions of computable variables makes no requirements on how the information of the computable variables is used. Indeed, ultimately the program may just be turned into it’s Godelization (an integer) and that used as an increment into any given computable sequence of the Godelization of other programs representing computable numbers.

– So none of this is really about “addition not being computable in Turing’s sense” and that this original representation was a horrible mistake or any of that characterization. I think it is wrong from a sense pedagogical position to claim that, and students who read that will take the wrong lessons from it about computability.

The reason this point is important me is several fold. First, as a computer scientist, I often see a lot of misunderstandings from people new to the field that real numbers only have representatives in some kind of fixed-size floating point representation, and simulations and computations often suffer from stability issues due to this belief. Computer programs have the ability to take many kinds of representations, including special representations of rationals, finite decimal sequences, even symbolic representations that preserve the definition of the number in ways that provide perfect representation. For example, I often do not want to represent “sqrt(2)” as “1.414…” or even as 1, 7/5, 141/100, … but instead find the best representation is “sqrt(2)” so when I want to calculate (sqrt(2))^2 I can know it is 2 exactly. I think one of the big points of computable numbers is to realize that all of the real numbers we actually work with to do computations on have a finite specification. I think people often get lost in the idea that real numbers involve some higher infinity of possibilities

But also, as a constructivist with ultrafinitist tendencies, I generally hold those algorithms that work finitely as being where meaning lies. I take the work of Turing (and Post and Kleene and others here) as pretty foundational to modern schools of constructivism, and look for it’s point to be represented accurately. Constructivists tend to spend a lot of time correcting misconceptions of those who have developed strong platonic intuitions of completed infinities. When we start to blur the line of “what is possible” by appeals to infinities that aren’t inherently a part of the problem space, you can get incorrect conclusions like I believe the statement of the results here shows. You can’t ever multiply the infinite digits of “1.414…” together to get an exact 2, nor can you square the infinite rational approximants to get that result, but if you have the right finite representation, you certainly can, even if that finite representation specifies a function that produces one of those infinite forms.

Anyway, I wanted to write my thoughts down on this for open critique. Although I studied formal langauge theory (cartesian closed categories, domain theory, etc.) in my main focus in school, I am not a professional mathematician and much of my studies are extracurricular. So I may just be a crank, and it’s important to sanitise the ideas here under any scrutiny it may warrant. So putting it on the site as we discussed in email.

Unfortunately, I believe that you haven’t understood my argument, since several remarks in your comment indicate a misunderstanding of the argument via the Kleene recursion theorem. For example, you characterize my argument as using the (infinite) function instead of the program, but this is simply wrong—the argument via the Kleene recursion theorem is at its heart a claim about the programs, showing that there is no computable process that takes two (finite) programs as input and returns a (finite) program that when run, would enumerate the digits of the sum of the real numbers enumerated by the input programs, if indeed the input programs were programs that enumerate the digits of respective real numbers. In fact I explained this point to you and others in several ways in our email exchange, but in reply received only several very long and insistent messages making the same points you make here, but without any indication that you have grasped the central argument you seem to be criticizing. I am sorry to have failed, but I did try…

I do think there is some amount of talking past each other from our different interpretations of what is being discussed. Let me be clear.

I believe your appeal to the Kleene Recursion Theorem is about the following circumstance: you start with the claim that there is an adder +(a, b) that consumes finite _outputs_ from a and b and is able to produce the nth digit of a+b. Then, you show, given this + and a computable real a, there exists a computable real b that will produce a different nth digit than the actual sum of the two computable reals. Your use of the Kleen Recursion Theorem is to assert that existence, no matter the way +(a, b) consumes finite digits that are _output_ by a and b to produce it’s output finitely. So the existence of such an adder is contradicted.

The reason you appeal to this is because the Kleene Recursion theorem is specifically about the existence of programs, not functions, and your use is specifically to show the existence of a computable real program “b” that meets this characterization. So when I talk about programs not functions, it seems like I don’t understand the Kleene Recursion theorem or your point here, and when I mention the infinite information content of the function _outputs_, it feels like I am missing the point that the adder, for it to be a computable function, has to consume only finite _outputs_ for any digit it outputs, not the whole sequence.

Does this feel like the objection your are making? Is this a fair characterization of your frustration with our exchange?

Because I keep challenging the consumption of “outputs” of the computable real programs, and I’ve underlined above in my description where that matters. That is the type error I think is being made. In fact, you say this many times:

“The basic mathematical fact in play is that the digits of a sum of two real numbers is not a continuous function of the digits of a and b separately; in some cases, one cannot say with certainty the initial digits of a+b, knowing only finitely many digits, as many as desired, of a and b.”

“The problem, I claim, is that we cannot assign the digits of a+b in a way that will depend only on finitely many digits each of a and b. The basic problem is that if we inspect only finitely many digits of and , then we cannot be sure whether that pattern will continue, whether there will eventually be a carry or not, and depending on how the digits proceed, the initial digits of can be affected.”

“Therefore, there is no algorithm to compute the digits of a+b continuously from the digits of a and b separately.”

But.. I am saying a computable function doesn’t need to consume any outputs of the programs for the computable reals that are it’s input. There does need to be lamda substitutibility or any plugging in of the functions of a and b into +(a, b). That’s not how Turing defines computable functions of computable variables.

What I keep saying is that your proof relies fundamentally on that “consumption of outputs of the programs” and not “consumption of the programs themselves.” And I’ve given examples, like that in my response above about 1/3 and 2/3 above, that I don’t think you’ve seen. I think this is the fault of my long (long!) walls of text, but I tried to explain on Twitter at one point too, and I may just be fundamentally broken on abbreviating mathematical points.

My problem with your use of the Kleene Recursion theorem is that it is _fundamentally_ built around this idea that +(a,b) outputs digits after “consuming digits from a and b”, because the whole point is to change the pattern of the output to something else “after +(a, b) has output a digit”. But that doesn’t work with 1/3 and 2/3 example at all, where no (none!, 0) digits were consumed to give the output that 1/3 + 2/3 = 1.00000… The programs were consumed, not the digits. There was nothing to change “after a certain number of digits were consumed”. In fact +(a, b) can still be finite and get the answer completely, something no digit consuming adder could ever do. And the result can still be a program that outputs digits – i.e. a computable real according to Turing.

Maybe I really am missing something about your use of the KRT here. I am open to that. But I haven’t seen any engagement at all about the 1/3, 2/3 example I gave, I haven’t seen any acknowledgement of my point about how the programs provide more information, and although I have tried in every communication with you to reframe what you have mentioned to me to see if it matches what you tried to communicate to me (including my summary of what I think the Kleene Recursion argument is above), I haven’t seen the same and I keep being left with the feeling that you aren’t understanding my point about the programs at all. And I fully recognise this is my fault. I am not a great communicator in mathematics, and am not a mathematician by trade.

I think one thing I can do is reach out on Mastodon to see if there are any mathematically minded people who might review this exchange to see if I am indeed misguided and correct me. Even if they can’t explain it to me in a way I understand, if there are others telling me I am wrong I will shrink away in shame and no longer respond. But I would really like to hear from someone who acknowledges my actual example with 1/3 and 2/3 and at least attempts to explain how the Kleene Recursion theorem use isn’t about the consumption of digits by the adder (but instead, say, about all programs structured to output digits, no matter their inputs, or some correction like that).

Unfortunately, your account of my argument via Kleene is not accurate. Let me try to explain it a little more fully. It goes like this: suppose toward contradiction that there were a computable manner of taking as input any two programs

Pingback: Alan Turing submits "On Computable Numbers" for publication - Design History Today

Is there some easy way to prove the inexistence of the adder program using only the Halting Theorem, or is Kleene Recursion Theorem really needed here ?

I eventually learnt that this non-existence (of an adder program) cannot be proven using the Halting problem only (unless we suppose that the program is not allowed to return developments like 0.999… for 1): it is of intermediary difficulty between computability and the Halting problem (the keyword to make it precise is “PA degrees”).

I wrote an article (in french) about this problem: https://lipsum.dev/2025-05-1-impossible-informatique-theorique/