Consider the real numbers $\newcommand\R{\mathbb{R}}\R$ and the complex numbers $\newcommand\C{\mathbb{C}}\C$ and the question of whether these structures are interpretable in one another as fields.

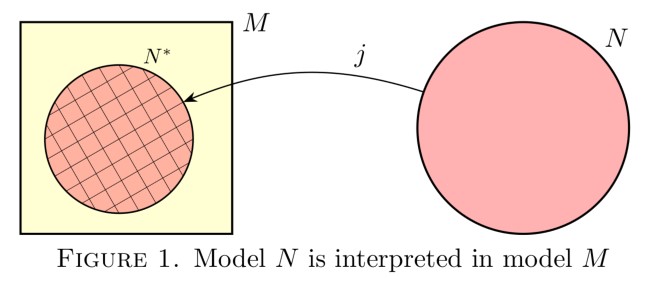

What does it mean to interpret one mathematical structure in another? It means to provide a definable copy of the first structure inside the second. Specifically, one provides a definable domain of $k$-tuples (not necessarily just a domain of points), definable interpretations of the atomic operations and relations on that domain, and a definable equivalence relation that is a congruence with respect to those operations and relations, such that the first structure is isomorphic to the quotient of this definable structure by that equivalence relation. All these definitions must be expressible in the language of the host structure.

One may proceed recursively to translate any assertion in the language of the interpreted structure into the language of the host structure, thereby enabling a complete discussion of the first structure purely in the language of the second.

For example, we can define a copy of the integer ring $\langle\mathbb{Z},+,\cdot\rangle$ inside the semiring of natural numbers $\langle\mathbb{N},+,\cdot\rangle$ by considering every integer as the equivalence class of a pair of natural numbers $(n,m)$ under the same-difference relation, by which $$(n,m)\equiv(u,v)\iff n-m=u-v\iff n+v=u+m.$$ The last condition uses only addition, so it is expressible in $\langle\mathbb{N},+,\cdot\rangle$. Integer addition and multiplication are defined on these pairs by $$(n,m)+(u,v)=(n+u,m+v)$$ $$(n,m)\cdot(u,v)=(nu+mv,nv+mu),$$ and these operations are well-defined with respect to same difference. So we have interpreted the integers in the natural numbers.
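As a concrete illustration of the pair construction, here is a minimal Python sketch, assuming we simply model natural numbers by nonnegative Python ints. The function names (`same_difference`, `int_add`, `int_mul`, `respects_same_difference`) are hypothetical and chosen for this example only. The brute-force check tests on small pairs that the operations send equivalent inputs to equivalent outputs, i.e. that same difference is a congruence; it is a sanity check, not a proof, and it says nothing about definability beyond the fact that `same_difference` uses only addition.

```python
from itertools import product

def same_difference(x, y):
    """(n, m) ≡ (u, v) iff n + v == u + m: a condition using only + on naturals."""
    (n, m), (u, v) = x, y
    return n + v == u + m

def int_add(x, y):
    # (n - m) + (u - v) = (n + u) - (m + v)
    (n, m), (u, v) = x, y
    return (n + u, m + v)

def int_mul(x, y):
    # (n - m)(u - v) = (nu + mv) - (nv + mu)
    (n, m), (u, v) = x, y
    return (n * u + m * v, n * v + m * u)

def to_int(x):
    # For checking only: the integer a pair represents (uses Python's subtraction).
    n, m = x
    return n - m

def respects_same_difference(bound=4):
    """Brute-force check, on pairs with entries below `bound`, that int_add and
    int_mul send equivalent inputs to equivalent outputs (a congruence)."""
    pairs = list(product(range(bound), repeat=2))
    for x, x2, y, y2 in product(pairs, repeat=4):
        if same_difference(x, x2) and same_difference(y, y2):
            if not same_difference(int_add(x, y), int_add(x2, y2)):
                return False
            if not same_difference(int_mul(x, y), int_mul(x2, y2)):
                return False
    return True

print(to_int(int_mul((2, 5), (7, 1))))  # (2 - 5) * (7 - 1); prints -18
```

Note that the interpreted integer is the whole equivalence class, so, for instance, $(3,5)$ and $(0,2)$ both represent $-2$.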

Similarly, the rational field $\newcommand\Q{\mathbb{Q}}\Q$ can be interpreted in the integers as the quotient field, whose elements can be thought of as integer pairs $(p,q)$ written more conveniently as fractions $\frac pq$, where $q\neq 0$, considered under the same-ratio relation

$$\frac pq\equiv\frac rs\qquad\iff\qquad ps=rq.$$

The field structure is now easy to define on these pairs by the familiar fractional arithmetic, which is well-defined with respect to that equivalence. Thus, we have provided a definable copy of the rational numbers inside the integers, an interpretation of $\Q$ in $\newcommand\Z{\mathbb{Z}}\Z$.
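The same pattern can be sketched in Python for the fraction construction, again as a hypothetical illustration rather than anything from the original argument: pairs $(p,q)$ of ints with $q\neq 0$, the same-ratio test $ps=rq$, and the familiar fractional addition and multiplication. The helper names are made up for this example, and the brute-force congruence check only covers small numerators and denominators.

```python
from itertools import product

def same_ratio(x, y):
    """p/q ≡ r/s iff p*s == r*q: a condition using only integer multiplication."""
    (p, q), (r, s) = x, y
    return p * s == r * q

def rat_add(x, y):
    # p/q + r/s = (ps + rq)/(qs); qs != 0 because q != 0 and s != 0
    (p, q), (r, s) = x, y
    return (p * s + r * q, q * s)

def rat_mul(x, y):
    # (p/q)(r/s) = (pr)/(qs)
    (p, q), (r, s) = x, y
    return (p * r, q * s)

def respects_same_ratio(bound=2):
    """Brute-force check on pairs with entries in [-bound, bound] (nonzero
    denominator) that rat_add and rat_mul respect same ratio."""
    values = range(-bound, bound + 1)
    pairs = [(p, q) for p, q in product(values, repeat=2) if q != 0]
    for x, x2, y, y2 in product(pairs, repeat=4):
        if same_ratio(x, x2) and same_ratio(y, y2):
            if not same_ratio(rat_add(x, y), rat_add(x2, y2)):
                return False
            if not same_ratio(rat_mul(x, y), rat_mul(x2, y2)):
                return False
    return True

print(rat_add((1, 2), (1, 3)))  # 1/2 + 1/3; prints (5, 6)
```

The domain $\{(p,q) : q\neq 0\}$ is itself definable in the integers, and it is closed under both operations, since a product of nonzero denominators is nonzero.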

The complex field $\C$ is of course interpretable in the real field $\R$ by considering the complex number $a+bi$ as represented by the real number pair $(a,b)$, and defining the operations on these pairs in a way that obeys the expected complex arithmetic. $$(a,b)+(c,d) =(a+c,b+d)$$ $$(a,b)\cdot(c,d)=(ac-bd,ad+bc)$$ Thus, we interpret the complex number field $\C$ inside the real field $\R$.
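Here is a small Python sketch of these two formulas, with real pairs modeled by Python numbers (an assumption for illustration; the function names are hypothetical). In this interpretation the domain is all of $\R^2$, so $k=2$, and the equivalence relation is just equality, making the quotient trivial. The check compares the pair arithmetic with Python's built-in `complex` type on an example with integer entries, where floating-point arithmetic is exact.

```python
def c_add(x, y):
    # (a, b) + (c, d) = (a + c, b + d)
    (a, b), (c, d) = x, y
    return (a + c, b + d)

def c_mul(x, y):
    # (a, b) * (c, d) = (ac - bd, ad + bc)
    (a, b), (c, d) = x, y
    return (a * c - b * d, a * d + b * c)

i = (0, 1)
print(c_mul(i, i))  # prints (-1, 0), i.e. i^2 = -1

# Agreement with Python's built-in complex numbers: (1 + 2i)(3 - i) = 5 + 5i
print(complex(*c_mul((1, 2), (3, -1))) == complex(1, 2) * complex(3, -1))  # prints True
```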

Question. What about an interpretation in the converse direction? Can we interpret $\R$ in $\C$?

Although the real numbers can of course be viewed as a subfield of the complex numbers $$\R\subset\C,$$ this by itself doesn't constitute an interpretation unless that subfield is definable. And in fact, $\R$ is not a definable subset of $\C$: there is no purely field-theoretic property $\varphi(x)$, expressible in the language of fields, that holds in $\C$ of all and only the real numbers $x$. But more is true. Not only is $\R$ not definable in $\C$ as a subfield, we cannot even define a copy of $\R$ in $\C$ in the language of fields. That is, we cannot interpret $\R$ in $\C$ at all in the language of fields.

Theorem. As fields, the real numbers $\R$ are not interpretable in the complex numbers $\C$.

We can of course interpret the real numbers $\R$ in a structure slightly expanding $\C$ beyond its field structure. For example, if we consider not merely $\langle\C,+,\cdot\rangle$ but add the conjugation operation $\langle\C,+,\cdot,z\mapsto\bar z\rangle$, then we can identify the reals as the fixed-points of conjugation $z=\bar z$. Or if we add the real-part or imaginary-part operators, making the coordinate structure of the complex plane available, then we can of course define the real numbers in $\C$ as those complex numbers with no imaginary part. The point of the theorem is that in the pure language of fields, we cannot define the real subfield nor can we even define a copy of the real numbers in $\C$ as any kind of definable quotient structure.

The theorem is a standard observation, well known to model theorists, who often like to prove it using sophisticated methods such as stability theory. From that point of view, the main issue is that the order on the real numbers is definable from the real field structure, but the theory of algebraically closed fields is too stable to define an order like that: it is $\omega$-stable, and a stable theory cannot interpret an infinite linear order.

But I would like to give a comparatively elementary proof of the theorem, which doesn't require knowledge of stability theory. After a conversation this past weekend with Jonathan Pila, Boris Zilber and Alex Wilkie over lunch and coffee breaks at the Robin Gandy conference, here is such an elementary proof. It is based only on knowledge concerning the enormous number of automorphisms of $\C$, a consequence of the categoricity of the complex field, which itself follows from the fact that algebraically closed fields of a given characteristic are determined by their transcendence degree over their prime subfield. It follows that any two transcendental elements of $\C$ are automorphic images of one another, and indeed, for any element $z\in\C$, any two complex numbers transcendental over $\Q(z)$ are automorphic in $\C$ by an automorphism fixing $z$.

Proof of the theorem. Suppose that we could interpret the real field $\R$ inside the complex field $\C$. So we would define a domain of $k$-tuples $R\subseteq\C^k$ with an equivalence relation $\simeq$ on it, and operations of addition and multiplication on the equivalence classes, such that the real field was isomorphic to the resulting quotient structure $R/\simeq$. There is absolutely no requirement that this structure is a submodel of $\C$ in any sense, although that would of course be allowed if possible. The $+$ and $\times$ of the definable copy of $\R$ in $\C$ might be totally strange new operations defined on those equivalence classes. The definitions altogether may involve finitely many parameters $\vec p=(p_1,\ldots,p_n)$, which we now fix.

As we mentioned, the complex number field $\C$ has an enormous number of automorphisms, and indeed, any two $k$-tuples $\vec x$ and $\vec y$ that satisfy the same polynomial equations over $\Q(\vec p)$ are automorphic by an automorphism fixing $\vec p$. In particular, there are only countably many orbits of $k$-tuples under the automorphisms of $\C$ fixing $\vec p$. This is because each orbit is determined by the set of polynomials in $\Q(\vec p)[x_1,\ldots,x_k]$ vanishing at its members; that set is an ideal in a countable Noetherian ring, hence finitely generated, and a countable ring has only countably many finite subsets. Since there are uncountably many real numbers, and hence uncountably many $\simeq$-classes in $R$, there must be two $\simeq$-inequivalent $k$-tuples in the domain $R$ lying in the same orbit, that is, automorphic images of one another by an automorphism $\pi:\C\to\C$ fixing the parameters $\vec p$. Since $\pi$ fixes the parameters of the definitions, it maps $R$ onto $R$ and respects the equivalence relation and the definitions of addition and multiplication on $R/\simeq$. Therefore, $\pi$ induces an automorphism of the real field $\R$, which is nontrivial precisely because $\pi$ takes an element of one $\simeq$-equivalence class to an element of another.

The proof is now completed by the observation that the real field $\langle\R,+,\cdot\rangle$ is rigid; it has no nontrivial automorphisms. This is because the order is definable (the positive numbers are precisely the nonzero squares), so every automorphism preserves it; every rational number is fixed by any automorphism, since the rationals are generated from $1$ by the field operations; and every real number is determined by its cut in the rationals, which an order-preserving map fixing the rationals must preserve. So there can be no nontrivial automorphism of $\R$, and we have a contradiction. So $\R$ is not interpretable in $\C$. $\Box$
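The final step uses the fact that a real number $x$ is pinned down by its cut $\{q\in\Q : q<x\}$. The following Python sketch is only an illustration of that Dedekind-cut idea, not a proof of rigidity. It bisects an interval of rationals using nothing but the predicate "$q<x$" and so narrows $x$ down to any desired precision. The names `squeeze` and `below_sqrt2` are hypothetical. As an example we take $x=\sqrt2$, whose cut can be decided with rational arithmetic alone.

```python
from fractions import Fraction

def squeeze(below, lo, hi, steps):
    """Bisect [lo, hi] using only the cut predicate below(q), meaning q < x.
    Invariant: below(lo) is true and below(hi) is false."""
    for _ in range(steps):
        mid = (lo + hi) / 2
        if below(mid):
            lo = mid
        else:
            hi = mid
    return lo, hi

def below_sqrt2(q):
    # The cut of sqrt(2): q < sqrt(2) iff q < 0 or q*q < 2 (rational arithmetic only)
    return q < 0 or q * q < 2

lo, hi = squeeze(below_sqrt2, Fraction(1), Fraction(2), 30)
print(float(lo), float(hi))  # two rationals within 2**-30 of each other around sqrt(2)
```

An automorphism of $\R$ fixes every rational and preserves the order, so it preserves this predicate for every real $x$, and the bisection shows that the predicate leaves no room for $x$ to move.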