We use a definite description when we make an assertion about an individual, referring to that individual by means of a property that uniquely picks them out. When I say, “the badly juggling clown in the subway car has a sad expression” I am referring to the clown by describing a property that uniquely determines the individual to whom I refer, namely, the clown that is badly juggling in the subway car, that clown, the one fulfilling this description. Definite descriptions in English typically involve the definite article “the” as a signal that one is picking out a unique object or individual.

If there had been no clown in the subway car, then my description wouldn’t have succeeded—there would have been no referent, no unique individual falling under the description. My reference would similarly have failed if there had been a clown, but no juggling clown, or if there had been a juggling clown, but juggling well instead of badly, or indeed if there had been many juggling clowns, perhaps both in the subway car and on the platform, but all of them juggling very well (or at least the ones in the subway car), for in this case there would have been no badly juggling clown in the subway car. My reference would also have failed, in a different way, if the subway car was packed full of badly juggling clowns, for in this case the description would not have succeeded in picking out just one of them. In each of these failing cases, there seems to be something wrong or insensible with my statement, “the badly juggling clown in the subway car has a sad expression.” What would be the meaning of this assertion if there was no such clown, if for example all the clowns were juggling very well?

Bertrand Russell emphasized that when one makes an assertion involving a definite description like this, then part of what is being asserted is that the definite description has succeeded. According to Russell, when I say, “the book I read last night was fascinating,” then I am asserting first of all that indeed there was a book that I read last night and exactly one such book, and furthermore that this book was fascinating. For Russell, the assertion “the king of France is bald” asserts first, that there is such a person as the king of France and second, that the person fitting that description is bald. Since there is no such person as the king of France, Russell takes the statement to be false.

Iota expressions

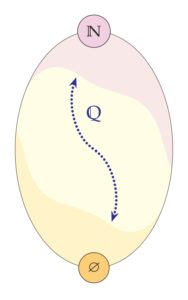

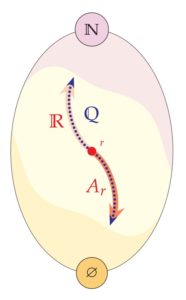

Let us introduce a certain notational formalism, arising in Russell and Whitehead (1910-1913), to assist with our analysis of definite descriptions, namely, the inverted iota notation $(℩x\,\varphi)$, which is a term denoting “the $x$ for which $\varphi$.” Such a reference succeeds in a model $M$ precisely when there is indeed a unique $x$ for which $\varphi$ holds,

$$M\models\exists!x\,\varphi,$$

or in other words, when

$$M\models\exists x\,\forall y\,(\varphi(y)\leftrightarrow y=x).$$

The value of the term $(℩x\,\varphi)$ interpreted in $M$ is this unique object fulfilling property $\varphi$. The use of iota expressions is perhaps the most meaningful when this property is indeed fulfilled, that is, when the reference succeeds, and we might naturally take them otherwise to be meaningless or undefined, a failed reference.
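As a minimal sketch of this success condition, assuming a finite domain so that uniqueness can be checked by exhaustive search, the value of an iota term might be computed as follows. The helper name `iota` and the convention of returning `None` for a failed reference are illustrative assumptions, not part of the formalism itself.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")

def iota(domain: Iterable[T], phi: Callable[[T], bool]) -> Optional[T]:
    """Return the unique element of `domain` satisfying `phi`, or None.

    None models a failed reference: either no witness or several witnesses.
    (Assumption: None is not itself an element of the domain.)
    """
    witnesses = [x for x in domain if phi(x)]
    return witnesses[0] if len(witnesses) == 1 else None

domain = range(10)
unique = iota(domain, lambda x: x * x == 49)   # exactly one witness
nobody = iota(domain, lambda x: x > 20)        # no witness: reference fails
crowd = iota(domain, lambda x: x % 2 == 0)     # many witnesses: reference fails
print(unique, nobody, crowd)
```

The two failing cases correspond to the two ways the clown description can fail: no badly juggling clown at all, or a subway car packed full of them.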

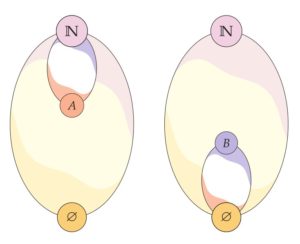

Because the iota expressions are not always meaningful in this way, their treatment in formal logic faces many of the same issues faced by a formal treatment of partial functions, functions that are not necessarily defined on the whole domain of discourse. According to the usual semantics for first-order logic, the interpretation of a function symbol is a function defined on the whole domain of the model. In other words, we interpret function symbols with total functions.

But partial functions commonly appear throughout mathematics, and we might naturally seek a formal treatment of them in first-order logic. One immediate response to this goal is simply to point out that partial functions are already fruitfully and easily treated in first-order logic by means of their graph relations $y=f(x)$. One can already express everything one would want to express about a partial function by reference to the graph relation: whether a given point is in the domain of the function, and if it is, what the value of the function is at that point, and so on. In this sense, first-order logic already has a robust treatment of partial functions.

In light of that response, the dispute here is not about the expressive power of the logic, but is rather entirely about the status of terms in the language, about whether we should allow partial functions to appear as terms. To be sure, mathematicians customarily form term expressions, such as $\sqrt{x}$ or $1/x$ in the context of the real numbers $\mathbb{R}$, which are properly defined only on a subset of the domain of discourse, and in this sense, allowing partial functions as terms can be seen as aligned with mathematical practice.

But the semantics are a surprisingly subtle matter. The main issue is that when a term is not defined, it may not be clear what assertions formed using that term mean. To illustrate the point, suppose that $e(x)$ is a term arising from a partial function or from an iota expression that is not universally defined in a model $M$, and suppose that $R$ is a unary relation that holds of every individual in the model. Do we want to say that $M\models\forall x\,R(e(x))$? Is it true that for every person the elephant they are riding is self-identical? Well, some people are not riding any elephant, and so perhaps we might say, no, that shouldn’t be true, since some values of $e(x)$ are not defined, and so this statement should be false. Perhaps someone else suggests that it should be true, because $R(e(x))$ will hold whenever $e(x)$ does succeed in its reference: in every case where someone is riding an elephant, it is self-identical. Or perhaps we want to say the whole assertion is meaningless? If we say it is meaningful but false, however, then it would seem we would want to say $M\models\neg\forall x\,R(e(x))$ and consequently also $M\models\exists x\,\neg R(e(x))$. In other words, in this case we are saying that in $M$ there is some $x$ such that $e(x)$ makes the always-true predicate $R$ false: there is a person such that the elephant they are riding is not self-identical. That seems weird and probably undesirable, since it only works because $e(x)$ must be undefined for this $x$. Furthermore, this situation seems to violate Russell’s injunction that assertions involving a definite description are committed to the success of that reference, for in this case, the truth of the assertion is based entirely on the failure of the reference $e(x)$. Ultimately we shall face such decisions in how to define the semantics in the logic of iota expressions and more generally in the first-order logic of partial functions as terms.

The strong semantics for iota expressions

Let me first describe what I call the strong semantics for the logic of iota expressions. Inspired by Russell’s theory of definite descriptions, we shall define the truth conditions for every assertion in the extended language allowing iota expressions $(℩x\,\varphi)$ as terms. Notice that $\varphi$ itself might already have iota expressions inside it; the formulas of the extended language can be graded in this way by the nesting rank of their iota expressions. The strong semantics I shall describe here also works more generally for the logic of partial functions.

For any model $M$ and valuation $[\vec a]$, we shall define the satisfaction relation $M\models\varphi[\vec a]$ and the term valuation $t^M[\vec a]$ recursively. In this logic, the interpretation of terms in a model will be merely partial, not necessarily defined in every case. Nevertheless, for a given iota expression $(℩x\,\varphi)$, if we already defined the semantics for $\varphi$, then the term $(℩x\,\varphi)^M[\vec a]$ is defined to be the unique individual $b$ in $M$, if there is one, for which $M\models\varphi[b,\vec a]$, where $b$ is the value of variable $x$ in this valuation; and if there is not a unique individual with this property, then this term is not defined. An atomic assertion of the form $Rt_1\cdots t_n$ is defined to be true in $M$ at $[\vec a]$ if all the terms $t_i^M[\vec a]$ are defined in $M$ and $R^M(t_1^M[\vec a],\ldots,t_n^M[\vec a])$ holds. And similarly an atomic assertion of the form $s=t$ is true in $M$ with valuation $[\vec a]$ if and only if $s^M[\vec a]$ and $t^M[\vec a]$ are both defined and they are equal. Notice that if a term expression is not defined, then every atomic assertion it appears in will be false. Thus, in the atomic case we have implemented Russell’s theory of definite descriptions.

We now simply extend the satisfaction relation recursively in the usual way through Boolean connectives and quantifiers. That is, the model satisfies a conjunction $M\models(\varphi\wedge\psi)[\vec a]$ just in case it satisfies both of them, $M\models\varphi[\vec a]$ and $M\models\psi[\vec a]$; it satisfies a negation $M\models\neg\varphi[\vec a]$ if and only if it fails to satisfy $\varphi$, and so on as usual with the other logical connectives. For quantifiers, $M\models\forall x\,\varphi[\vec a]$ if and only if $M\models\varphi[b,\vec a]$ for every individual $b$ in $M$, using the valuation assigning value $b$ to variable $x$; and similarly for the existential quantifier.

The strong semantics in effect combine Russell’s treatment of definite descriptions in the case of atomic assertions with Tarski’s disquotational theory to extend the truth conditions recursively to complex assertions. The strong semantics are complete: every assertion $\varphi$ or its negation $\neg\varphi$ will be true in $M$ at any valuation of the free variables, because if $\varphi$ is not true in $M$ at $[\vec a]$, then by definition, $\neg\varphi$ will be declared true. In particular, exactly one of the sentences $\varphi$, $\neg\varphi$ will be true, even if they involve definite descriptions that do not refer.
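The atomic clause and the completeness remark can be illustrated with a small sketch. It assumes a finite domain and models an undefined term by `None`; the names `iota`, `atomic`, `is_bald`, and `king` are hypothetical choices made only for this illustration.

```python
def iota(domain, phi):
    """Unique witness of phi in the domain, or None for a failed reference."""
    witnesses = [x for x in domain if phi(x)]
    return witnesses[0] if len(witnesses) == 1 else None

def atomic(rel, *terms):
    """Strong semantics, atomic case: true only if every term is defined
    and the relation holds of the resulting values."""
    return all(t is not None for t in terms) and rel(*terms)

domain = range(1, 11)
is_bald = lambda x: x in {2, 3}          # an arbitrary unary relation
king = iota(domain, lambda x: x > 100)   # no witness, so the reference fails

bald = atomic(is_bald, king)             # atomic assertion with a failed term
not_bald = not atomic(is_bald, king)     # the Tarskian negation clause
self_identical = atomic(lambda a, b: a == b, king, king)
print(bald, not_bald, self_identical)
```

Here the atomic assertion is false, its negation is declared true by the ordinary recursion, and even the identity of the failed term with itself comes out false.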

No new expressive power

The principal observation to be made initially about the logic of iota expressions is that they offer no new expressive power to our language. Every assertion that can be made in the language with iota expressions can be made equivalently without them. In short, iota expressions are logically eliminable.

Theorem. Every assertion in the language with iota expressions is logically equivalent in the strong semantics to an assertion in the original language.

Proof. We prove this by induction on formulas. Of course, the claim is already true for all assertions in the original language, and since the strong semantics in the iota expression logic follow the same recursion for Boolean connectives and quantifiers as in the original language, it suffices to remove iota expressions from atomic assertions $Rt_1\cdots t_n$, where some of the terms involve iota expressions. Consider for simplicity the case of $R(℩x\,\varphi)$, where $\varphi$ is a formula in the original language with no iota expressions. This assertion is equivalent to the assertion that $(℩x\,\varphi)$ exists, which we expressed above as $\exists!x\,\varphi$, together with the assertion that $R$ holds of that value, which can be expressed as $\forall x\,(\varphi(x)\to Rx)$. The argument is similar if additional functions were applied on top of the iota term or if there were multiple iota terms: one simply says that the overall atomic assertion is true if each of the iota expressions appearing in it is defined and the resulting values fulfill the atomic assertion. By this means, the iota terms can be systematically eliminated from the atomic assertions in which they appear, and therefore every assertion is equivalent to an assertion not using any iota expression.
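The key step of the proof can be checked by brute force in a small case. The following sketch assumes a three-element domain and enumerates every interpretation of two unary predicates, comparing the strong-semantics truth value of $R(℩x\,\varphi)$ with the iota-free translation "there is a unique $\varphi$, and every $\varphi$ is an $R$". The function names are hypothetical, and this is only a sanity check on one domain size, not a proof.

```python
from itertools import product

domain = [0, 1, 2]

def iota(phi):
    """Unique element with phi[x] true, or None if the reference fails."""
    witnesses = [x for x in domain if phi[x]]
    return witnesses[0] if len(witnesses) == 1 else None

def strong_atomic(R, phi):
    """Strong-semantics truth value of the atomic assertion R(iota x. phi)."""
    t = iota(phi)
    return t is not None and R[t]

def eliminated(R, phi):
    """Iota-free translation: exists-unique x phi, and all x (phi -> R)."""
    exists_unique = sum(phi[x] for x in domain) == 1
    all_phi_are_R = all((not phi[x]) or R[x] for x in domain)
    return exists_unique and all_phi_are_R

checked = 0
for phi_bits, R_bits in product(product([False, True], repeat=3), repeat=2):
    phi = dict(zip(domain, phi_bits))
    R = dict(zip(domain, R_bits))
    assert strong_atomic(R, phi) == eliminated(R, phi)
    checked += 1
print(checked, "interpretations checked")
```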

In light of this theorem, perhaps there is little at stake in the dispute about whether to augment our language with iota expressions, since they add no formal expressive power.

Criticism of the strong semantics

I should like to make several criticisms of the strong semantics concerning how well it fulfills the goal of providing a logic of iota expressions based on Russell’s theory of definite descriptions.

Does not actually fulfill the Russellian theory. We were led to the strong semantics by Russell’s theory of definite descriptions, and many logicians take the strong semantics as a direct implementation of Russell’s theory. But is this right? To my way of thinking, at the very heart of Russell’s theory is the idea that an assertion involving reference by definite description carries an implicit commitment that those references are successful. Let us call this the implicit commitment to reference, and I should like to consider this idea on its own, apart from whatever Russell’s view might have been. My criticism here is that the strong semantics does not actually realize the implicit commitment to reference for all assertions.

It does fulfill the implicit commitment to reference for atomic assertions, to be sure, for we defined that an atomic assertion $R(℩x\,\varphi)$ involving a definite description term is true if and only if the term successfully refers and the referent falls under the predicate $R$. The atomic truth conditions thus implement exactly what the Russellian implicit commitment to reference requires.

When we extend the semantics through the Tarskian compositional recursion, however, we lose that feature. Namely, if an atomic assertion $R(℩x\,\varphi)$ is false because the definite description term has failed to refer successfully, then the Tarskian recursion declares the biconditional $R(℩x\,\varphi)\leftrightarrow S(℩x\,\varphi)$ to be true, the biconditional of two false assertions, and $\neg R(℩x\,\varphi)$ and $R(℩x\,\varphi)\to S(℩x\,\varphi)$ are similarly declared true in this case for the strong semantics. In all three cases, we have a sentence with a failed definite description and yet we have declared it true anyway, violating the implicit commitment to reference.

The tension between Russell and Tarski. The issue reveals an inherent tension between Russell and Tarski, a tension between a fully realized implicit commitment to reference and the compositional theory of truth. Specifically, the examples above show that if we follow the Russellian theory of definite descriptions for atomic assertions and then apply the Tarskian recursion, we will inevitably violate the implicit commitment to reference for some compound assertions. In other words, to require that every true assertion making reference by definite description commits to the success of those references simply does not obey the Tarski recursion. In short, the implicit commitment to reference is not compositional.

Does not respect stipulative definitions. The strong semantics does not respect stipulative definitions in the sense that the truth of an assertion is not always preserved when replacing a defined predicate by its definition.

Consider the ring of integers, for example, and the sentence “the largest prime number is odd.” We could formalize this as an atomic assertion $O(℩x\,\varphi)$, where $O$ is the oddness predicate, with $Ox$ expressing that $x$ is odd, and $\varphi$ expresses that $x$ is a largest prime number. Since there is no largest prime number in the integers, the definite description has failed, and so on the strong semantics the sentence $O(℩x\,\varphi)$ is false in the integer ring.

But suppose that we had previously introduced the oddness predicate $Ox$ by stipulative definition over the ring of integers $\langle\mathbb{Z},+,\cdot,0,1\rangle$, in the plain language of rings, defining oddness via $Ox\leftrightarrow\neg\exists y\,(y+y=x)$. In the original language, therefore, the assertion “the largest prime is odd” would be expressed as $\neg\exists y\,(y+y=(℩x\,\varphi))$. Because the iota expression does not succeed, the atomic assertion $y+y=(℩x\,\varphi)$ is false for every particular value of $y$, and so the existential is false, making the negation true. In this formulation, therefore, “the largest prime is odd” is true! So although the sentence was false in the definitional expansion using the atomic oddness predicate, it became true when we replaced that predicate by its definition, and so the strong semantics does not respect the replacement of a stipulatively defined predicate by its definition.

Some philosophical treatments of these kinds of cases focus on ambiguity and scope. One can introduce lambda expressions $\langle\lambda x.\varphi\rangle$, for example, expressing property $\varphi$ in a manner to be treated as atomic for the purpose of applying the Russellian theory, so that $\langle\lambda x.\varphi\rangle(℩x\,\psi)$ expresses that $(℩x\,\psi)$ is defined and $\varphi((℩x\,\psi))$ holds. In simple cases, these lambda expressions amount to stipulative definitions, while in more sophisticated instances, subtle levels of dependence are expressed when $\varphi$ has other free variables not to be treated as atomic in this way by this expression. Nevertheless, the combined lambda/iota expressions are fully eliminable, as every assertion in that expanded language is equivalently expressible in the original language. To my way of thinking, the central problem here is not one of missing notation, precisely because we can already express the intended meaning without any lambda or iota expressions at all. Rather, the fundamental phenomenon is the fact that the strong semantics violates the expected logical principles for the treatment of stipulative definitions.

Does not respect logical equivalence. The strong semantics of iota expressions does not respect logical equivalence. In the integer ring $\mathbb{Z}$, there are two equivalent ways to express that a number is odd, namely,

$$\text{Odd}_0(x)\ =\ \neg\exists y\,(y+y=x)\qquad\text{and}\qquad\text{Odd}_1(x)\ =\ \exists y\,(y+y+1=x).$$

These two predicates are equivalent in the integers,

$$\mathbb{Z}\models\forall x\,\bigl(\text{Odd}_0(x)\leftrightarrow\text{Odd}_1(x)\bigr).$$

And yet, if we assert “the largest prime number is odd” using these two equivalent formulations, either as $\text{Odd}_0(℩x\,\varphi)$ or as $\text{Odd}_1(℩x\,\varphi)$, with $\varphi$ expressing that $x$ is a largest prime number, then we get opposite truth values. The first formulation is true, as we observed above, but the second is false, because the atomic assertion $y+y+1=(℩x\,\varphi)$ is false for every particular $y$ and so the existential fails. So this is a case where the substitution instance of an iota expression into logically equivalent assertions yields different truth values.
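The same phenomenon can be reproduced in a finite setting. The sketch below makes two substitutions, both assumptions made purely so that the quantifiers can be evaluated exhaustively: it works in the finite ring $\mathbb{Z}/6\mathbb{Z}$ rather than the integers, and it uses the failing description "the $x$ with $x+x=0$" (which has two witnesses there) in place of "the largest prime number."

```python
N = 6
ring = range(N)  # Z/6Z, a finite stand-in for the integers (assumption)

def iota(phi):
    """Unique witness of phi in the ring, or None for a failed reference."""
    witnesses = [x for x in ring if phi(x)]
    return witnesses[0] if len(witnesses) == 1 else None

def eq(s, t):
    """Strong-semantics equality: false when either side is undefined."""
    return s is not None and t is not None and s == t

def odd0(t):
    """not exists y (y + y = t)"""
    return not any(eq((y + y) % N, t) for y in ring)

def odd1(t):
    """exists y (y + y + 1 = t)"""
    return any(eq((y + y + 1) % N, t) for y in ring)

# The two predicates agree on every actual element of the ring.
agree = all(odd0(x) == odd1(x) for x in ring)

# A failing description: x + x = 0 holds of both 0 and 3.
t = iota(lambda x: (x + x) % N == 0)
print(agree, t, odd0(t), odd1(t))
```

Although the two predicates agree at every element, substituting the failed term yields true for the negated-existential form and false for the existential form, exactly the divergence described in the text.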

Does not respect instantiation. The strong semantics for iota expressions does not respect universal instantiation. Every structure will declare some instances of $[\forall x\,\varphi(x)]\to\varphi(t)$ false. Specifically, in any structure $\forall x\,(x=x)$ is true, but if $t$ is a failing iota expression, such as $(℩x\ x\neq x)$, then $t=t$ is false, because this is an atomic assertion with a failing definite description. So we have a false instantiation of a true universal.

The weak semantics for iota expressions

In light of these criticisms, let me describe an alternative semantics for the logic of definite descriptions, a more tentative and hesitant semantics, yet in many respects both reasonable and appealing. Namely, the weak semantics takes the line that in order for an assertion about an individual specified by definite description to be meaningful, the description must in fact succeed in its reference—otherwise it is not meaningful. On this account, for example, the sentence “the largest prime number is odd” is meaningless in the integer ring, without a truth value, and similarly with any further sentence built from this assertion. On the weak semantics, the assertion fails to express a proposition because there is no largest prime number in the integers.

On the weak semantics, we first make a judgement about whether an assertion is meaningful before stating whether it is true or not. As before, an iota expression $(℩x\,\varphi)$ is undefined in a model at a valuation if there is not a unique fulfilling instance (using the weak semantics recursively in this evaluation). In the atomic case of satisfaction $M\models Rt_1\cdots t_n[\vec a]$, the weak semantics judges the assertion to be meaningful only if all of the term expressions are defined, and in this case the assertion is true or false according to whether $R^M(t_1^M[\vec a],\ldots,t_n^M[\vec a])$ holds. If one or more of the terms is not defined, then the assertion is judged meaningless. Similarly, in the disquotational recursion through the Boolean connectives, we say that $M\models(\varphi\wedge\psi)[\vec a]$ is meaningful only when both conjuncts are meaningful, and true exactly when both are true. And similarly for $\varphi\vee\psi$, $\neg\varphi$, $\varphi\to\psi$, and $\varphi\leftrightarrow\psi$. In each case, with the weak semantics we require all of the constituent subformulas to be meaningful in order for the whole expression to be judged meaningful, whether or not the truth values of those assertions could affect the overall truth value.

The compositional theory of truth implicitly defines a well-founded relation on the instances of satisfaction $M\models\varphi[\vec a]$, with each instance reducing via the Tarski recursion to certain relevant earlier instances. In the weak semantics, an assertion is meaningful if and only if every iota term valuation arising hereditarily in the recursive calculation of the truth values is successfully defined.

The choice to use the weak semantics can be understood as a commitment to use robust definite descriptions that succeed in their reference. For meaningful assertions, one should ensure that all the relevant definite descriptions succeed, such as by using robust descriptions with default values in cases of failure, rather than relying on the semantical rules to paper over or fix up the effects of sloppy failed references. Nevertheless, the weak and the strong semantics agree on the truth value of any assertion found to be meaningful. In this sense, being true in the weak semantics is simply a tidier way to be true, one without sloppy failures of reference.

The weak semantics can be seen as a nonclassical logic in that not all instances of the law of excluded middle $\varphi\vee\neg\varphi$ will be declared true, since if $\varphi$ is not meaningful then neither will be this disjunction. But the logic is not intuitionist, since not all instances of $\neg\neg(\varphi\vee\neg\varphi)$ are true. Meanwhile, the weak semantics are classical in the sense that in every meaningful instance, the law of excluded middle holds, as well as double-negation elimination.
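A minimal sketch of the weak semantics for the propositional connectives, assuming a three-valued encoding in which `None` stands for "meaningless" and an undefined term is also represented by `None`. The function names and the sample predicate are hypothetical.

```python
def w_atomic(rel, *terms):
    """Meaningless (None) if any term is undefined, else the ordinary truth value."""
    if any(t is None for t in terms):
        return None
    return bool(rel(*terms))

def w_not(p):
    return None if p is None else (not p)

def w_or(p, q):
    # Both disjuncts must be meaningful, even if one alone would settle the value.
    return None if p is None or q is None else (p or q)

is_odd = lambda n: n % 2 == 1
failed = None   # stands for a failed description, e.g. "the largest prime"
three = 3       # stands for a successful description, e.g. "the least odd prime"

# Excluded middle, instantiated with a failed and with a successful description.
lem_failed = w_or(w_atomic(is_odd, failed), w_not(w_atomic(is_odd, failed)))
lem_three = w_or(w_atomic(is_odd, three), w_not(w_atomic(is_odd, three)))
print(lem_failed, lem_three)
```

The instance of excluded middle built on the failed description receives no truth value at all, while the instance built on a successful description is true, as in classical logic.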

The natural semantics for iota expressions

The natural semantics is a somewhat less hesitant semantics guided by the idea that an assertion with iota expressions or partially defined terms is meaningful when sufficiently many of those terms succeed in their reference to determine the truth value. In this semantics, we take a conjunction $\varphi\wedge\psi$ as meaningfully true if both $\varphi$ and $\psi$ are meaningfully true, and meaningfully false if at least one of them is meaningfully false. A disjunction $\varphi\vee\psi$ is meaningfully true if at least one of them is meaningfully true, and meaningfully false if both are meaningful and false. A negation $\neg\varphi$ is meaningful exactly when $\varphi$ is, and with the opposite truth value. In the natural semantics an implication $\varphi\to\psi$ is meaningfully true if either $\varphi$ is meaningful and false or $\psi$ is meaningful and true, and is meaningfully false if $\varphi$ is meaningfully true and $\psi$ is meaningfully false. This formulation of the semantics enables a robust treatment of conditional assertions, such as in the ordered real field $\langle\mathbb{R},+,\cdot,0,1,<\rangle$, where we might take division as a partial function, defined as long as the denominator is nonzero. In the natural semantics, the assertion

$$\forall x\,\bigl(0<x\to 0<\tfrac{1}{x}\bigr)$$

comes out meaningful and true in the reals, whereas it is not meaningful in the weak semantics because $\frac{1}{0}$ is not defined. That verdict might seem rather too weak, because the undefined case arises only when it is already ruled out by the antecedent. A biconditional $\varphi\leftrightarrow\psi$ is meaningful if and only if both $\varphi$ and $\psi$ are meaningful, and it is true if these have the same truth value. In the natural semantics, an existential assertion $\exists x\,\varphi$ is judged meaningful and true if there is an instance that is meaningful and true, and meaningfully false if every instance is meaningful but false. A universal assertion $\forall x\,\varphi$ is meaningfully true if every instance is meaningful and true, and meaningfully false if at least one instance is meaningfully false.
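The contrast between the two semantics on a guarded division can be sketched as follows, for the illustrative assertion $\forall x\,(0<x\to 0<1/x)$. Since one cannot quantify over all the reals by enumeration, the sketch assumes a small finite sample of rationals (including $0$) as a stand-in domain. As before, `None` encodes "undefined" or "meaningless," and all function names are hypothetical.

```python
from fractions import Fraction

def inv(x):
    """Partial reciprocal: undefined (None) at zero."""
    return None if x == 0 else 1 / x

def less(s, t):
    """Atomic s < t: meaningless if either term is undefined."""
    return None if s is None or t is None else s < t

def n_implies(p, q):
    """Natural semantics: a false antecedent or true consequent suffices."""
    if p is False or q is True:
        return True
    if p is True and q is False:
        return False
    return None

def w_implies(p, q):
    """Weak semantics: both sides must be meaningful."""
    return None if p is None or q is None else ((not p) or q)

def n_forall(values):
    values = list(values)
    if any(v is False for v in values):
        return False
    return True if all(v is True for v in values) else None

def w_forall(values):
    values = list(values)
    return None if any(v is None for v in values) else all(values)

sample = [Fraction(n, 2) for n in range(-4, 5)]  # -2, -3/2, ..., 0, ..., 2
zero = Fraction(0)
natural = n_forall(n_implies(less(zero, x), less(zero, inv(x))) for x in sample)
weak = w_forall(w_implies(less(zero, x), less(zero, inv(x))) for x in sample)
print(natural, weak)
```

On this sample the natural semantics judges the assertion meaningfully true, because the undefined instance at $x=0$ has a meaningfully false antecedent, whereas the weak semantics declines to assign any truth value.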

Still no new expressive power

The weak semantics and the natural semantics both address some of the weird aspects of the strong semantics by addressing head-on and denying the claim that assertions made about nonexistent individuals are meaningful. This could be refreshing to someone put off by any sort of claim made about the king of France or the largest prime number in the integers—such a person might prefer to regard these claims as not meaningful. And yet, just as with the strong semantics, the weak semantics and the natural semantics offer no new expressive power to the logic.

Theorem. The language of first-order logic with iota expressions in either the weak semantics or the natural semantics has no new expressive power—for every assertion in the language with iota expressions, there are formulas in the original language expressing that the given formula is meaningful, respectively in the two semantics, and others expressing that it is meaningful and true.

Proof. This can be proved by induction on formulas, similarly to the proof of the no-new-expressive-power theorem for the strong semantics. The key point is that the question whether a given instance of iota expression $(℩x\,\psi)$ succeeds in its reference or not is expressible by means of $\exists!x\,\psi$ without using any additional iota terms not already in $\psi$. By augmenting any atomic assertion in which such an iota expression appears with the assertions that the references have succeeded, we thereby express the meaningfulness of the atomic expression. Similarly, the definition of what it means to be meaningful and what it means to be true in the weak semantics or in the natural semantics was itself defined recursively, and so in this way we can systematically eliminate the iota expressions and simultaneously create formulas asserting the meaningfulness and the truth of any given assertion in the expanded iota language.

Let me next prove the senses in which both the weak and natural semantics survive the criticisms I had mentioned earlier for the strong semantics.

Theorem.

1. Both the weak and natural semantics fulfill Russell’s theory of definite descriptions: if an assertion is true, then every definite description relevant for this was successful.

2. Both the weak and natural semantics respect Tarski’s compositional theory of truth: if an assertion is meaningful, then its truth value is determined by the Tarski recursion.

3. Both the weak and natural semantics respect stipulative definitions: replacing any defined predicate in a meaningful assertion by its definition, if meaningful, preserves the truth value.

4. Both the weak and natural semantics respect logical equivalence: if $\varphi(x)$ and $\psi(x)$ are logically equivalent ($x$ occurring freely in both) and $t$ is any term, then $\varphi(t)$ and $\psi(t)$ get the same truth judgement.

5. Both the weak and natural semantics respect universal instantiation: if $\forall x\,\varphi(x)$ and $\varphi(t)$ are both meaningful, then $[\forall x\,\varphi(x)]\to\varphi(t)$ is meaningful and true.

Proof. For statement (1), the notion of relevance here for the weak semantics is that of arising earlier in the well-founded recursive definition of truth, while in the natural semantics we are speaking of relevant instances sufficient for the truth calculation. In either semantics, for an assertion to be declared true, all the definite descriptions relevant for this fact must be successful. A truth judgement is never made on the basis of a failed reference.

Statement (2) is immediate, since both the weak and the natural semantics are defined in a compositional manner.

Statement (3) is proved by induction. If we have introduced predicate $R$ by stipulative definition $Rx\leftrightarrow\varphi(x)$, which is meaningful at every point of evaluation in the model, then whenever a term $t$ is defined, the predicate $Rt$ in the model will be meaningful and have the same truth value as $\varphi(t)$. So replacing $Rt$ with $\varphi(t)$ in any formula will give the same truth judgement.

For statement (4), suppose that $\varphi(x)$ and $\psi(x)$ are logically equivalent, meaning that $x$ occurs freely in both assertions and the model gives the same truth judgement to them at every point. If $t$ is not defined, then both $\varphi(t)$ and $\psi(t)$ are declared meaningless (this is why we need the free variable actually to occur in the formulas). If $t$ is defined, then by the assumption of logical equivalence, $\varphi(t)$ and $\psi(t)$ will get the same truth value.

Statement (5) follows immediately for the weak semantics, since if $\forall x\,\varphi(x)$ and $\varphi(t)$ are both meaningful, then in particular, if $x$ actually occurs freely in $\varphi$ then $t$ must be defined in the weak semantics, in which case $\varphi(t)$ will follow from $\forall x\,\varphi(x)$. In the natural semantics, if $\forall x\,\varphi(x)$ is meaningfully true, then also $\varphi(t)$ will be, and if it is meaningfully false, then the implication $[\forall x\,\varphi(x)]\to\varphi(t)$ is meaningfully true.

Deflationary philosophical conclusions

To my way of thinking, the principal philosophical conclusion to make in light of the no-new-expressive-power theorems is that there is nothing at stake in the debate about whether to add iota expressions to the logic or whether to use the strong or weak semantics. The debate is moot, and we can adopt a deflationary stance, because any proposition or idea that we might wish to express with iota expressions and definite descriptions in one or the other semantics can be expressed in the original language. Whether to use the expanded linguistic resources provided by iota expressions is ultimately a matter of mere convenience or logical aesthetics. If the logical feature or idea you wish to convey is more clearly or easily conveyed with iota expressions, then go to town! But in principle, they are eliminable.

Similarly, there is little at stake in the dispute between the weak and the strong semantics. In fact they agree on the truth judgements of all meaningful assertions. In light of this, there seems little reason not to proceed with the strong semantics, since it provides coherent truth values to the assertions lacking a truth value in the weak semantics. The criticisms I had mentioned may be outweighed simply by having a complete assignment of truth values. The question of whether assertions made about failed definite descriptions are actually meaningful can be answered by the reply: they are meaningful, because the strong semantics provide the meaning. But again, because every idea that can be expressed in these semantics can also be expressed without iota expressions, there is nothing at stake in this decision.

This blog post is adapted from my book-in-progress, Topics in Logic, an introduction to a wide selection of topics in logic for philosophers, mathematicians, and computer scientists.