My Oxford student Emma Palmer and I have been thinking about worldly cardinals and Gödel-Bernays GBC set theory, and we recently came to a new realization.

Namely, what I realized is that every worldly cardinal $\kappa$ admits a Gödel-Bernays structure, including the axiom of global choice. That is, if $\kappa$ is worldly, then there is a family $\mathcal{X}$ of sets so that $\langle V_\kappa,\in,\mathcal{X}\rangle$ is a model of Gödel-Bernays set theory GBC including global choice.

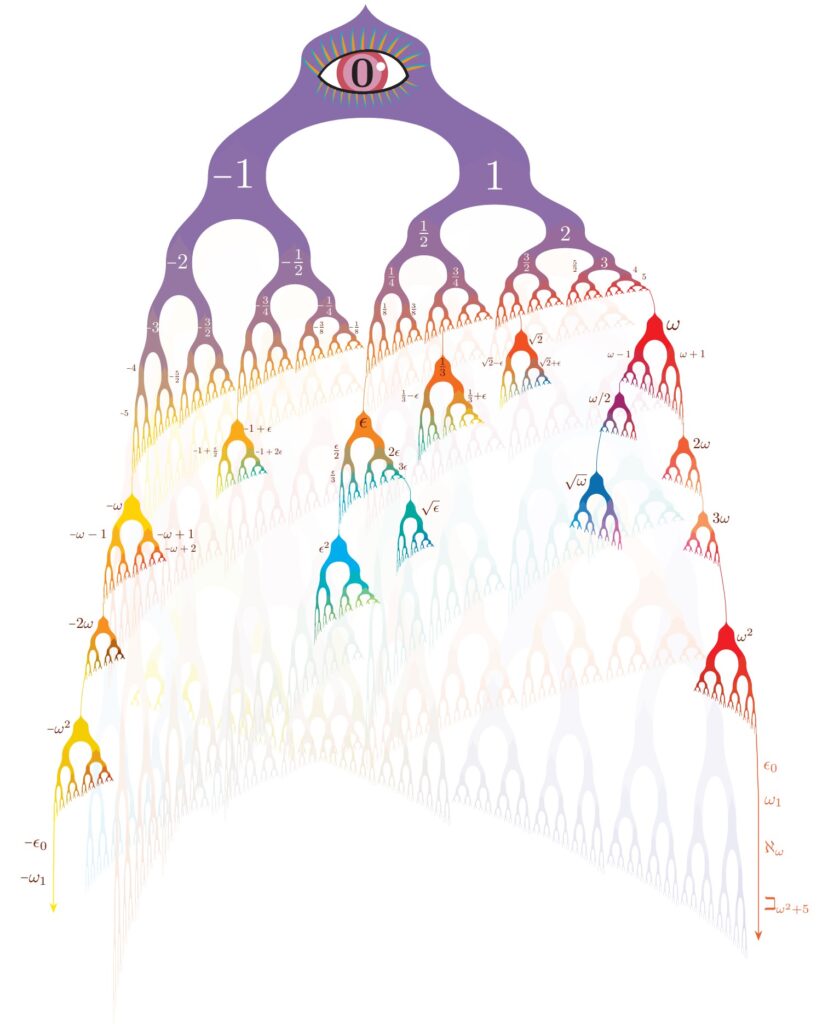

For background, it may be helpful to recall Zermelo’s famous 1930 quasi-categoricity result, showing that the inaccessible cardinals are precisely the cardinals $\kappa$ for which $V_\kappa$ is a model of second-order set theory $\text{ZFC}_2$.

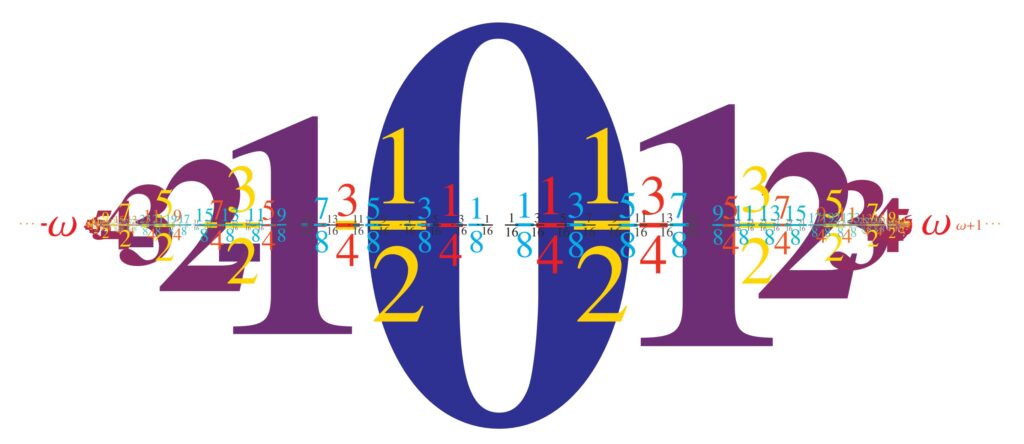

If one seeks only the first-order ZFC set theory in $V_\kappa$, however, then this is what it means to say that $\kappa$ is a worldly cardinal, a strictly weaker notion. That is, $\kappa$ is worldly if and only if $V_\kappa\models\text{ZFC}$. Every inaccessible cardinal is worldly, by Zermelo’s result. But more, every inaccessible $\kappa$ is a limit of worldly cardinals, and so there are many worldly cardinals that are not inaccessible. The least worldly cardinal, for example, has cofinality $\omega$. Indeed, the next worldly cardinal above any ordinal has cofinality $\omega$.

Meanwhile, to improve slightly on Zermelo, we can observe that if $\kappa$ is inaccessible, then $V_\kappa$ is a model of Kelley-Morse set theory when equipped with the full second-order complement of classes. That is, $\langle V_\kappa,\in,V_{\kappa+1}\rangle$ is a model of KM.

This is definitely not true when $\kappa$ is merely worldly and not inaccessible, however, for in this case $\langle V_\kappa,\in,V_{\kappa+1}\rangle$ is never a model of KM nor even GBC when $\kappa$ is singular. The reason is that the singularity of $\kappa$ would be revealed by a short cofinal sequence, which would be available in the full power set $V_{\kappa+1}$, and this would violate replacement.
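The failure of replacement can be spelled out in a few lines. The following is only a sketch, and the names $\gamma$ and $f$ are introduced here for illustration: $\gamma$ is the cofinality of the singular worldly cardinal $\kappa$ and $f$ is a cofinal map witnessing it.

```latex
% Sketch: why <V_kappa, in, V_{kappa+1}> fails class replacement when kappa is singular.
% Assumed notation (not in the original text): gamma = cf(kappa) < kappa, f a cofinal map.
\[
  f:\gamma\to\kappa \text{ cofinal},\quad \gamma<\kappa
  \;\Longrightarrow\;
  f\subseteq V_\kappa,\ \text{ so } f\in V_{\kappa+1}.
\]
% If replacement held for the class function f, its range on the set gamma would be a set:
\[
  f''\gamma\in V_\kappa
  \;\Longrightarrow\;
  \sup f''\gamma<\kappa,
\]
% which contradicts the cofinality of f:
\[
  \sup f''\gamma=\kappa .
\]
```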

So the question is:

Question. If $\kappa$ is worldly, then can we equip $V_\kappa$ with a suitable family $\mathcal{X}\subseteq V_{\kappa+1}$ of classes so that $\langle V_\kappa,\in,\mathcal{X}\rangle$ is a model of GBC?

The answer is Yes!

What I claim is that for every worldly cardinal $\kappa$, there is a definably generic well order $\triangleleft$ of $V_\kappa$, so that the subsets definable in $\langle V_\kappa,\in,\triangleleft\rangle$ make a model of GBC.

To see this, consider the class forcing notion $\mathbb{P}$ for adding a global well order $\triangleleft$, as $V_\kappa$ sees it. Conditions are well orders of some $V_\alpha$ for some $\alpha<\kappa$, ordered by end-extension, so that lower rank sets always precede higher rank sets in the resulting order.
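One way to write this forcing down formally is sketched below. The notation $\alpha_p$ for the height of a condition $p$ and the dense classes $D_\beta$ are names chosen here for illustration, and I read "end-extension" as saying that all new sets are placed above all old ones.

```latex
% Sketch of the forcing P, as computed in V_kappa.
% Assumed notation: each condition p is a (strict) well order of V_{alpha_p}.
\[
  \mathbb{P}=\{\,p : p \text{ is a well order of } V_{\alpha_p} \text{ for some } \alpha_p<\kappa\,\}
\]
% q is stronger than p when q end-extends p:
\[
  q\le p \iff \alpha_p\le\alpha_q,\quad
  q\cap(V_{\alpha_p}\times V_{\alpha_p})=p,\quad
  x \mathrel{q} y \text{ for all } x\in V_{\alpha_p},\ y\in V_{\alpha_q}\setminus V_{\alpha_p}.
\]
% Every height is reached: by choice in V_kappa, any p extends to a well order of V_beta.
\[
  D_\beta=\{\,p\in\mathbb{P} : \alpha_p\ge\beta\,\} \text{ is dense for each } \beta<\kappa .
\]
```

A filter meeting every $D_\beta$ has as its union a well order of all of $V_\kappa$, since end-extension keeps every initial segment fixed once it is decided.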

I shall prove that there is a well-order $\triangleleft$ that is generic with respect to dense sets definable in $\langle V_\kappa,\in\rangle$.

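For this, let us consider first the case where the worldly cardinal $\kappa$ has countable cofinality. In this case, we can find an increasing sequence $\langle\kappa_n : n<\omega\rangle$ cofinal in $\kappa$, such that each $\kappa_n$ is $\Sigma_n$-correct in $V_\kappa$, meaning $V_{\kappa_n}\prec_{\Sigma_n}V_\kappa$.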

In this case, we can build a definably generic filter $G$ for $\mathbb{P}$ in a sequence of stages. At stage $n$, we can find a well order up to $\kappa_n$ that meets all $\Sigma_n$-definable dense classes using parameters less than $\kappa_n$. The reason is that for any such definable dense set, we can meet it below $\kappa_n$ using the $\Sigma_n$-correctness of $\kappa_n$, and so by considering various parameters in turn, we can altogether handle all parameters below $\kappa_n$ using $\Sigma_n$ definitions. That is, the $n$th stage is itself an iteration of length $\kappa_n$, but it will meet all $\Sigma_n$-definable dense sets using parameters in $V_{\kappa_n}$.
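The single step that gets repeated within stage $n$ can be sketched as follows. The names $p$, $q$, $D$, $\varphi$ and $a$ are introduced here for illustration, with $\mathbb{P}$ the forcing for adding a global well order and $\kappa_n$ the $\Sigma_n$-correct cardinal of stage $n$. I assume $n\ge 2$, so that "$q$ is a condition extending $p$" does not push the displayed statement beyond $\Sigma_n$.

```latex
% Sketch: meeting one Sigma_n-definable dense class below kappa_n.
% Assumptions: D = { q in P : V_kappa |= phi(q,a) } is dense, phi is Sigma_n,
% the parameter a and the current condition p both lie in V_{kappa_n}, and n >= 2.
\[
  V_\kappa\models\exists q\,\bigl(q\in\mathbb{P}\ \wedge\ q\le p\ \wedge\ \varphi(q,a)\bigr)
  \qquad\text{(density of $D$).}
\]
% The statement is Sigma_n with parameters in V_{kappa_n}, so it reflects:
\[
  V_{\kappa_n}\prec_{\Sigma_n}V_\kappa
  \;\Longrightarrow\;
  V_{\kappa_n}\models\exists q\,\bigl(q\in\mathbb{P}\ \wedge\ q\le p\ \wedge\ \varphi(q,a)\bigr).
\]
% A witness found inside V_{kappa_n} is a genuine member of D, again by Sigma_n-correctness:
\[
  q\in V_{\kappa_n},\quad q\le p,\quad V_{\kappa_n}\models\varphi(q,a)
  \;\Longrightarrow\;
  V_\kappa\models\varphi(q,a),\ \text{ that is, } q\in D .
\]
```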

Next, we observe that the ultimate well-order of $V_\kappa$ that arises from this construction after all stages is fully definably generic, since any definition with arbitrary parameters in $V_\kappa$ is a $\Sigma_n$ definition with parameters in $V_{\kappa_n}$ for some large enough $n$, and so we get a definably generic well order $\triangleleft$. Therefore, the usual forcing argument shows that we get GBC in the resulting model $\langle V_\kappa,\in,\triangleleft\rangle$, as desired.

The remaining case occurs when $\kappa$ has uncountable cofinality. In this case, there is a club set $C\subseteq\kappa$ of ordinals $\lambda$ with $V_\lambda\prec V_\kappa$. (We can just intersect the clubs $C_n$ of the $\Sigma_n$-correct cardinals.) Now, we build a well-order of $V_\kappa$ that is definably generic for every $V_\lambda$ for $\lambda\in C$. At limits, this is free, since every definable dense set in $V_\lambda$ with parameters below $\lambda$ is also definable in some earlier $V_{\lambda'}$. So it just reduces to the successor case, which we can get by the arguments above (or by induction). The next correct cardinal above any ordinal has countable cofinality, since if one considers the next $\Sigma_1$-correct cardinal, the next $\Sigma_2$-correct cardinal, and so on, the limit will be fully correct and have cofinality $\omega$.
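The claim about countable cofinality can be unpacked a little. This is a sketch under my own choice of notation: $\beta<\kappa$ is an arbitrary ordinal, $\lambda_n$ is the least $\Sigma_n$-correct cardinal above $\beta$, and $\lambda^*$ is their supremum, none of which are named in the argument above.

```latex
% Sketch: the next fully correct cardinal above beta has cofinality omega.
% Assumed notation: beta < kappa arbitrary, cf(kappa) > omega, lambda_n and lambda^* as below.
\[
  \lambda_n=\min\{\lambda>\beta : V_\lambda\prec_{\Sigma_n}V_\kappa\},\qquad
  \lambda_1\le\lambda_2\le\cdots,\qquad
  \lambda^*=\sup_n\lambda_n<\kappa .
\]
% The Sigma_n-correct cardinals are closed, and lambda_m is Sigma_n-correct for m >= n:
\[
  V_{\lambda^*}\prec_{\Sigma_n}V_\kappa \text{ for every } n,
  \ \text{ so } V_{\lambda^*}\prec V_\kappa .
\]
% Any fully correct cardinal above beta is at least every lambda_n, so lambda^* is the least one.
% The sequence is not eventually constant: V_kappa sees a Sigma_n-correct cardinal above beta,
% hence so does V_{lambda^*}, and such a delta is Sigma_n-correct in V_kappa as well:
\[
  V_{\lambda^*}\models\exists\delta>\beta\ (\delta \text{ is } \Sigma_n\text{-correct})
  \;\Longrightarrow\;
  \lambda_n\le\delta<\lambda^* \text{ for every } n,
  \ \text{ so } \operatorname{cf}(\lambda^*)=\omega .
\]
```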

The conclusion is that every worldly cardinal $\kappa$ admits a definably generic global well-order on $V_\kappa$ and therefore also admits a Gödel-Bernays GBC set theory structure $\langle V_\kappa,\in,\mathcal{X}\rangle$, including the axiom of global choice.

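The argument relativizes to any particular amenable class $A\subseteq V_\kappa$. Namely, if $\langle V_\kappa,\in,A\rangle$ is a model of $\text{ZFC}(A)$, then there is a definably generic well order $\triangleleft$ of $V_\kappa$ such that $\langle V_\kappa,\in,A,\triangleleft\rangle$ is a model of $\text{ZFC}(A,\triangleleft)$, and so by taking the classes definable from $A$ and $\triangleleft$, we get a GBC structure $\mathcal{X}$ including both $A$ and $\triangleleft$.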

This latter observation will be put to good use in connection with Emma’s work on the Tarski’s revenge axiom, in regard to finding the optimal consistency strength for one of the principles.