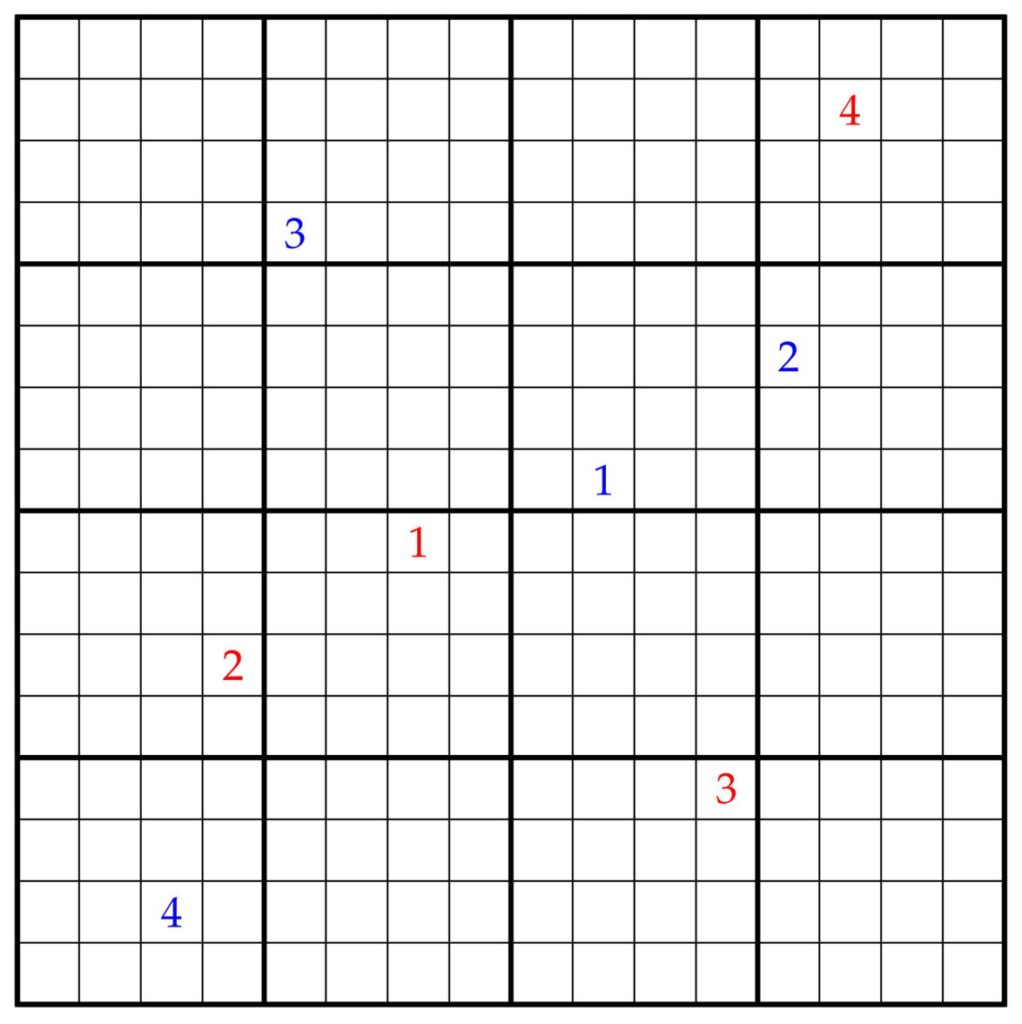

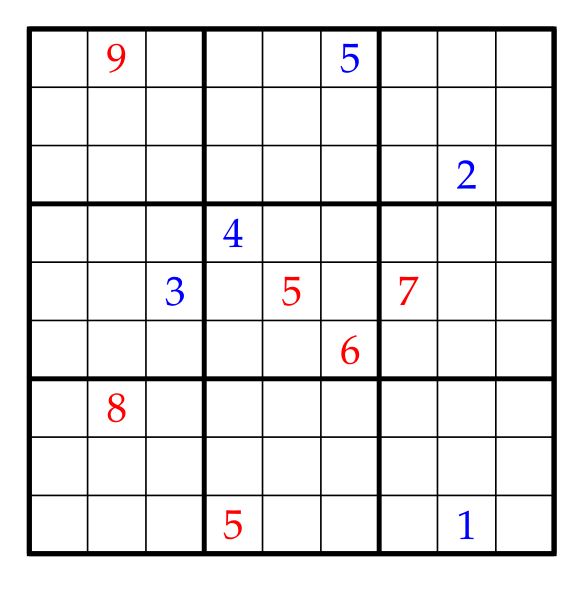

Consider what I call the Sudoku game, recently introduced in the MathOverflow question Who wins two-player Sudoku? posted by Christopher King (user PyRulez). Two players take turns placing numbers on a Sudoku board, obeying the rule that they must never explicitly violate the Sudoku condition: the numbers on any row, column or sub-board square must never repeat. The first player who cannot continue legal play loses. Who wins the game? What is the winning strategy?

The game is not about building a global Sudoku solution, since a move can be legal in this game even when it is not part of any global Sudoku solution, provided only that it doesn’t yet explicitly violate the Sudoku condition. Rather, the Sudoku game is about trying to trap your opponent in a maximal such position, a position which does not yet explicitly violate the Sudoku condition but which cannot be further extended.

In my answer to the question on MathOverflow, I followed an idea suggested to me by my daughter Hypatia, namely that on even-sized boards $n^2\times n^2$ where $n$ is even, then the second player can win with a mirroring strategy: simply copy the opponent’s moves in reflected mirror image through the center of the board. In this way, the second player ensures that the position on the board is always symmetric after her play, and so if the previous move was safe, then her move also will be safe by symmetry. This is therefore a winning strategy for the second player, since any violation of the Sudoku condition will arise on the opponent’s play.

This argument works on even-sized boards precisely because the reflection of every row, column and sub-board square is a totally different row, column and sub-board square, and so any new violation of the Sudoku conditions would reflect to a violation that was already there. The mirror strategy definitely does not work on the odd-sized boards, including the main $9\times 9$ case, since if the opponent plays on the central row, copying directly would immediately introduce a Sudoku violation.

After posting that answer, Orson Peters (user orlp) pointed out that one can modify it to form a winning strategy for the first player on odd-sized boards, including the main $9\times 9$ case. In this case, let the first player begin by playing $5$ in the center square, and then afterwards copy the opponent’s moves, but with the ten’s complement at the reflected location. So if the opponent plays $x$, then the first player plays $10-x$ at the reflected location. In this way, the first player can ensure that the board is ten’s complement symmetric after her moves. The point is that again this is sufficient to know that she will never introduce a violation, since if her $10-x$ appears twice in some row, column or sub-board square, then $x$ must have already appeared twice in the reflected row, column or sub-board square before that move.

This idea is fully general for odd-sized Sudoku boards $n^2\times n^2$, where $n$ is odd. If $n=2k-1$, then the first player starts with $k$ in the very center and afterward plays the $2k$-complement of her opponent’s move at the reflected location.

Conclusion.

- On even-sized Sudoku boards, the second player wins the Sudoku game by the mirror copying strategy.

- On odd-sized Sudoku boards, the first players wins the Sudoku game by the complement-mirror copying strategy.

Note that on the even boards, the second player could also play complement mirror copying just as successfully.

What I really want to tell you about, however, is the infinite Sudoku game (following a suggestion of Sam Hopkins). Suppose that we try to play the Sudoku game on a board whose subboard squares are $\mathbb{Z}\times\mathbb{Z}$, so that the full board is a $\mathbb{Z}\times\mathbb{Z}$ array of those squares, making $\mathbb{Z}^2\times\mathbb{Z}^2$ altogether. (Or perhaps you might prefer the board $\mathbb{N}^2\times\mathbb{N}^2$?)

One thing to notice is that on an infinite board, it is no longer possible to get trapped at a finite stage of play, since every finite position can be extended simply by playing a totally new label from the set of labels; such a move would never lead to a new violation of the explicit Sudoku condition.

For this reason, I should like to introduce the Sudoku Solver-Spoiler game variation as follows. There are two players: the Sudoku Solver and the Sudoku Spoiler. The Solver is trying to build a global Sudoku solution on the board, while the Spoiler is trying to prevent this. Both players must obey the Sudoku condition that labels are never to be explicitly repeated in any row, column or sub-board square. On an infinite board, the game proceeds transfinitely, until the board is filled or there are no legal moves. The Solver wins a play of the game, if she successfully builds a global Sudoku solution, which means not only that every location has a label and there are no repetitions in any row, column or sub-board square, but also that every label in fact appears in every row, column and sub-board square. That is, to count as a solution, the labels on any row, column and sub-board square must be a bijection with the set of labels. (On infinite boards, this is a stronger requirement than merely insisting on no repetitions.)

The Solver-Spoiler game makes sense in complete generality on any set $S$, whether finite or infinite. The sub-boards are $S^2=S\times S$, and one has an $S\times S$ array of them, so $S^2\times S^2$ for the whole board. Every row and column has the same size as the sub-board square $S^2$, and the set of labels should also have this size.

Upon reflection, one realizes that what matters about $S$ is just its cardinality, and we really have for every cardinal $\kappa$ the $\kappa$-Sudoku Solver-Spoiler game, whose board is $\kappa^2\times\kappa^2$, a $\kappa\times\kappa$ array of $\kappa\times\kappa$ sub-boards. In particular, the game $\mathbb{Z}^2\times\mathbb{Z}^2$ is actually isomorphic to the game $\mathbb{N}^2\times\mathbb{N}^2$, despite what might feel initially like a very different board geometry.

What I claim is that the Solver has a winning strategy in the all the infinite Sudoku Solver-Spoiler games, in a very general and robust manner.

Theorem. For every infinite cardinal $\kappa$, the Solver has a winning strategy to win the $\kappa$-Sudoku Solver-Spoiler game.

- The strategy will win in $\kappa$ many moves, producing a full Sudoku solution.

- The Solver can win whether she goes first or second, starting from any legal position of size less than $\kappa$.

- The Solver can win even when the Spoiler is allowed to play finitely many labels at once on each turn, or fewer than $\kappa$ many moves (if $\kappa$ is regular), even if the Solver is only allowed one move each turn.

- In the countably infinite Sudoku game, the Solver can win even if the Spoiler is allowed to make infinitely many moves at once, provided only that the resulting position can in principle be extended to a full solution.

Proof. Consider first the countably infinite Sudoku game, and assume the initial position is finite and that the Spoiler will make finitely many moves on each turn. Consider what it means for the Solver to win at the limit. It means, first of all, that there are no explicit repetitions in any row, column or sub-board. This requirement will be ensured since it is part of the rules for legal play not to violate it. Next, the Solver wants to ensure that every square has a label on it and that every label appears at least once in every row, every column and every sub-board. If we think of these as individual specific requirements, we have countably many requirements in all, and I claim that we can arrange that the Solver will simply satisfy the $n^{th}$ requirement on her $n^{th}$ play. Given any finite position, she can always find something to place in any given square, using a totally new label if need be. Given any finite position, any row and any particular label $k$, since can always find a place on that row to place the label, which has no conflict with any column or sub-board, since there are infinitely many to choose from and only finitely many conflicts. Similarly with columns and sub-boards. So each of the requirements can always be fulfilled one-at-a-time, and so in $\omega$ many moves she can produce a full solution.

The argument works equally well no matter who goes first or if the Spoiler makes arbitrary finite play, or indeed even infinite play, provided that the play is part of some global solution (perhaps a different one each time), since on each move the Solve can simply meet the requirement by using that solution at that stage.

An essentially similar argument works when $\kappa$ is uncountable, although now the play will proceed for $\kappa$ many steps. Assuming $\kappa^2=\kappa$, a consequence of the axiom of choice, there are $\kappa$ many requirements to meet, and the Solve can meet requirement $\alpha$ on the $\alpha^{th}$ move. If $\kappa$ is regular, we can again allow the Spoiler to make arbitrary size-less-than-$\kappa$ size moves, so that at any stage of play before $\kappa$ the position will still be size less than $\kappa$. (If $\kappa$ is singular, one can allow Spoiler to make finitely many moves at once or indeed even some uniform bounded size $\delta<\kappa$ many moves at once. $\Box$

I find it interesting to draw out the following aspect of the argument:

Observation. Every finite labeling of an infinite Sudoku board that does not yet explicitly violate the Sudoku condition can be extended to a global solution.

Similarly, any size less than $\kappa$ labeling that does not yet explicitly violate the Sudoku condition can be extended to a global solution of the $\kappa$-Sudoku board for any infinite cardinal $\kappa$.

What about asymmetric boards? It has come to my attention that people sometimes look at asymmetric Sudoku boards, whose sub-boards are not square, such as in the six-by-six Sudoku case. In general, one could take Sudoku boards to consist of a $\lambda\times\kappa$ array of sub-boards of size $\kappa\times\lambda$, where $\kappa$ and $\lambda$ are cardinals, not necessarily the same size and not necessarily both infinite or both finite. How does this affect the arguments I’ve given?

In the finite $(n\times m)\times (m\times n)$ case, if one of the numbers is even, then it seems to me that the reflection through the origin strategy works for the second player just as before. And if both are odd, then the first player can again play in the center square and use the mirror-complement strategy to trap the opponent. So that analysis will work fine.

In the case $(\kappa\times\lambda)\times(\lambda\times\kappa)$ where $\lambda\leq\kappa$ and $\kappa=\lambda\kappa$ is infinite, then the proof of the theorem seems to break, since if $\lambda<\kappa$, then with only $\lambda$ many moves, say putting a common symbol in each of the $\lambda$ many rectangles across a row, we can rule out that symbol in a fixed row. So this is a configuration of size less than $\kappa$ that cannot be extended to a full solution. For this reason, it seems likely to me that the Spoiler can win the Sudoko Solver-Spoiler game in the infinite asymmetric case.

Finally, let’s consider the Sudoku Solver-Spoiler game in the purely finite case, which actually is a very natural game, perhaps more natural than what I called the Sudoku game above. It seems to me that the Spoiler should be able to win the Solver-Spoiler game on any nontrivial finite board. But I don’t yet have an argument proving this. I asked a question on MathOverflow: The Sudoku game: Solver-Spoiler variation.

![By Alfred Le Petit (1841-1909) ([1]) [Public domain], via Wikimedia Commons](http://jdh.hamkins.org/wp-content/uploads/2018/03/Alfred_Le_Petit_-_Autoportrait_1893.jpg)